Tools and Tips For Data Warehouse Developers (SQLGLA)

Tools and Tips For Data Warehouse Developers (Presented at SQLGLA in Glasgow, Scotland on September 14th, 2018)

Recommended

Deliver Your Modern Data Warehouse (Microsoft Tech Summit Oslo 2018)

Cathrine Wilhelmsen: Deliver Your Modern Data Warehouse (Presented at Microsoft Tech Summit in Oslo on December 6th, 2018)

Microsoft Data Integration Pipelines: Azure Data Factory and SSIS

Mark Kromer: The document discusses tools for building ETL pipelines to consume hybrid data sources and load data into analytics systems at scale. It describes how Azure Data Factory and SQL Server Integration Services can be used to automate pipelines that extract, transform, and load data from both on-premises and cloud data stores into data warehouses and data lakes for analytics. Specific patterns shown include analyzing blog comments, sentiment analysis with machine learning, and loading a modern data warehouse.

Microsoft Build 2018 Analytic Solutions with Azure Data Factory and Azure SQL...

Mark Kromer: From MS Build 2018: Microsoft Build 2018 Analytic Solutions with Azure Data Factory and Azure SQL Data Warehouse

ETL in the Cloud With Microsoft Azure

Mark Kromer: Short introduction to different options for ETL & ELT in the Cloud with Microsoft Azure. This is a small accompanying set of slides for my presentations and blogs on this topic.

Building Data Lakes with Apache Airflow

Gary Stafford: Build a simple Data Lake on AWS using a combination of services, including Amazon Managed Workflows for Apache Airflow (Amazon MWAA), AWS Glue, AWS Glue Studio, Amazon Athena, and Amazon S3.

Blog post and link to the video: https://garystafford.medium.com/building-a-data-lake-with-apache-airflow-b48bd953c2b

DataOps for the Modern Data Warehouse on Microsoft Azure @ NDCOslo 2020 - Lac...

Lace Lofranco: Talk description:

The Modern Data Warehouse architecture is a response to the emergence of Big Data, Machine Learning and Advanced Analytics. DevOps is a key aspect of successfully operationalising a multi-source Modern Data Warehouse.

While there are many examples of how to build CI/CD pipelines for traditional applications, applying these concepts to Big Data Analytical Pipelines is a relatively new and emerging area. In this demo heavy session, we will see how to apply DevOps principles to an end-to-end Data Pipeline built on the Microsoft Azure Data Platform with technologies such as Data Factory, Databricks, Data Lake Gen2, Azure Synapse, and AzureDevOps.

Resources: https://aka.ms/mdw-dataops

ADF Mapping Data Flow Private Preview Migration

Mark Kromer: #Microsoft #Azure #DataFactory #MappingDataFlows instructions for migrating off private preview to ADF V2

Eugene Polonichko "Architecture of modern data warehouse"

Lviv Startup Club: The document discusses the architecture of a modern data warehouse using Microsoft technologies. It describes traditional data warehousing approaches and outlines ten characteristics of a modern data warehouse. It then details Microsoft's approach using Azure Data Factory to ingest diverse data types into Azure Blob Storage, Azure Databricks for analytics and data transformation, and Azure SQL Data Warehouse for combined structured data. It also discusses technologies for storage, visualization, and links for further information.

Microsoft Azure BI Solutions in the Cloud

Mark Kromer: This document provides an overview of several Microsoft Azure cloud data and analytics services:

- Azure Data Factory is a data integration service that can move and transform data between cloud and on-premises data stores as part of scheduled or event-driven workflows.

- Azure SQL Data Warehouse is a cloud data warehouse that provides elastic scaling for large BI and analytics workloads. It can scale compute resources on demand.

- Azure Machine Learning enables building, training, and deploying machine learning models and creating APIs for predictive analytics.

- Power BI provides interactive reports, visualizations, and dashboards that can combine multiple datasets and be embedded in applications.

Introduction to Azure Data Factory

Slava Kokaev: Azure Data Factory is a cloud-based data integration service that orchestrates and automates the movement and transformation of data. In this session we will learn how to create data integration solutions using the Data Factory service and ingest data from various data stores, transform/process the data, and publish the result data to the data stores.

Analyzing StackExchange data with Azure Data Lake

BizTalk360: Big data is the new big thing where storing the data is the easy part. Gaining insights in your pile of data is something different. Based on a data dump of the well-known StackExchange websites, we will store & analyse 150+ GB of data with Azure Data Lake Store & Analytics to gain some insights about their users. After that we will use Power BI to give an at a glance overview of our learnings.

If you are a developer that is interested in big data, this is your time to shine! We will use our existing SQL & C# skills to analyse everything without having to worry about running clusters.

Data quality patterns in the cloud with ADF

Mark Kromer: Azure Data Factory can be used to build modern data warehouse patterns with Azure SQL Data Warehouse. It allows extracting and transforming relational data from databases and loading it into Azure SQL Data Warehouse tables optimized for analytics. Data flows in Azure Data Factory can also clean and join disparate data from Azure Storage, Data Lake Store, and other data sources for loading into the data warehouse. This provides simple and productive ETL capabilities in the cloud at any scale.

Modern ETL: Azure Data Factory, Data Lake, and SQL Database

Eric Bragas: This document discusses modern Extract, Transform, Load (ETL) tools in Azure, including Azure Data Factory, Azure Data Lake, and Azure SQL Database. It provides an overview of each tool and how they can be used together in a data warehouse architecture with Azure Data Lake acting as the data hub and Azure SQL Database being used for analytics and reporting through the creation of data marts. It also includes two demonstrations, one on Azure Data Factory and another showing Azure Data Lake Store and Analytics.

Azure Data Factory for Redmond SQL PASS UG Sept 2018

Mark Kromer: Azure Data Factory is a fully managed data integration service in the cloud. It provides a graphical user interface for building data pipelines without coding. Pipelines can orchestrate data movement and transformations across hybrid and multi-cloud environments. Azure Data Factory supports incremental loading, on-demand Spark, and lifting SQL Server Integration Services packages to the cloud.

R in Power BI

Eric Bragas: An introduction to using R in Power BI via the various touch points such as R script data sources, R transformations, custom R visuals, and the community gallery of R visualizations.

BTUG - Dec 2014 - Hybrid Connectivity Options

Michael Stephenson: This document discusses various integration patterns and architectures that involve Microsoft Azure and BizTalk Server. It presents questions that customers may ask about integration solutions. It also provides examples of hybrid integration architectures that leverage Azure services like Service Bus along with on-premises BizTalk Server. The document aims to help customers analyze requirements and evaluate different architectural options for their integration needs.

Using Redash for SQL Analytics on Databricks

Databricks: This talk gives a brief overview with a demo performing SQL analytics with Redash and Databricks. We will introduce some of the new features coming as part of our integration with Databricks following the acquisition earlier this year, along with a demo of the other Redash features that enable a productive SQL experience on top of Delta Lake.

Develop scalable analytical solutions with Azure Data Factory & Azure SQL Dat...

Microsoft Tech Community: In this session you will learn how to develop data pipelines in Azure Data Factory and build a cloud-based analytical solution adopting modern data warehouse approaches with Azure SQL Data Warehouse and implementing incremental ETL orchestration at scale. With the multiple sources and types of data available in an enterprise today, Azure Data Factory enables full integration of data and direct storage in Azure SQL Data Warehouse for powerful, high-performance query workloads, which drive a majority of enterprise and business intelligence applications.

Modern data warehouse

Rakesh Jayaram: Modern DW Architecture

- The document discusses modern data warehouse architectures using Azure cloud services like Azure Data Lake, Azure Databricks, and Azure Synapse. It covers storage options like ADLS Gen 1 and Gen 2 and data processing tools like Databricks and Synapse. It highlights how to optimize architectures for cost and performance using features like auto-scaling, shutdown, and lifecycle management policies. Finally, it provides a demo of a sample end-to-end data pipeline.

Microsoft Azure Data Factory Hands-On Lab Overview Slides

Mark Kromer: This document outlines modules for a lab on moving data to Azure using Azure Data Factory. The modules will deploy necessary Azure resources, lift and shift an existing SSIS package to Azure, rebuild ETL processes in ADF, enhance data with cloud services, transform and merge data with ADF and HDInsight, load data into a data warehouse with ADF, schedule ADF pipelines, monitor ADF, and verify loaded data. Technologies used include PowerShell, Azure SQL, Blob Storage, Data Factory, SQL DW, Logic Apps, HDInsight, and Office 365.

Databricks: A Tool That Empowers You To Do More With Data

Databricks: In this talk we will present how Databricks has enabled the author to achieve more with data, enabling one person to build a coherent data project with data engineering, analysis and science components, with better collaboration and productionalization methods, on larger datasets, and faster.

The talk will include a demo that will illustrate how the multiple functionalities of Databricks help to build a coherent data project with Databricks jobs, Delta Lake and auto-loader for data engineering, SQL Analytics for Data Analysis, Spark ML and MLFlow for data science, and Projects for collaboration.

Integration Monday - Analysing StackExchange data with Azure Data Lake

Tom Kerkhove: Big data is the new big thing where storing the data is the easy part. Gaining insights in your pile of data is something different.

Based on a data dump of the well-known StackExchange websites, we will store & analyse 150+ GB of data with Azure Data Lake Store & Analytics to gain some insights about their users. After that we will use Power BI to give an at a glance overview of our learnings.

If you are a developer that is interested in big data, this is your time to shine! We will use our existing SQL & C# skills to analyse everything without having to worry about running clusters.

SharePoint User Group - Leeds - 2015-09-02

Michael Stephenson: The document discusses various hybrid connectivity options between on-premise systems and the Microsoft cloud, including using Azure Service Bus, Event Hubs, API apps, and BizTalk services to connect applications and data between on-premise and Azure. It also provides examples of how these options can be used to integrate systems like SAP, SharePoint, and line of business applications in a hybrid cloud environment. Overall the document serves as a guide to the different approaches for achieving hybrid connectivity between on-premise infrastructure and the Microsoft cloud platform.

Spark as a Service with Azure Databricks

Lace Lofranco: Presented at: Global Azure Bootcamp (Melbourne)

Participants will get a deep dive into one of Azure’s newest offerings: Azure Databricks, a fast, easy and collaborative Apache® Spark™ based analytics platform optimized for Azure. In this session, we will go through Azure Databricks' key collaboration features, cluster management, and tight data integration with Azure data sources. We’ll also walk through an end-to-end Recommendation System Data Pipeline built using Spark on Azure Databricks.

Azure Data Factory for Azure Data Week

Mark Kromer: The document discusses Azure Data Factory and its capabilities for cloud-first data integration and transformation. ADF allows orchestrating data movement and transforming data at scale across hybrid and multi-cloud environments using a visual, code-free interface. It provides serverless scalability without infrastructure to manage along with capabilities for lifting and running SQL Server Integration Services packages in Azure.

PowerBI v2, Power to the People, 1 year later

serge luca: PowerBI v2, Power to the People, 1 year later, with Serge Luca and Isabelle Van Campenhoudt (European SharePoint Conference 2015, Stockholm)

ADF Mapping Data Flows Training V2

Mark Kromer: Mapping Data Flow is a new feature of Azure Data Factory that allows users to build data transformations in a visual interface without code. It provides a serverless, scale-out transformation engine for processing big data with unstructured requirements. Mapping Data Flows can be operationalized with Data Factory's scheduling, control flow, and monitoring capabilities.

Azure Stream Analytics

Marco Parenzan: Modern business is fast and needs to make decisions immediately. It cannot wait for a traditional BI task that works on data snapshots taken at some point in time. Social data, the Internet of Things, and just-in-time processes don't understand "snapshots" and need to work on streaming, live data. Microsoft offers a PaaS solution to satisfy this need with Azure Stream Analytics. Let's see how it works.

Tools and Tips For Data Warehouse Developers (SQLSaturday Slovenia)

Cathrine Wilhelmsen: Tools and Tips For Data Warehouse Developers (Presented at SQLSaturday Slovenia on December 9th 2017)

Tools and Tips: From Accidental to Efficient Data Warehouse Developer (SQLSat...

Cathrine Wilhelmsen: Tools and Tips: From Accidental to Efficient Data Warehouse Developer (Presented at SQLSaturday Sacramento on July 23rd 2016)

Similar to Tools and Tips For Data Warehouse Developers (SQLGLA) (20)

Tools and Tips: From Accidental to Efficient Data Warehouse Developer (SQLBit...

Cathrine Wilhelmsen: Tools and Tips: From Accidental to Efficient Data Warehouse Developer (Presented at SQLBits XIV on March 6th 2015)

Tools and Tips: From Accidental to Efficient Data Warehouse Developer (SQLSat...

Cathrine Wilhelmsen: The document provides tips to help data warehouse developers become more efficient. It summarizes a presentation on tools and techniques for improving productivity when developing data warehouses and ETL processes. The presentation covers topics like visual features in SQL Server Management Studio, shortcuts, templates, snippets, query analysis, activity monitoring, and using Biml to generate SSIS packages from metadata. Attendees are encouraged to visit the speaker's website for more details and resources from the presentation.

Tools and Tips: From Accidental to Efficient Data Warehouse Developer (24 Hou...

Cathrine Wilhelmsen: Tools and Tips: From Accidental to Efficient Data Warehouse Developer (Presented at 24 Hours of PASS: Summit Preview on July 20th 2017)

There's a shortcut for that! SSMS Tips and Tricks (Denver SQL)

Cathrine Wilhelmsen: There's a shortcut for that! SSMS Tips and Tricks (Presented for the Denver SQL Server User Group on October 19th 2017)

SQL PASS BAC - 60 reporting tips in 60 minutes

Ike Ellis: This document contains 60 tips for reporting, SQL Server, Power BI, data visualization, and life hacks presented over 60 minutes. The tips include using color picker websites for SSRS, querying shortcuts in SSMS, commenting in SSMS, checking for indexes and clustered indexes in performance, and prettifying T-SQL scripts. It also provides tips for life such as starting a jar each year to track good things that happen, using dates tables in SQL for easy date calculations, and taking power strips when traveling. The document ends by thanking attendees and providing contact information for the presenter.

Ultimate Free SQL Server Toolkit

Kevin Kline: Free and useful tools have proliferated since the launch of the CodePlex and SourceForge websites. Join Kevin Kline, long-time author of the SQL Server Magazine column "Tool Time", as he profiles the very best of the free tools covered in his monthly column: dozens of free tools and utilities! Some of the covered tools help to:

- Track database growth

- Implement logging in SSIS job steps

- Stress test your database applications

- Automate important preventative maintenance tasks

- Automate maintenance tasks for Analysis Services

- Help protect against SQL Injection attacks

- Graphically manage Extended Events

- Utilize PowerShell scripts to ease administration

And much more. These tools are all free and independently supported by SQL Server enthusiasts around the world.

Bi Architecture And Conceptual Framework

Slava Kokaev: This document discusses business intelligence architecture and concepts. It covers topics like analysis services, SQL Server, data mining, integration services, and enterprise BI strategy and vision. It provides overviews of Microsoft's BI platform, conceptual frameworks, dimensional modeling, ETL processes, and data visualization systems. The goal is to improve organizational processes by providing critical business information to employees.

Top Ten Siemens S7 Tips and Tricks

DMC, Inc.: The document summarizes the presentation "Top Ten S7 Tips and Tricks" given at the 2011 Automation Summit. The presentation covered ten tips for programming Siemens S7 PLCs more efficiently, including using modular object-oriented architecture with function blocks and data types, monitoring function block instances, reporting system errors, using RAM disks and auto-generating symbol tables, activating and deactivating network nodes, basic safety programming, parsing data in local memory, backing up data block data, and useful keyboard shortcuts. The presenter was Nick Shea from DMC Engineering.

Azure Stream Analytics : Analyse Data in Motion

Azure Stream Analytics : Analyse Data in MotionRuhani Arora The document discusses evolving approaches to data warehousing and analytics using Azure Data Factory and Azure Stream Analytics. It provides an example scenario of analyzing game usage logs to create a customer profiling view. Azure Data Factory is presented as a way to build data integration and analytics pipelines that move and transform data between on-premises and cloud data stores. Azure Stream Analytics is introduced for analyzing real-time streaming data using a declarative query language.

Building Custom Big Data Integrations

Building Custom Big Data IntegrationsPat Patterson Big data ingest frameworks ship with an array of connectors for common data origins and destinations, such as flat files, S3, HDFS, Kafka etc, but sometimes, you need to send data to, or receive data from a system that's not on the list. StreamSets includes template code for building your own connectors and processors; we'll walk through the process of building a simple destination that sends data to a REST web service, and show how it can be extended to target more sophisticated systems such as Salesforce Wave Analytics.

Redgate Community Circle: Tools For SQL Server Performance Tuning

Redgate Community Circle: Tools For SQL Server Performance TuningGrant Fritchey This slide deck is in support of the live and video classes held by Redgate Software as part of the Community Circle initiative. This series of classes is meant to teach you the tools that can help to make query tuning easier in SQL Server. There are a number of different tools, all part of the fundamental Microsoft Data Platform offering, that can be used to help tune queries. This class outlines those tools and their uses.

SQL Pass Architecture SQL Tips & Tricks

SQL Pass Architecture SQL Tips & TricksIke Ellis This document provides 20 tips for SQL Server performance tuning, SSIS, SSRS, and other Microsoft data tools. The tips cover topics like using SSIS for accessibility, report formatting, hardware troubleshooting using PerfMon and tracing, readable presentations, indexing, windowing functions, scripting with PowerShell, TempDB configuration, prettifying SQL code, dates tables, enforcing business rules in the database, and logging. The document encourages staying involved with SQL Server user groups and provides contact information for the author.

Social media analytics using Azure Technologies

Social media analytics using Azure TechnologiesKoray Kocabas Social media are computer-mediated tools that allow people to create, share or exchange information, ideas, and pictures/videos in virtual communities and networks. In short, social media is everything to your customers, and your company needs to listen to them to understand them, make custom offers, or improve loyalty. The Azure Stream Analytics and HDInsight platforms can help with this. We'll focus on how to get Twitter data using Stream Analytics, how to enrich and store data using HDInsight, and what the challenges of sentiment analytics are when using Azure Machine Learning.

Assyst 9 Overview Roadmap

Assyst 9 Overview RoadmapDCL1963 The document summarizes new features and the roadmap for assyst software versions 9, 9 SP1, 9 SP2, and beyond. Key new features include assystDiscovery for device discovery, surveys, chat, and wiki enhancements. Future versions will focus on improved usability, knowledge management, reporting, and interoperability with the goal of optimizing service value and integrating IT further with business goals.

AnalysisServices

AnalysisServiceswebuploader The document summarizes Microsoft's SQL Server 2005 Analysis Services (SSAS). It provides an overview of SSAS capabilities such as data mining algorithms, unified dimensional modeling, scalability features, and integrated manageability with SQL Server. It also describes demos of the OLAP and data mining capabilities and how SSAS can be deployed and managed for scalability, availability, and serviceability.

60 reporting tips in 60 minutes - SQLBits 2018

60 reporting tips in 60 minutes - SQLBits 2018Ike Ellis Presentation to SQLBits 2018. For report writers that use PowerBI, T-SQL, Excel, SSRS, SSIS, and SSMS

Mstr meetup

Mstr meetupBhavani Akunuri BI Team @ LinkedIn hosted a user group meeting for MicroStrategy customers in bay area. Presentation includes information about LinkedIn, concepts of metadata driven model for business dashboards, customizations using SDK, JSP and JQUERY.

TestGuild and QuerySurge Presentation -DevOps for Data Testing

TestGuild and QuerySurge Presentation -DevOps for Data TestingRTTS This slide deck is from one of our 4 webinars in our half-day series in conjunction with Test Guild.

Chris Thompson and Mike Calabrese, Senior Solution Architects and QuerySurge experts, provide great information, a demo and lots of humor in this webinar on how to implement DevOps for Data in your DataOps pipeline.

This webinar was performed in conjunction with Test Guild.

To watch the video, go to:

https://youtu.be/1ihuRPgY_rs

More from Cathrine Wilhelmsen (20)

Fra utvikler til arkitekt: Skap din egen karrierevei ved å utvikle din person...

Fra utvikler til arkitekt: Skap din egen karrierevei ved å utvikle din person...Cathrine Wilhelmsen Fra utvikler til arkitekt: Skap din egen karrierevei ved å utvikle din personlige merkevare (Presented at Tekna in Oslo, Norway on April 23rd, 2024)

One Year in Fabric: Lessons Learned from Implementing Real-World Projects (PA...

One Year in Fabric: Lessons Learned from Implementing Real-World Projects (PA...Cathrine Wilhelmsen One Year in Fabric: Lessons Learned from Implementing Real-World Projects (Presented at PASS Data Community Summit on November 8th, 2024)

Data Factory in Microsoft Fabric (MsBIP #82)

Data Factory in Microsoft Fabric (MsBIP #82)Cathrine Wilhelmsen Data Factory in Microsoft Fabric (Presented at the Microsoft BI Professionals Denmark meeting #82 on April 15th, 2024)

Getting Started: Data Factory in Microsoft Fabric (Microsoft Fabric Community...

Getting Started: Data Factory in Microsoft Fabric (Microsoft Fabric Community...Cathrine Wilhelmsen Getting Started: Data Factory in Microsoft Fabric (Presented at Microsoft Fabric Community Conference on March 26th, 2024)

Choosing Between Microsoft Fabric, Azure Synapse Analytics and Azure Data Fac...

Choosing Between Microsoft Fabric, Azure Synapse Analytics and Azure Data Fac...Cathrine Wilhelmsen Choosing Between Microsoft Fabric, Azure Synapse Analytics and Azure Data Factory (Presented at SQLBits on March 23rd, 2024)

Website Analytics in My Pocket using Microsoft Fabric (SQLBits 2024)

Website Analytics in My Pocket using Microsoft Fabric (SQLBits 2024)Cathrine Wilhelmsen The document is about how the author Cathrine Wilhelmsen built her own website analytics dashboard using Microsoft Fabric and Power BI. She collects data from the Cloudflare API and stores it in Microsoft Fabric. This allows her to visualize and access the analytics data on her phone through a mobile app beyond the 30 days retention offered by Cloudflare. In her presentation, she demonstrates how she retrieves the website data, processes it with Microsoft Fabric pipelines, and visualizes it in Power BI for a self-hosted analytics solution.

Data Integration using Data Factory in Microsoft Fabric (ESPC Microsoft Fabri...

Data Integration using Data Factory in Microsoft Fabric (ESPC Microsoft Fabri...Cathrine Wilhelmsen Data Integration using Data Factory in Microsoft Fabric (Presented at the ESPC Microsoft Fabric Week on February 19th, 2024)

Choosing between Fabric, Synapse and Databricks (Data Left Unattended 2023)

Choosing between Fabric, Synapse and Databricks (Data Left Unattended 2023)Cathrine Wilhelmsen Choosing between Microsoft Fabric, Azure Synapse Analytics and Azure Databricks (Presented at Data Left Unattended on December 7th, 2023)

Data Integration with Data Factory (Microsoft Fabric Day Oslo 2023)

Data Integration with Data Factory (Microsoft Fabric Day Oslo 2023)Cathrine Wilhelmsen Cathrine Wilhelmsen gave a presentation on using Microsoft Data Factory for data integration within Microsoft Fabric. Data Factory allows users to define data pipelines to ingest, transform and orchestrate data workflows. Pipelines contain activities that can copy or move data between different data stores. Connections specify how to connect to these data stores. Dataflows Gen2 provide enhanced orchestration capabilities, including defining activity dependencies and schedules. The presentation demonstrated how to use these capabilities in Data Factory for complex data integration scenarios.

The Battle of the Data Transformation Tools (PASS Data Community Summit 2023)

The Battle of the Data Transformation Tools (PASS Data Community Summit 2023)Cathrine Wilhelmsen The Battle of the Data Transformation Tools (Presented as part of the "Battle of the Data Transformation Tools" Learning Path at PASS Data Community Summit on November 16th, 2023)

Visually Transform Data in Azure Data Factory or Azure Synapse Analytics (PAS...

Visually Transform Data in Azure Data Factory or Azure Synapse Analytics (PAS...Cathrine Wilhelmsen Visually Transform Data in Azure Data Factory or Azure Synapse Analytics (Presented as part of the "Battle of the Data Transformation Tools" Learning Path at PASS Data Community Summit on November 15th, 2023)

Building an End-to-End Solution in Microsoft Fabric: From Dataverse to Power ...

Building an End-to-End Solution in Microsoft Fabric: From Dataverse to Power ...Cathrine Wilhelmsen Building an End-to-End Solution in Microsoft Fabric: From Dataverse to Power BI (Presented at SQLSaturday Oregon & SW Washington on November 11th, 2023)

Website Analytics in my Pocket using Microsoft Fabric (AdaCon 2023)

Website Analytics in my Pocket using Microsoft Fabric (AdaCon 2023)Cathrine Wilhelmsen The document is about how the author created a mobile-friendly dashboard for her website analytics using Microsoft Fabric and Power BI. She collects data from the Cloudflare API and stores it in Microsoft Fabric. Then she visualizes the data in Power BI which can be viewed on her phone. This allows her to track website traffic and see which pages are most popular over time. She demonstrates her dashboard and discusses future improvements like comparing statistics across different time periods.

Choosing Between Microsoft Fabric, Azure Synapse Analytics and Azure Data Fac...

Choosing Between Microsoft Fabric, Azure Synapse Analytics and Azure Data Fac...Cathrine Wilhelmsen Choosing Between Microsoft Fabric, Azure Synapse Analytics and Azure Data Factory (Presented at Data Saturday Oslo on September 2nd, 2023)

Stressed, Depressed, or Burned Out? The Warning Signs You Shouldn't Ignore (D...

Stressed, Depressed, or Burned Out? The Warning Signs You Shouldn't Ignore (D...Cathrine Wilhelmsen Stressed, Depressed, or Burned Out? The Warning Signs You Shouldn't Ignore (Presented on September 2nd 2023 at Data Saturday Oslo)

Stressed, Depressed, or Burned Out? The Warning Signs You Shouldn't Ignore (S...

Stressed, Depressed, or Burned Out? The Warning Signs You Shouldn't Ignore (S...Cathrine Wilhelmsen Stressed, Depressed, or Burned Out? The Warning Signs You Shouldn't Ignore (Presented at SQLBits on March 18th, 2023)

We all experience stress in our lives. When the stress is time-limited and manageable, it can be positive and productive. This kind of stress can help you get things done and lead to personal growth. However, when the stress stretches out over longer periods of time and we are unable to manage it, it can be negative and debilitating. This kind of stress can affect your mental health as well as your physical health, and increase the risk of depression and burnout.

The tricky part is that both depression and burnout can hit you hard without the warning signs you might recognize from stress. Where stress barges through your door and yells "hey, it's me!", depression and burnout can silently sneak in and gradually make adjustments until one day you turn around and see them smiling while realizing that you no longer recognize your house. I know, because I've dealt with both. And when I thought I had kicked them out, they both came back for new visits.

I don't have the Answers™️ or Solutions™️ to how to keep them away forever. But in hindsight, there were plenty of warning signs I missed, ignored, or was oblivious to at the time. In this deeply personal session, I will share my story of dealing with both depression and burnout. What were the warning signs? Why did I miss them? Could I have done something differently? And most importantly, what can I - and you - do to help ourselves or our loved ones if we notice that something is not quite right?

"I can't keep up!" - Turning Discomfort into Personal Growth in a Fast-Paced ...

"I can't keep up!" - Turning Discomfort into Personal Growth in a Fast-Paced ...Cathrine Wilhelmsen "I can't keep up!" - Turning Discomfort into Personal Growth in a Fast-Paced World (Presented at SQLBits on March 17th, 2023)

Do you sometimes think the world is moving so fast that you're struggling to keep up?

Does it make you feel a little uncomfortable?

Awesome!

That means that you have ambitions. You want to learn new things, take that next step in your career, achieve your goals. You can do anything if you set your mind to it.

It just might not be easy.

All growth requires some discomfort. You need to manage and balance that discomfort, find a way to push yourself a little bit every day without feeling overwhelmed. In a fast-paced world, you need to know how to break down your goals into smaller chunks, how to prioritize, and how to optimize your learning.

Are you ready to turn your "I can't keep up" into "I can't believe I did all of that in just one year"?

Lessons Learned: Implementing Azure Synapse Analytics in a Rapidly-Changing S...

Lessons Learned: Implementing Azure Synapse Analytics in a Rapidly-Changing S...Cathrine Wilhelmsen Lessons Learned: Implementing Azure Synapse Analytics in a Rapidly-Changing Startup (Presented at SQLBits on March 11th, 2022)

What happens when you mix one rapidly-changing startup, one data analyst, one data engineer, and one hypothesis that Azure Synapse Analytics could be the right tool of choice for gaining business insights?

We had no idea, but we gave it a go!

Our ambition was to think big, start small, and act fast – to deliver business value early and often.

Did we succeed?

Join us for an honest conversation about why we decided to implement Azure Synapse Analytics alongside Power BI, how we got started, which areas we completely messed up at first, what our current solution looks like, the lessons learned along the way, and the things we would have done differently if we could start all over again.

6 Tips for Building Confidence as a Public Speaker (SQLBits 2022)

6 Tips for Building Confidence as a Public Speaker (SQLBits 2022)Cathrine Wilhelmsen 6 Tips for Building Confidence as a Public Speaker (Presented at SQLBits on March 10th, 2022)

Do you feel nervous about getting on stage to deliver a presentation?

That was me a few years ago. Palms sweating. Hands shaking. Voice trembling. I could barely breathe and talked at what felt like a thousand words per second. Now, public speaking is one of my favorite hobbies. Sometimes, I even plan my vacations around events! What changed?

There are no shortcuts to building confidence as a public speaker. However, there are many things you can do to make the journey a little easier for yourself. In this session, I share the top tips I have learned over the years. All it takes is a little preparation and practice.

You can do this!

Lessons Learned: Understanding Pipeline Pricing in Azure Data Factory and Azu...

Lessons Learned: Understanding Pipeline Pricing in Azure Data Factory and Azu...Cathrine Wilhelmsen Lessons Learned: Understanding Pipeline Pricing in Azure Data Factory and Azure Synapse Analytics (Presented at DataMinutes #2 on January 21st, 2022)

Recently uploaded (20)

Template_A3nnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnn

Template_A3nnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnncegiver630 Telangana State, India’s newest state that was carved from the erstwhile state of Andhra Pradesh in 2014 has launched the Water Grid Scheme named as ‘Mission Bhagiratha (MB)’ to seek a permanent and sustainable solution to the drinking water problem in the state. MB is designed to provide potable drinking water to every household in their premises through piped water supply (PWS) by 2018. The vision of the project is to ensure safe and sustainable piped drinking water supply from surface water sources

FPET_Implementation_2_MA to 360 Engage Direct.pptx

FPET_Implementation_2_MA to 360 Engage Direct.pptxssuser4ef83d Engage Direct 360 marketing optimization sas entreprise miner

4. Multivariable statistics_Using Stata_2025.pdf

4. Multivariable statistics_Using Stata_2025.pdfaxonneurologycenter1 Multivariable statistics_Using Stata

Developing Security Orchestration, Automation, and Response Applications

Developing Security Orchestration, Automation, and Response ApplicationsVICTOR MAESTRE RAMIREZ Developing Security Orchestration, Automation, and Response Applications

Deloitte Analytics - Applying Process Mining in an audit context

Deloitte Analytics - Applying Process Mining in an audit contextProcess mining Evangelist Mieke Jans is a Manager at Deloitte Analytics Belgium. She learned about process mining from her PhD supervisor while she was collaborating with a large SAP-using company for her dissertation.

Mieke extended her research topic to investigate the data availability of process mining data in SAP and the new analysis possibilities that emerge from it. It took her 8-9 months to find the right data and prepare it for her process mining analysis. She needed insights from both process owners and IT experts. For example, one person knew exactly how the procurement process took place at the front end of SAP, and another person helped her with the structure of the SAP-tables. She then combined the knowledge of these different persons.

Process Mining and Data Science in the Financial Industry

Process Mining and Data Science in the Financial IndustryProcess mining Evangelist Lalit Wangikar, a partner at CKM Advisors, is an experienced strategic consultant and analytics expert. He started looking for data driven ways of conducting process discovery workshops. When he read about process mining the first time around, about 2 years ago, the first feeling was: “I wish I knew of this while doing the last several projects!".

Interviews are subject to all the whims human recollection is subject to: specifically, recency, simplification and self preservation. Interview-based process discovery, therefore, leaves out a lot of “outliers” that usually end up being one of the biggest opportunity area. Process mining, in contrast, provides an unbiased, fact-based, and a very comprehensive understanding of actual process execution.

Safety Innovation in Mt. Vernon A Westchester County Model for New Rochelle a...

Safety Innovation in Mt. Vernon A Westchester County Model for New Rochelle a...James Francis Paradigm Asset Management By James Francis, CEO of Paradigm Asset Management

In the landscape of urban safety innovation, Mt. Vernon is emerging as a compelling case study for neighboring Westchester County cities. The municipality’s recently launched Public Safety Camera Program not only represents a significant advancement in community protection but also offers valuable insights for New Rochelle and White Plains as they consider their own safety infrastructure enhancements.

Perencanaan Pengendalian-Proyek-Konstruksi-MS-PROJECT.pptx

Perencanaan Pengendalian-Proyek-Konstruksi-MS-PROJECT.pptxPareaRusan planning and calculation monitoring project

Simple_AI_Explanation_English somplr.pptx

Simple_AI_Explanation_English somplr.pptxssuser2aa19f Ai artificial intelligence ai with python course first upload

Tools and Tips For Data Warehouse Developers (SQLGLA)

- 1. Tools and Tips for Data Warehouse Developers Cathrine Wilhelmsen SQLGLA · September 14th 2018

- 2. Gold Sponsors Silver Sponsors Bronze Sponsors Local Partners

- 3. Cathrine Wilhelmsen @cathrinew cathrinew.net Senior Business Intelligence Consultant, Inmeta

- 4. once upon a time...

- 6. how I felt…

- 8. how I want to be...

- 10. Today? SQL Tools and Tips Free Scripts and Resources Biml for SSIS

- 12. Visual Information in SSMS

- 14. Status Bar and Tab Text

- 15. Results in Separate Tab

- 16. Tab Groups - Vertical

- 17. Tab Groups - Horizontal

- 18. Split Query in Two

- 19. Pin Tabs

- 21. Query Shortcuts

- 22. Keyboard Shortcuts: Assign shortcuts you frequently use. Remove shortcuts you accidentally click. docs.microsoft.com/en-us/sql/ssms/sql-server-management-studio-keyboard-shortcuts

- 23. Magic keys! HOME, END, PG UP, PG DN, CTRL, ALT, SHIFT, TAB

- 24. CTRL + R: Show / Hide Query Results

- 25. SHIFT + ALT + ENTER: Toggle Full Screen

- 28. SHIFT + ALT: Column / Multi-Line Editing

- 29. Comment / Uncomment: CTRL + K, CTRL + C to Comment Line. CTRL + K, CTRL + U to Uncomment Line.

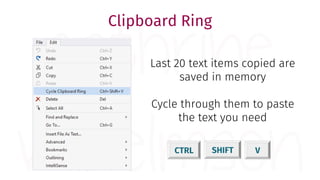

- 30. Clipboard Ring (CTRL + SHIFT + V): Last 20 text items copied are saved in memory. Cycle through them to paste the text you need.

- 31. Too many shortcuts? Use Quick Launch (CTRL + Q)! …or just ALT + Keyboard!

- 32. Keyboard Shortcuts Cheat Sheet by Andy Mallon: am2.co/shortcuts

- 33. SSMS Shortcuts and Secrets by Kendra Little: SQLWorkbooks.com/SSMS

- 34. Templates and Snippets in SSMS

- 35. Templates: Template Browser (CTRL + ALT + T). Create Templates.

- 36. Template Parameters (CTRL + SHIFT + M): Replace Template Parameters with actual values.

- 37. Snippets: CTRL + K, CTRL + X to Insert Snippet. CTRL + K, CTRL + S to Surround With Snippet.

- 38. Advanced Snippets and Formatting, Favorites: Redgate SQL Prompt (Licensed, red-gate.com), SSMS Tools Pack (Licensed, ssmstoolspack.com)

- 39. Advanced Snippets and Formatting, Alternatives: ApexSQL Complete / Refactor (apexsql.com), SSMS Boost (ssmsboost.com), Poor Man's T-SQL Formatter (poorsql.com), dbForge SQL Complete (Licensed, devart.com/dbforge), SQL Formatter (sql-format.com), SqlSmash (sqlsmash.com)

- 40. Search in SSMS

- 41. Free Tool: Redgate SQL Search red-gate.com/products/sql-development/sql-search/

- 42. Free Tool: Redgate SQL Search

- 43. Tools: Search in SSMS: Redgate SQL Search (red-gate.com), ApexSQL Search (apexsql.com), SSMS Tools Pack (Licensed, ssmstoolspack.com), SSMS Boost (ssmsboost.com)

- 44. Registered Servers and Multiserver Queries in SSMS

- 45. Registered Servers (CTRL + ALT + G): Save and group servers. Is the server running?

- 46. Manage services from SSMS

- 49. Query Analysis in SSMS

- 50. Execution Plans: Display Estimated Execution Plan (CTRL + L). Include Actual Execution Plan (CTRL + M).

- 51. Execution Plans See how a query will be executed:

- 54. Live Query Statistics Include Live Query Statistics

- 55. Live Query Statistics Include Live Query Statistics

- 56. Free Tool: SQL Sentry Plan Explorer sqlsentry.com/products/plan-explorer

- 57. Free Tool: SQL Sentry Plan Explorer answers.sqlperformance.com

- 58. red-gate.com/books Free Book: SQL Server Execution Plans by Grant Fritchey

- 59. Query Statistics in SSMS

- 60. Statistics IO: SET STATISTICS IO OFF; SET STATISTICS IO ON;

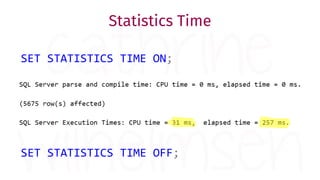
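With SET STATISTICS IO ON, SQL Server writes one line per table to the Messages tab, in the form `Table 'Name'. Scan count 1, logical reads 52, ...`. The sketch below shows one way to total those counters per table, which is the kind of summary a statistics parsing tool produces. It is an illustration only, not the code of any tool mentioned in the deck: the function name `parse_statistics_io`, the table names, and the numbers in `SAMPLE` are all made up for the example.

```python
import re

# Matches one SET STATISTICS IO message line and captures the table name
# plus the comma-separated counters that follow it.
TABLE_LINE = re.compile(r"^Table '(?P<table>[^']+)'\.\s*(?P<counters>.*)$")

# Hypothetical Messages-tab output; table names and values are invented.
SAMPLE = """
Table 'Orders'. Scan count 1, logical reads 52, physical reads 0, read-ahead reads 0.
Table 'Customers'. Scan count 1, logical reads 8, physical reads 1, read-ahead reads 0.
Table 'Orders'. Scan count 2, logical reads 10, physical reads 0, read-ahead reads 0.
"""


def parse_statistics_io(messages: str) -> dict:
    """Sum every counter per table across all STATISTICS IO lines."""
    totals = {}
    for line in messages.splitlines():
        match = TABLE_LINE.match(line.strip())
        if not match:
            continue  # skip blank lines and unrelated messages
        counters = totals.setdefault(match.group("table"), {})
        for part in match.group("counters").rstrip(".").split(","):
            # "logical reads 52" -> name "logical reads", value "52"
            name, _, value = part.strip().rpartition(" ")
            if name and value.isdigit():
                key = name.lower()
                counters[key] = counters.get(key, 0) + int(value)
    return totals


for table, counters in parse_statistics_io(SAMPLE).items():
    print(table, counters)
```

Sorting the result by logical reads is usually the quickest way to spot the table that dominates a query's I/O.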

- 61. Statistics Time: SET STATISTICS TIME OFF; SET STATISTICS TIME ON;

- 62. Free Tool: Statistics Parser by Richie Rump, statisticsparser.com

- 63. Client Statistics: Include Client Statistics (SHIFT + ALT + S)

- 64. Client Statistics Compare multiple query executions:

- 65. Activity Monitoring in SSMS

- 66. whoisactive.com Free Script: sp_WhoIsActive by Adam Machanic

- 67. whoisactive.com Free Script: sp_WhoIsActive by Adam Machanic

- 68. SQL Ops Studio

- 70. Color Themes: CTRL + K, CTRL + T

- 71. Keyboard Shortcuts: CTRL + K, CTRL + S

- 72. Save and View Results Save as CSV

- 73. Save and View Results Save as JSON

- 74. Save and View Results Save as Excel

- 75. Save and View Results View as Chart

- 76. Save and View Results

- 78. Custom Snippets

- 81. Tools: Test Data and Mockups

- 84. Tools: Biml for SSIS

- 85. Of course I can create 200 SSIS Packages! …what do you need me to do after lunch?

- 86. Business Intelligence Markup Language: Easy to read and write XML language. Generate SSIS packages from metadata.

- 87. How does SSIS development work?

- 88. How does Biml development work?

- 89. What do you need?

- 91. Where can I learn more? Free online training: bimlscript.com

- 92. Biml on Monday… …BimlBreak the rest of the week!

- 93. @cathrinew cathrinew.net [email protected] Too fast? Not enough details? Don't worry! cathrinew.net/efficient
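Slide 86 describes Biml as an XML language for generating SSIS packages from metadata. As a rough illustration of that idea, the sketch below turns a list of table names into a Biml document with one empty package per table. It is not how the deck does it: real Biml projects typically generate this XML with BimlScript code nuggets inside a Biml tool, and Python is used here only as a stand-in. The function name `build_biml`, the `Load_` naming pattern, and the table names are assumptions made for the example.

```python
import xml.etree.ElementTree as ET

# XML namespace used by Biml documents.
BIML_NS = "http://schemas.varigence.com/biml.xsd"


def build_biml(table_names):
    """Return a Biml document with one empty SSIS package per table name."""
    # Serialize Biml elements with a default namespace instead of a prefix.
    ET.register_namespace("", BIML_NS)
    root = ET.Element(f"{{{BIML_NS}}}Biml")
    packages = ET.SubElement(root, f"{{{BIML_NS}}}Packages")
    for table in table_names:
        # Hypothetical naming convention: Load_<table>.
        ET.SubElement(
            packages,
            f"{{{BIML_NS}}}Package",
            {"Name": f"Load_{table}", "ConstraintMode": "Linear"},
        )
    return ET.tostring(root, encoding="unicode")


# The metadata would normally come from a database or a mapping file.
print(build_biml(["Customer", "Product", "SalesOrder"]))
```

The point of the pattern is that adding a table to the metadata adds a package, with no manual work in the SSIS designer.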