"Understanding Correlation and Regression: Key Concepts for Data Analysis"

- 2. Correlation: a statistical technique used to determine the degree to which two variables are related.

- 3. Scatter diagram
  - Rectangular coordinates
  - Two quantitative variables
  - One variable is called independent (X) and the second is called dependent (Y)
  - Points are not joined
  - No frequency table

- 4. Example

- 5. [Figure: scatter diagram of weight and systolic blood pressure; axes: Wt (kg) and SBP (mmHg).]

- 6. [Figure: scatter diagram of weight and systolic blood pressure; axes: Wt (kg) and SBP (mmHg).]

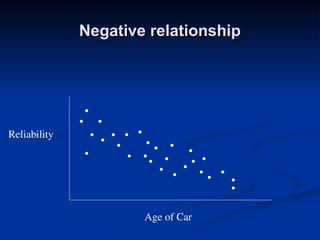

- 7. Scatter plots: the pattern of the data indicates the type of relationship between the two variables: a positive relationship, a negative relationship, or no relationship.

- 9. [Figure: plot with axes Age in Weeks and Height in CM.]

- 12. Correlation coefficient: a statistic showing the degree of relation between two variables.

- 13. Simple correlation coefficient (r): also called Pearson's correlation or the product-moment correlation coefficient. It measures the nature and strength of the association between two variables of the quantitative type.

- 14. The sign of r denotes the nature of the association, while the value of r denotes the strength of the association.

- 15. If the sign is +ve, the relation is direct (an increase in one variable is associated with an increase in the other variable, and a decrease in one variable is associated with a decrease in the other variable). If the sign is -ve, the relationship is inverse or indirect (an increase in one variable is associated with a decrease in the other).

- 16. The value of r ranges between -1 and +1. The value of r denotes the strength of the association, as illustrated by the following diagram. [Diagram: number line from -1 (perfect indirect correlation) through 0 (no relation) to +1 (perfect direct correlation), marked at ±0.25 and ±0.75: weak between 0 and ±0.25, intermediate between ±0.25 and ±0.75, strong between ±0.75 and ±1.]

- 17. Interpreting the size of r (the cut-offs apply to the magnitude of r; the sign gives the direction):
  - If r = 0: no association or correlation between the two variables.
  - If 0 < |r| < 0.25: weak correlation.
  - If 0.25 ≤ |r| < 0.75: intermediate correlation.
  - If 0.75 ≤ |r| < 1: strong correlation.
  - If |r| = 1: perfect correlation.
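
The cut-offs on this slide can be written as a small lookup. The sketch below is illustrative only: the function name `describe_correlation` is hypothetical, and it assumes the thresholds apply to the magnitude of r, as the diagram on the previous slide suggests.

```python
def describe_correlation(r: float) -> str:
    """Label r using the slide's cut-offs (0.25 and 0.75).

    Assumption: the cut-offs are applied to |r|; the sign only sets the direction.
    """
    if not -1.0 <= r <= 1.0:
        raise ValueError("r must lie between -1 and +1")
    if r == 0:
        return "no correlation"
    direction = "direct" if r > 0 else "inverse"
    size = abs(r)
    if size == 1:
        strength = "perfect"
    elif size >= 0.75:
        strength = "strong"
    elif size >= 0.25:
        strength = "intermediate"
    else:
        strength = "weak"
    return f"{strength} {direction} correlation"


print(describe_correlation(0.76))   # strong direct correlation
print(describe_correlation(-0.30))  # intermediate inverse correlation
```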

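- 18. How to compute the simple correlation coefficient (r):

  $$r = \frac{\sum xy - \dfrac{\sum x \sum y}{n}}{\sqrt{\left(\sum x^2 - \dfrac{(\sum x)^2}{n}\right)\left(\sum y^2 - \dfrac{(\sum y)^2}{n}\right)}}$$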

- 19. Example: A sample of 6 children was selected, and data about their age in years and weight in kilograms were recorded as shown in the following table. It is required to find the correlation between age and weight.

  | Serial no. | Age (years) | Weight (kg) |
  |---|---|---|
  | 1 | 7 | 12 |
  | 2 | 6 | 8 |
  | 3 | 8 | 12 |
  | 4 | 5 | 10 |
  | 5 | 6 | 11 |
  | 6 | 9 | 13 |

- 20. These 2 variables are of the quantitative type. One variable (age) is called the independent variable and denoted (X), and the other (weight) is called the dependent variable and denoted (Y). To find the relation between age and weight, compute the simple correlation coefficient using the following formula:

  $$r = \frac{\sum xy - \dfrac{\sum x \sum y}{n}}{\sqrt{\left(\sum x^2 - \dfrac{(\sum x)^2}{n}\right)\left(\sum y^2 - \dfrac{(\sum y)^2}{n}\right)}}$$
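
As a check on the formula, here is a minimal Python sketch that applies it to the six children in the example. The function name `pearson_r` is chosen for illustration; only the standard library is used.

```python
from math import sqrt


def pearson_r(x, y):
    """Simple (Pearson) correlation coefficient from raw sums."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    sum_x2 = sum(a * a for a in x)
    sum_y2 = sum(b * b for b in y)
    numerator = sum_xy - sum_x * sum_y / n
    denominator = sqrt((sum_x2 - sum_x ** 2 / n) * (sum_y2 - sum_y ** 2 / n))
    return numerator / denominator


age = [7, 6, 8, 5, 6, 9]           # X, years
weight = [12, 8, 12, 10, 11, 13]   # Y, kg

r = pearson_r(age, weight)
print(round(r, 2))  # 0.76
```

With these data the sums are Σx = 41, Σy = 66, Σxy = 461, Σx² = 291 and Σy² = 742, so r comes out at about 0.76, a direct correlation.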

- 21. Regression analyses. Regression: a technique concerned with predicting some variables by knowing others; the process of predicting variable Y using variable X.

- 22. Regression: uses a variable (x) to predict some outcome variable (y), and tells you how values in y change as a function of changes in values of x.

- 23. Correlation and regression: Correlation describes the strength of a linear relationship between two variables. Linear means “straight line”. Regression tells us how to draw the straight line described by the correlation.

- 24. Regression: calculates the “best-fit” line for a certain set of data. The regression line makes the sum of the squares of the residuals smaller than for any other line; that is, regression minimizes residuals. [Figure: fitted line over the scatter diagram; axis label: Wt (kg).]

- 25. Regression equation: describes the regression line mathematically, in terms of an intercept and a slope. [Figure: regression line with intercept and slope labelled; axes: Wt (kg) and SBP (mmHg).]

- 26. Linear equations:

  $$\hat{y} = bX + a$$

  where a = Y-intercept and b = slope = (change in Y) / (change in X).

- 27. By using the least squares method (a procedure that minimizes the sum of the squared vertical deviations of the plotted points from a straight line), we are able to construct a best-fitting straight line to the scatter diagram points and then formulate a regression equation in the form of:

  $$\hat{y} = a + bX, \qquad \text{or equivalently} \qquad \hat{y} = \bar{y} + b(x - \bar{x})$$

  $$b = \frac{\sum xy - \dfrac{\sum x \sum y}{n}}{\sum x^2 - \dfrac{(\sum x)^2}{n}}$$

- 28. Hours studying and grades

- 29. Regressing grades on hours. [Figure: linear regression of final grade in course on number of hours spent studying; fitted line: final grade in course = 59.95 + 3.17 × study; R-Square = 0.88.] Predicted final grade in class = 59.95 + 3.17 × (number of hours you study per week).

- 30. Predict the final grade of:
  - Someone who studies for 12 hours: final grade = 59.95 + (3.17 × 12) = 97.99
  - Someone who studies for 1 hour: final grade = 59.95 + (3.17 × 1) = 63.12

  Predicted final grade in class = 59.95 + 3.17 × (hours of study)
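
The two predictions above can be reproduced with a few lines of Python. This is only a sketch: the constant and function names are illustrative, and the coefficients are the ones quoted on the slide.

```python
INTERCEPT = 59.95  # a, from the fitted equation on the slide
SLOPE = 3.17       # b, grade points per weekly hour of study


def predicted_grade(hours: float) -> float:
    """Predicted final grade = 59.95 + 3.17 * hours of study."""
    return INTERCEPT + SLOPE * hours


for hours in (12, 1):
    print(f"{hours} h -> {predicted_grade(hours):.2f}")
# 12 h -> 97.99
# 1 h -> 63.12
```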

- 31. Exercise: A sample of 6 persons was selected; the values of their age (x variable) and their weight are shown in the following table. Find the regression equation and the predicted weight when age is 8.5 years.

- 32. Data:

  | Serial no. | Age (x) | Weight (y) |
  |---|---|---|
  | 1 | 7 | 12 |
  | 2 | 6 | 8 |
  | 3 | 8 | 12 |
  | 4 | 5 | 10 |
  | 5 | 6 | 11 |
  | 6 | 9 | 13 |

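- 33. Answer:

  | Serial no. | Age (x) | Weight (y) | xy | x² | y² |
  |---|---|---|---|---|---|
  | 1 | 7 | 12 | 84 | 49 | 144 |
  | 2 | 6 | 8 | 48 | 36 | 64 |
  | 3 | 8 | 12 | 96 | 64 | 144 |
  | 4 | 5 | 10 | 50 | 25 | 100 |
  | 5 | 6 | 11 | 66 | 36 | 121 |
  | 6 | 9 | 13 | 117 | 81 | 169 |
  | Total | 41 | 66 | 461 | 291 | 742 |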

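- 34. Means and slope:

  $$\bar{x} = \frac{41}{6} = 6.83, \qquad \bar{y} = \frac{66}{6} = 11$$

  $$b = \frac{461 - \dfrac{41 \times 66}{6}}{291 - \dfrac{(41)^2}{6}} = 0.92$$

  Regression equation:

  $$\hat{y}(x) = 11 + 0.92\,(x - 6.83)$$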

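- 35. Expanded form and predictions:

  $$\hat{y}(x) = 4.675 + 0.92x$$

  $$\hat{y}(8.5) = 4.675 + 0.92 \times 8.5 = 12.50 \text{ kg}$$

  $$\hat{y}(7.5) = 4.675 + 0.92 \times 7.5 = 11.58 \text{ kg}$$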
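
The same exercise can be checked in code. The sketch below applies the least squares formulas to the six data points; `least_squares_line` is an illustrative name, not something from the slides. Because it carries full precision, its intercept (about 4.69) and prediction at 8.5 years (about 12.54 kg) differ slightly from the slide's rounded figures of 4.675 and 12.50 kg.

```python
def least_squares_line(x, y):
    """Return (a, b) for the line y_hat = a + b * x fitted by least squares."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_x2 = sum(xi * xi for xi in x)
    b = (sum_xy - sum_x * sum_y / n) / (sum_x2 - sum_x ** 2 / n)
    a = sum_y / n - b * sum_x / n  # a = y_mean - b * x_mean
    return a, b


age = [7, 6, 8, 5, 6, 9]           # x, years
weight = [12, 8, 12, 10, 11, 13]   # y, kg

a, b = least_squares_line(age, weight)
print(f"y_hat = {a:.3f} + {b:.3f} x")          # y_hat = 4.692 + 0.923 x
print(f"y_hat(8.5) = {a + b * 8.5:.2f} kg")    # y_hat(8.5) = 12.54 kg
```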

- 36. [Figure: regression line of Weight (in kg) against Age (in years).] We create a regression line by plotting two estimated values for y against their x components, then extending the line right and left.

- 37. Exercise 2: The following are the age (in years) and systolic blood pressure of 20 apparently healthy adults.

  | Age (x) | B.P (y) | Age (x) | B.P (y) |
  |---|---|---|---|
  | 20 | 120 | 46 | 128 |
  | 43 | 128 | 53 | 136 |
  | 63 | 141 | 60 | 146 |
  | 26 | 126 | 20 | 124 |
  | 53 | 134 | 63 | 143 |
  | 31 | 128 | 43 | 130 |
  | 58 | 136 | 26 | 124 |
  | 46 | 132 | 19 | 121 |
  | 58 | 140 | 31 | 126 |
  | 70 | 144 | 23 | 123 |

- 38. Find the correlation between age and blood pressure using the simple and Spearman's correlation coefficients, and comment. Find the regression equation. What is the predicted blood pressure for a man aged 25 years?
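
The slides do not work through the correlation part of this exercise, so the following is only a sketch of one way to compute both coefficients in plain Python. It assumes Spearman's coefficient is taken as the Pearson correlation of the ranks, with tied values sharing the average of their rank positions (the usual treatment of ties); the function names are illustrative.

```python
from math import sqrt


def pearson_r(x, y):
    """Simple (Pearson) correlation coefficient from raw sums."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    num = sum_xy - sum_x * sum_y / n
    den = sqrt((sum(a * a for a in x) - sum_x ** 2 / n)
               * (sum(b * b for b in y) - sum_y ** 2 / n))
    return num / den


def average_ranks(values):
    """Rank from 1 (smallest); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's coefficient as the Pearson correlation of the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


age = [20, 43, 63, 26, 53, 31, 58, 46, 58, 70,
       46, 53, 60, 20, 63, 43, 26, 19, 31, 23]
bp = [120, 128, 141, 126, 134, 128, 136, 132, 140, 144,
      128, 136, 146, 124, 143, 130, 124, 121, 126, 123]

print(f"Pearson r    = {pearson_r(age, bp):.3f}")
print(f"Spearman rho = {spearman_rho(age, bp):.3f}")
```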

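- 39. Working table (serials 1 to 10):

  | Serial | x | y | xy | x² |
  |---|---|---|---|---|
  | 1 | 20 | 120 | 2400 | 400 |
  | 2 | 43 | 128 | 5504 | 1849 |
  | 3 | 63 | 141 | 8883 | 3969 |
  | 4 | 26 | 126 | 3276 | 676 |
  | 5 | 53 | 134 | 7102 | 2809 |
  | 6 | 31 | 128 | 3968 | 961 |
  | 7 | 58 | 136 | 7888 | 3364 |
  | 8 | 46 | 132 | 6072 | 2116 |
  | 9 | 58 | 140 | 8120 | 3364 |
  | 10 | 70 | 144 | 10080 | 4900 |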

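- 40. Working table (serials 11 to 20, with totals over all 20):

  | Serial | x | y | xy | x² |
  |---|---|---|---|---|
  | 11 | 46 | 128 | 5888 | 2116 |
  | 12 | 53 | 136 | 7208 | 2809 |
  | 13 | 60 | 146 | 8760 | 3600 |
  | 14 | 20 | 124 | 2480 | 400 |
  | 15 | 63 | 143 | 9009 | 3969 |
  | 16 | 43 | 130 | 5590 | 1849 |
  | 17 | 26 | 124 | 3224 | 676 |
  | 18 | 19 | 121 | 2299 | 361 |
  | 19 | 31 | 126 | 3906 | 961 |
  | 20 | 23 | 123 | 2829 | 529 |
  | Total | 852 | 2630 | 114486 | 41678 |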

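- 41. Slope and regression equation:

  $$b = \frac{\sum xy - \dfrac{\sum x \sum y}{n}}{\sum x^2 - \dfrac{(\sum x)^2}{n}} = \frac{114486 - \dfrac{852 \times 2630}{20}}{41678 - \dfrac{852^2}{20}} = 0.4547$$

  $$\hat{y} = 112.13 + 0.4547x$$

  For age 25: B.P = 112.13 + 0.4547 × 25 = 123.49 ≈ 123.5 mmHg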
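
The arithmetic on this slide can be checked directly from the column totals in the working tables. The sketch below uses only those totals (n = 20, Σx = 852, Σy = 2630, Σxy = 114486, Σx² = 41678); the variable names are illustrative.

```python
n = 20
sum_x, sum_y = 852, 2630         # totals from the working tables
sum_xy, sum_x2 = 114486, 41678

# Slope and intercept of the least squares line y_hat = a + b * x
b = (sum_xy - sum_x * sum_y / n) / (sum_x2 - sum_x ** 2 / n)
a = sum_y / n - b * sum_x / n    # a = y_mean - b * x_mean

predicted_bp = a + b * 25        # predicted B.P at age 25

print(f"b = {b:.4f}, a = {a:.2f}")                      # b = 0.4548, a = 112.13
print(f"Predicted B.P at 25 = {predicted_bp:.1f} mmHg")  # 123.5 mmHg
```

At full precision the slope is about 0.4548; the slide's 0.4547 is the same value truncated to four decimal places.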

- 42. Multiple regression: Multiple regression analysis is a straightforward extension of simple regression analysis which allows more than one independent variable.