Understanding Kubernetes Scheduling - CNTUG 2024-10


A live demo for the meetup that demonstrates preemption using kubernetes-scheduler-simulator.


Recommended

Docker 101

Lâm Đào

This is an introduction to Docker, written in Vietnamese.

In this document:

- Introduction to Docker

- Docker network

- Demo scenario

Slideshow:

https://www.slideshare.net/ssuser9b325a/docker-101-144718472

Docker internals

Rohit Jnagal

This document discusses Docker internals and components. It covers:

1. Docker provides build once, configure once capabilities to deploy applications everywhere reliably, consistently, efficiently and cheaply.

2. Docker components include the Docker daemon, libcontainer, cgroups, namespaces, AUFS/BTRFS/dm-thinp, and the kernel-userspace interface.

3. Docker uses filesystem isolation through layering, copy-on-write, caching and differencing using union filesystems like AUFS to provide efficient sharing of files between containers.

ArgoCD 的雷 碰過的人就知道 @TSMC IT Community Meetup #4

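Johnny Sung

"A blank screen." "Hmm... why is it still a blank screen?" Still staring blankly at those cold YAML configuration files? This session walks you step by step through the deployment side of Kubernetes (K8s), explaining some basic K8s components, how to write Kustomize, and what to watch out for when setting up ArgoCD, so that you can avoid some detours. From the basics to day-to-day operations, we show step by step how to easily master the key techniques of Kubernetes deployment and management.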

#argocd #argo #kubernetes #k8s #deployment

Kubernetes and container security

Volodymyr Shynkar

Everyone has heard about Kubernetes. Everyone wants to use this tool. However, we sometimes forget about security, which is essential throughout the container lifecycle.

Therefore, our journey with Kubernetes security should begin in the build stage, when the code we write becomes a container image.

Kubernetes provides innate security advantages, and together with solid container protection, it will be invincible.

During the session, we will review all those features and highlight which are mandatory to use. We will discuss the main vulnerabilities that may compromise your system.

Contacts:

LinkedIn - https://www.linkedin.com/in/vshynkar/

GitHub - https://github.com/sqerison

Materials from the video:

Example policies and Dockerfiles:

https://gist.github.com/sqerison/43365e30ee62298d9757deeab7643a90

The repo with the Helm chart used in the demo:

https://github.com/sqerison/argo-rollouts-demo

Tools shown in the last section:

https://github.com/armosec/kubescape

https://github.com/aquasecurity/kube-bench

https://github.com/controlplaneio/kubectl-kubesec

https://github.com/Shopify/kubeaudit#installation

https://github.com/eldadru/ksniff

Further learning.

A book released by CISA (Cybersecurity and Infrastructure Security Agency):

https://media.defense.gov/2021/Aug/03/2002820425/-1/-1/1/CTR_KUBERNETES%20HARDENING%20GUIDANCE.PDF

O'Reilly Kubernetes Security:

https://kubernetes-security.info/

O'Reilly Container Security:

https://info.aquasec.com/container-security-book

Thanks for watching!

CD using ArgoCD(KnolX).pdf

Knoldus Inc.

ArgoCD is a continuous delivery and deployment tool based on GitOps principles. It helps automate deployment to a Kubernetes cluster from GitHub. We will look into how to adopt and use ArgoCD for continuous deployment.

Ansible presentation

Suresh Kumar

This document discusses repetitive system administration tasks and proposes Ansible as a solution. It describes how Ansible works using agentless SSH to automate tasks like software installation, configuration, and maintenance across multiple servers. Key aspects covered include Ansible's inventory, modules, playbooks, templates, variables, roles and Docker integration. Ansible Tower is also introduced as a GUI tool for running Ansible jobs. The document recommends Ansible for anyone doing the same tasks across multiple servers to gain efficiencies over manual processes.

Ansible

Vishal Yadav

Introduces Ansible as a DevOps favorite for configuration management and server provisioning, and enables the audience to get started with it. Ansible is developed in Python, but automating with it requires only knowledge of YAML syntax.

Kubernetes 101

Winton Winton

This document discusses microservices and containers, and how Kubernetes can be used for container orchestration. It begins with an overview of microservices and the benefits of breaking monolithic applications into independent microservices. It then discusses how containers can be used to package and deploy microservices, and introduces Docker as a container platform. Finally, it explains that as container usage grows, an orchestrator like Kubernetes is needed to manage multiple containers and microservices, and provides a high-level overview of Kubernetes' architecture and capabilities for scheduling, self-healing, scaling, and other management of containerized applications.

Hbase hivepig

Radha Krishna

This document discusses NoSQL and big data processing. It provides background on scaling relational databases and introduces key concepts like the CAP theorem. The CAP theorem states that a distributed data store can only provide two out of three guarantees around consistency, availability, and partition tolerance. Many NoSQL systems sacrifice consistency for availability and partition tolerance, adopting an eventual consistency model instead of ACID transactions.

Local Apache NiFi Processor Debug

Deon Huang

The document provides steps for debugging a local NiFi processor, including getting the NiFi source code from GitHub, setting up NiFi and an IDE, and launching the IDE in debug mode to trigger breakpoints when a processor starts. It recommends using a feature branch workflow and links to Apache NiFi contribution guides.

GitOps w/argocd

Jean-Philippe Bélanger

This document summarizes GitOps and the benefits of using GitOps for continuous delivery and deployment. It discusses how GitOps allows for simplified continuous delivery through using Git as a single source of truth, which can enhance productivity and experience while also increasing stability. Rollbacks are easy if issues arise by reverting commits in Git. Additional benefits include reliability, consistency, security, and auditability of changes. The document also provides an overview of ArgoCD, an open source GitOps tool for continuous delivery on Kubernetes, and its architecture.

Kubernetes (k8s).pdf

Jaouad Assabbour

In this course, you will learn the basics of Kubernetes and set up a small project using K8s.

Ansible presentation

John Lynch

This document provides an overview of Ansible, an open source tool for configuration management and application deployment. It discusses how Ansible aims to simplify infrastructure automation tasks through a model-driven approach without requiring developers to learn DevOps tools. Key points:

- Ansible uses YAML playbooks to declaratively define server configurations and deployments in an idempotent and scalable way.

- It provides ad-hoc command execution and setup facts gathering via SSH. Playbooks can target groups of servers to orchestrate complex multi-server tasks.

- Variables, templates, conditionals allow playbooks to customize configurations for different environments. Plugins support integration with cloud, monitoring, messaging tools.

- Ansible aims to reduce complexity compared

Join semantics in kafka streams

Knoldus Inc.

With the recent adoption of Confluent and Kafka Streams, organizations have experienced significantly improved system stability with a real-time processing framework, as well as improved scalability and lower maintenance costs.

The focus of this webinar is:

~Different join operators in Kafka Streams.

~Exploring different options in Kafka Streams to join semantics, both with and without shared keys.

~How to put Application Owner in control by leveraging simplified app-centric architecture.

If you have any queries, contact Himani over mail [email protected]

Introduction to GCP BigQuery and DataPrep

Paweł Mitruś

This document provides an overview and summary of Google Cloud Platform's BigQuery and DataPrep services. For BigQuery, it covers what it is used for, pricing models, how to load and query data, best practices, and limits. For DataPrep, it discusses what it is used for, pricing, how to wrangle and transform data using recipes and flows, performance optimization tips, and limits. Live demos are provided of loading, querying data in BigQuery and using transformations in DataPrep. The presenter's contact information is also included at the end.

Nick Fisk - low latency Ceph

ShapeBlue

This document discusses optimizing Ceph latency through hardware design. It finds that CPU frequency has a significant impact on latency, with higher frequencies resulting in lower latencies. Testing shows 4KB write latency of 2.4ms at 900MHz but 694us at higher frequencies. The document also discusses how CPU power states that wake slowly, like C6 at 85us, can negatively impact latency. Overall it advocates designing hardware with fast CPUs and avoiding slower cores or dual sockets to minimize latency in Ceph deployments.

Monitoring and Scaling Redis at DataDog - Ilan Rabinovitch, DataDog

Redis Labs

Think you have big data? What about high availability requirements? At DataDog we process billions of data points every day including metrics and events, as we help the world monitor their applications and infrastructure. Being the world’s monitoring system is a big responsibility, and thanks to Redis we are up to the task. Join us as we discuss how the DataDog team monitors and scales Redis to power our SaaS based monitoring offering. We will discuss our usage and deployment patterns, as well as dive into monitoring best practices for production Redis workloads.

Introduction of cloud native CI/CD on kubernetes

Kyohei Mizumoto

This document discusses setting up continuous integration and continuous delivery (CI/CD) pipelines on Kubernetes using Concourse CI and Argo CD. It provides an overview of each tool, instructions for getting started with Concourse using Helm and configuring sample pipelines in YAML, and instructions for installing and configuring applications in Argo CD through application manifests.

Git Branching Model

Harun Yardımcı

This document discusses Git branching models and conventions. It introduces common branches like master, release, production and hotfix branches. It explains how to create and switch branches. Feature branches are used for new development and hotfix branches are for urgent fixes. Commit messages should include the related Jira issue number. The branching model aims to separate development, release and production stages through distinct branches with clear naming conventions.

Bloat and Fragmentation in PostgreSQL

Masahiko Sawada

PostgreSQL uses MVCC which creates multiple versions of rows during updates and deletes. This leads to bloat and fragmentation over time as unused row versions accumulate. The VACUUM command performs garbage collection to recover space from dead rows. HOT updates and pruning help reduce bloat by avoiding index bloat during certain updates. Future improvements include parallel and eager vacuuming as well as pluggable storage engines like zheap to further reduce bloat.

Delivering Docker & K3s workloads to IoT Edge devices

Ajeet Singh Raina

About 94% of AI adopters are planning to use containers in the next year. What’s driving this exponential growth? Faster time to deployment and faster AI workload processing are the two major reasons. You can use GPUs in big data applications such as machine learning, data analytics, and genome sequencing. Docker containerization makes it easier for you to package and distribute applications. You can enable GPU support when using YARN on Docker containers. In this talk, I will demonstrate how Docker accelerates AI workload development and deployment on IoT Edge devices in an efficient manner.

Distributed Caching in Kubernetes with Hazelcast

Mesut Celik

As monolith-to-microservices migration has become almost mainstream, engineering teams have to think about how their caching strategies will evolve in a cloud-native world. Kubernetes is the clear winner in the containerized world, so caching solutions must be cloud-ready and a natural fit for Kubernetes.

Caching is an important piece of high-performance microservices, and choosing the right architectural pattern can be crucial for your deployments. Hazelcast is a well-known caching solution in the open source community and can handle the caching piece in microservices-based applications.

In this talk, you will learn

* Distributed Caching With Hazelcast

* Distributed Caching Patterns in Kubernetes

* Kubernetes Deployment Options and Best Practices

* How to Handle Distributed Caching Day 2 Operations

Gitlab, GitOps & ArgoCD

Haggai Philip Zagury

This document discusses improving the developer experience through GitOps and ArgoCD. It recommends building developer self-service tools for cloud resources and Kubernetes to reduce frustration. Example GitLab CI/CD pipelines are shown that handle releases, deployments to ECR, and patching apps in an ArgoCD repository to sync changes. The goal is to create faster feedback loops through Git operations and automation to motivate developers.

GitOps is the best modern practice for CD with Kubernetes

Volodymyr Shynkar

Evolution of infrastructure as code, a framework that can drastically improve deployment speed and development efficiency.

YouTube version: https://www.youtube.com/watch?v=z2kHFpCPum8

Building a Data Pipeline using Apache Airflow (on AWS / GCP)

Yohei Onishi

These are the slides I presented at PyCon SG 2019. I gave an overview of Airflow and showed how to use Airflow and other data engineering services on AWS and GCP to build data pipelines.

Zero downtime deployment of micro-services with Kubernetes

Wojciech Barczyński

A talk on deployment strategies with Kubernetes, covering Kubernetes configuration files and the actual implementation of your service in Golang and .NET Core.

You will find demos for recreate, rolling update, blue-green, and canary deployments.

The source and demos are on GitHub: https://github.com/wojciech12/talk_zero_downtime_deployment_with_kubernetes

Kubernetes Architecture and Introduction

Stefan Schimanski

- Archeology: before and without Kubernetes

- Deployment: kube-up, DCOS, GKE

- Core Architecture: the apiserver, the kubelet and the scheduler

- Compute Model: the pod, the service and the controller

Production Grade Kubernetes Applications

Narayanan Krishnamurthy

Just because your application is containerized, Kubernetized and cloudified doesn't mean it is production grade and disruption free. You will have to use all the features provided by Kubernetes to really make it production ready.

In these slides, I will try to explain the possible disruptions in your Kubernetes cluster that can impact your application or workloads. I will then try to explain the Kubernetes features and configuration that help make your application production grade.

Kubeadm Deep Dive (Kubecon Seattle 2018)

Liz Frost

A deep dive into kubeadm, the cluster setup and upgrade tool, presented at KubeCon 2018 by Liz Frost and Tim StClair.


Similar to Understanding Kubernetes Scheduling - CNTUG 2024-10 (20)


SCM Puppet: from an intro to the scaling

Stanislav Osipov

This short document discusses the Puppet configuration management tool. It begins with a quote about controlling the spice and universe from Dune and includes the author's name and date. The document then lists various Puppet features like scalability, flexibility, simple installation, and modules. It provides examples of large sites using Puppet like Google and describes Puppet's client-server and masterless models. In closing, it emphasizes Puppet's flexibility, community support, and over 1200 pre-built configurations.

Stop Worrying and Keep Querying, Using Automated Multi-Region Disaster Recovery

DoKC

Stop Worrying and Keep Querying, Using Automated Multi-Region Disaster Recovery - Shivani Gupta, Elotl & Sergey Pronin, Percona

Disaster Recovery (DR) is critical for business continuity in the face of widespread outages that take down entire data centers or cloud provider regions. DR relies on deployment to multiple locations, data replication, monitoring for failure, and failover. The process is typically manual, involves several moving parts, and, even in the best case, involves some downtime for end users. A multi-cluster K8s control plane presents the opportunity to automate the DR setup as well as failure detection and failover. Such automation can dramatically reduce RTO and improve availability for end users. This talk (and demo) describes one such setup using the open source Percona Operator for PostgreSQL and a multi-cluster K8s orchestrator. The orchestrator will use policy-driven placement to replicate the entire workload on multiple clusters (in different regions), detect failure using pluggable logic, and perform failover by promoting the standby as well as redirecting application traffic.

Container orchestration from theory to practice

Docker, Inc.

"Join Laura Frank and Stephen Day as they explain and examine technical concepts behind container orchestration systems, like distributed consensus, object models, and node topology. These concepts build the foundation of every modern orchestration system, and each technical explanation will be illustrated using SwarmKit and Kubernetes as a real-world example. Gain a deeper understanding of how orchestration systems work in practice and walk away with more insights into your production applications."

Cluster management with Kubernetes

Satnam Singh

On Friday 5 June 2015 I gave a talk called Cluster Management with Kubernetes to a general audience at the University of Edinburgh. The talk includes an example of a music store system with a Kibana front end UI and an Elasticsearch based back end which helps to make concrete concepts like pods, replication controllers and services.

[k8s] Kubernetes terminology (1).pdf

[k8s] Kubernetes terminology (1).pdfFrederik Wouters This document provides an overview of common Kubernetes concepts including clusters, namespaces, nodes, pods, services, deployments, horizontal pod autoscaling, ingress, persistent volume claims, configmaps, statefulsets, jobs, cronjobs, monitoring, and logging. It also discusses best practices for deploying applications on Kubernetes including using deployments instead of regular pods, validating Helm upgrades, and monitoring for CPU throttling issues.

stackconf 2020 | The path to a Serverless-native era with Kubernetes by Paolo...

stackconf 2020 | The path to a Serverless-native era with Kubernetes by Paolo...NETWAYS Serverless is one of the hottest design patterns in the cloud today, i’ll cover how the Serverless paradigms are changing the way we develop applications and the cloud infrastructures and how to implement Serveless-kind workloads with Kubernetes.

We’ll go through the latest Kubernetes-based serverless technologies, covering the most important aspects including pricing, scalability, observability and best practices

Capacity Planning Infrastructure for Web Applications (Drupal)

Capacity Planning Infrastructure for Web Applications (Drupal)Ricardo Amaro In this session we will try to solve a couple of recurring problems:

Site Launch and User expectations

Imagine a customer that provides a set of needs for hardware, sets a date and launches the site, but then he forgets to warn that they have sent out some (thousands of) emails to half the world announcing their new website launch! What do you think it will happen?

Of course launching a Drupal Site involves a lot of preparation steps and there are plenty of guides out there about common Drupal Launch Readiness Checklists which is not a problem anymore.

What we are really missing here is a Plan for Capacity.

Dr Elephant: LinkedIn's Self-Service System for Detecting and Treating Hadoop...

Dr Elephant: LinkedIn's Self-Service System for Detecting and Treating Hadoop...DataWorks Summit Dr. Elephant is a self-serve performance tuning tool for Hadoop that was created by LinkedIn to address the challenges their engineers faced in optimizing Hadoop performance. It automatically monitors completed Hadoop jobs to collect diagnostic information and identifies performance issues. It provides a dashboard and search interface for users to analyze job performance and get help tuning jobs. The goal is to help every user get the best performance without imposing a heavy time burden for learning or troubleshooting.

Cloud data center and openstack

Cloud data center and openstackAndrew Yongjoon Kong This document provides an overview of Kakaocorp's cloud and data center technologies and practices. It discusses Kakaocorp's use of OpenStack for its cloud computing platform, as well as its adoption of DevOps culture and tools like Chef configuration management. The document also describes Kakaocorp's centralized CMDB, monitoring, and deployment systems that form the control plane for its data center automation. Kakaocorp's integrated information service bus called KEMI is presented as well.

Characterizing and Contrasting Kuhn-tey-ner Awr-kuh-streyt-ors

Characterizing and Contrasting Kuhn-tey-ner Awr-kuh-streyt-orsSonatype Lee Calcote, Solar Winds

Running a few containers? No problem. Running hundreds or thousands? Enter the container orchestrator. Let’s take a look at the characteristics of the four most popular container orchestrators and what makes them alike, yet unique.

Swarm

Nomad

Kubernetes

Mesos+Marathon

We’ll take a structured looked at these container orchestrators, contrasting them across these categories:

Genesis & Purpose

Support & Momentum

Host & Service Discovery

Scheduling

Modularity & Extensibility

Updates & Maintenance

Health Monitoring

Networking & Load-Balancing

High Availability & Scale

20211202 North America DevOps Group NADOG Adapting to Covid With Serverless C...

20211202 North America DevOps Group NADOG Adapting to Covid With Serverless C...Craeg Strong This case study describes how we leveraged serverless technology and the AWS serverless application model (SAM) to support the needs of virtual training classes for a major US Federal agency. Our firm was excited to be selected as the main training partner to help a major US Federal government agency roll out Agile and DevOps processes across an organization comprising more than 1500 people. And then the pandemic hit—and what was to have been a series of in-person classes turned 100% virtual! We created a set of fully populated docker images containing all of the test data, plugins, and scenarios required for the student exercises. For our initial implementation, we simply pre-loaded our docker images into elastic beanstalk and then replicated them as many times as needed to provide the necessary number of instances for a given class. While this worked out fine at first, we found a number of shortcomings as we scaled up to more students and more classes. Eventually we came up with a much easier solution using serverless technology: we stood up a single page application that could kickoff tasks using AWS step functions to run docker images in elastic container service, all running under AWS Fargate. This application is a perfect fit for serverless technology and describing our evolution to serverless and SAM may help you gain insights into how these technologies may be beneficial in your situation.

20211202 NADOG Adapting to Covid with Serverless Craeg Strong Ariel Partners

20211202 NADOG Adapting to Covid with Serverless Craeg Strong Ariel PartnersCraeg Strong This case study describes how we leveraged serverless technology and the AWS serverless application model (SAM) to support the needs of virtual training classes for a major US Federal agency. Our firm was excited to be selected as the main training partner to help a major US Federal government agency roll out Agile and DevOps processes across an organization comprising more than 1500 people. And then the pandemic hit—and what was to have been a series of in-person classes turned 100% virtual! We created a set of fully populated docker images containing all of the test data, plugins, and scenarios required for the student exercises. For our initial implementation, we simply pre-loaded our docker images into elastic beanstalk and then replicated them as many times as needed to provide the necessary number of instances for a given class. While this worked out fine at first, we found a number of shortcomings as we scaled up to more students and more classes. Eventually we came up with a much easier solution using serverless technology: we stood up a single page application that could kickoff tasks using AWS step functions to run docker images in elastic container service, all running under AWS Fargate. This application is a perfect fit for serverless technology and describing our evolution to serverless and SAM may help you gain insights into how these technologies may be beneficial in your situation.

How Kubernetes scheduler works

How Kubernetes scheduler worksHimani Agrawal Defines what Kubernetes scheduler is and discuss how it works and then there is a demo on features of Kubenetes scheduler.

Optimization and fault tolerance in distributed transaction with Node.JS Grap...

Optimization and fault tolerance in distributed transaction with Node.JS Grap...Thien Ly As I wrap up my journey at Groove Technology, I had the opportunity to share insights and advancements in optimizing and ensuring fault tolerance in Node.js GraphQL servers. My presentation, held on June 27, 2024, encapsulated the hard work and dedication of the team, highlighting key strategies and implementations that have significantly enhanced server performance and reliability.

Kubernetes vs dockers swarm supporting onap oom on multi-cloud multi-stack en...

Kubernetes vs dockers swarm supporting onap oom on multi-cloud multi-stack en...Arthur Berezin Kubernetes vs Dockers Swarm supporting ONAP-OOM on multi-cloud multi-stack environment

Description: ONAP was set originally to support multiple container platform and cloud through TOSCA. In R1 ONAP and OOM is dependent completely on Kubernetes. As there are other container platforms such as Docker Swarm that are gaining more wider adoption as a simple alternative to Kubernetes. In addition operator may need the flexibility to choose their own container platform and be open for future platform. We need to weight the alternatives and avoid using package managers as Helm that makes K8s mandatory.

The use of TOSCA in conjunction with Kubernetes provides that "happy medium" where on one hand we can leverage Kubernetes to a full extent while at the same time be open to other alternative. In this workshop, we will compare Kubernetes with Docker Swarm and walk through an example of how ONAP can be set to support both platforms using TOSCA.

Build and Deploy Cloud Native Camel Quarkus routes with Tekton and Knative

Build and Deploy Cloud Native Camel Quarkus routes with Tekton and KnativeOmar Al-Safi In this talk, we will leverage all cloud native stacks and tools to build Camel Quarkus routes natively using GraalVM native-image on Tekton pipeline and deploy these routes to Kubernetes cluster with Knative installed. We will dive into the following topics in the talk: - Introduction to Camel - Introduction to Camel Quarkus - Introduction to GraalVM Native Image - Introduction to Tekon - Introduction to Knative - Demo shows how to deploy end to end a Camel Quarkus route which include the following steps: - Look at whole deployment pipeline for Cloud Native Camel Quarkus routes - Build Camel Quarkus routes with GraalVM native-image on Tekton pipeline. - Deploy Camel Quarkus routes to Kubernetes cluster with Knative Targeted Audience: Users with basic Camel knowledge

Salvatore Incandela, Fabio Marinelli - Using Spinnaker to Create a Developmen...

Salvatore Incandela, Fabio Marinelli - Using Spinnaker to Create a Developmen...Codemotion Out of the box Kubernetes is an Operations platform which is great for flexibility but creates friction for deploying simple applications. Along comes Spinnaker which allows you to easily create custom workflows for testing, building, and deploying your application on Kubernetes. Salvatore Incandela and Fabio Marinelli will give an introduction to Containers and Kubernetes and the default development/deployment workflows that it enables. They will then show you how you can use Spinnaker to simplify and streamline your workflow and help provide a full #gitops style CI/CD.

Cloud Composer workshop at Airflow Summit 2023.pdf

Cloud Composer workshop at Airflow Summit 2023.pdfLeah Cole Cloud Composer workshop agenda includes:

- Introductions from engineering managers and staff

- Setting up workshop projects and GCP credits for participants

- Introduction to Cloud Composer architecture and features

- Disaster recovery process using Cloud Composer snapshots for high availability

- Demonstrating data lineage capabilities between Cloud Composer, BigQuery and Dataproc

Ad

Recently uploaded (20)

Lidar for Autonomous Driving, LiDAR Mapping for Driverless Cars.pptx

Lidar for Autonomous Driving, LiDAR Mapping for Driverless Cars.pptxRishavKumar530754 LiDAR-Based System for Autonomous Cars

Autonomous Driving with LiDAR Tech

LiDAR Integration in Self-Driving Cars

Self-Driving Vehicles Using LiDAR

LiDAR Mapping for Driverless Cars

Raish Khanji GTU 8th sem Internship Report.pdf

Raish Khanji GTU 8th sem Internship Report.pdfRaishKhanji This report details the practical experiences gained during an internship at Indo German Tool

Room, Ahmedabad. The internship provided hands-on training in various manufacturing technologies, encompassing both conventional and advanced techniques. Significant emphasis was placed on machining processes, including operation and fundamental

understanding of lathe and milling machines. Furthermore, the internship incorporated

modern welding technology, notably through the application of an Augmented Reality (AR)

simulator, offering a safe and effective environment for skill development. Exposure to

industrial automation was achieved through practical exercises in Programmable Logic Controllers (PLCs) using Siemens TIA software and direct operation of industrial robots

utilizing teach pendants. The principles and practical aspects of Computer Numerical Control

(CNC) technology were also explored. Complementing these manufacturing processes, the

internship included extensive application of SolidWorks software for design and modeling tasks. This comprehensive practical training has provided a foundational understanding of

key aspects of modern manufacturing and design, enhancing the technical proficiency and readiness for future engineering endeavors.

Structural Response of Reinforced Self-Compacting Concrete Deep Beam Using Fi...

Structural Response of Reinforced Self-Compacting Concrete Deep Beam Using Fi...Journal of Soft Computing in Civil Engineering Analysis of reinforced concrete deep beam is based on simplified approximate method due to the complexity of the exact analysis. The complexity is due to a number of parameters affecting its response. To evaluate some of this parameters, finite element study of the structural behavior of the reinforced self-compacting concrete deep beam was carried out using Abaqus finite element modeling tool. The model was validated against experimental data from the literature. The parametric effects of varied concrete compressive strength, vertical web reinforcement ratio and horizontal web reinforcement ratio on the beam were tested on eight (8) different specimens under four points loads. The results of the validation work showed good agreement with the experimental studies. The parametric study revealed that the concrete compressive strength most significantly influenced the specimens’ response with the average of 41.1% and 49 % increment in the diagonal cracking and ultimate load respectively due to doubling of concrete compressive strength. Although the increase in horizontal web reinforcement ratio from 0.31 % to 0.63 % lead to average of 6.24 % increment on the diagonal cracking load, it does not influence the ultimate strength and the load-deflection response of the beams. Similar variation in vertical web reinforcement ratio leads to an average of 2.4 % and 15 % increment in cracking and ultimate load respectively with no appreciable effect on the load-deflection response.

IntroSlides-April-BuildWithAI-VertexAI.pdf

IntroSlides-April-BuildWithAI-VertexAI.pdfLuiz Carneiro ☁️ GDG Cloud Munich: Build With AI Workshop - Introduction to Vertex AI! ☁️

Join us for an exciting #BuildWithAi workshop on the 28th of April, 2025 at the Google Office in Munich!

Dive into the world of AI with our "Introduction to Vertex AI" session, presented by Google Cloud expert Randy Gupta.

AI-assisted Software Testing (3-hours tutorial)

AI-assisted Software Testing (3-hours tutorial)Vəhid Gəruslu Invited tutorial at the Istanbul Software Testing Conference (ISTC) 2025 https://iststc.com/

15th International Conference on Computer Science, Engineering and Applicatio...

15th International Conference on Computer Science, Engineering and Applicatio...IJCSES Journal #computerscience #programming #coding #technology #programmer #python #computer #developer #tech #coder #javascript #java #codinglife #html #code #softwaredeveloper #webdeveloper #software #cybersecurity #linux #computerengineering #webdevelopment #softwareengineer #machinelearning #hacking #engineering #datascience #css #programmers #pythonprogramming

Development of MLR, ANN and ANFIS Models for Estimation of PCUs at Different ...

Development of MLR, ANN and ANFIS Models for Estimation of PCUs at Different ...Journal of Soft Computing in Civil Engineering Passenger car unit (PCU) of a vehicle type depends on vehicular characteristics, stream characteristics, roadway characteristics, environmental factors, climate conditions and control conditions. Keeping in view various factors affecting PCU, a model was developed taking a volume to capacity ratio and percentage share of particular vehicle type as independent parameters. A microscopic traffic simulation model VISSIM has been used in present study for generating traffic flow data which some time very difficult to obtain from field survey. A comparison study was carried out with the purpose of verifying when the adaptive neuro-fuzzy inference system (ANFIS), artificial neural network (ANN) and multiple linear regression (MLR) models are appropriate for prediction of PCUs of different vehicle types. From the results observed that ANFIS model estimates were closer to the corresponding simulated PCU values compared to MLR and ANN models. It is concluded that the ANFIS model showed greater potential in predicting PCUs from v/c ratio and proportional share for all type of vehicles whereas MLR and ANN models did not perform well.

Compiler Design_Lexical Analysis phase.pptx

Compiler Design_Lexical Analysis phase.pptxRushaliDeshmukh2 The role of the lexical analyzer

Specification of tokens

Finite state machines

From a regular expressions to an NFA

Convert NFA to DFA

Transforming grammars and regular expressions

Transforming automata to grammars

Language for specifying lexical analyzers

DATA-DRIVEN SHOULDER INVERSE KINEMATICS YoungBeom Kim1 , Byung-Ha Park1 , Kwa...

DATA-DRIVEN SHOULDER INVERSE KINEMATICS YoungBeom Kim1 , Byung-Ha Park1 , Kwa...charlesdick1345 This paper proposes a shoulder inverse kinematics (IK) technique. Shoulder complex is comprised of the sternum, clavicle, ribs, scapula, humerus, and four joints.

Artificial Intelligence (AI) basics.pptx

Artificial Intelligence (AI) basics.pptxaditichinar its all about Artificial Intelligence(Ai) and Machine Learning and not on advanced level you can study before the exam or can check for some information on Ai for project

Compiler Design Unit1 PPT Phases of Compiler.pptx

Compiler Design Unit1 PPT Phases of Compiler.pptxRushaliDeshmukh2 Compiler phases

Lexical analysis

Syntax analysis

Semantic analysis

Intermediate (machine-independent) code generation

Intermediate code optimization

Target (machine-dependent) code generation

Target code optimization

railway wheels, descaling after reheating and before forging

railway wheels, descaling after reheating and before forgingJavad Kadkhodapour railway wheels, descaling after reheating and before forging

Introduction to FLUID MECHANICS & KINEMATICS

Introduction to FLUID MECHANICS & KINEMATICSnarayanaswamygdas Fluid mechanics is the branch of physics concerned with the mechanics of fluids (liquids, gases, and plasmas) and the forces on them. Originally applied to water (hydromechanics), it found applications in a wide range of disciplines, including mechanical, aerospace, civil, chemical, and biomedical engineering, as well as geophysics, oceanography, meteorology, astrophysics, and biology.

It can be divided into fluid statics, the study of various fluids at rest, and fluid dynamics.

Fluid statics, also known as hydrostatics, is the study of fluids at rest, specifically when there's no relative motion between fluid particles. It focuses on the conditions under which fluids are in stable equilibrium and doesn't involve fluid motion.

Fluid kinematics is the branch of fluid mechanics that focuses on describing and analyzing the motion of fluids, such as liquids and gases, without considering the forces that cause the motion. It deals with the geometrical and temporal aspects of fluid flow, including velocity and acceleration. Fluid dynamics, on the other hand, considers the forces acting on the fluid.

Fluid dynamics is the study of the effect of forces on fluid motion. It is a branch of continuum mechanics, a subject which models matter without using the information that it is made out of atoms; that is, it models matter from a macroscopic viewpoint rather than from microscopic.

Fluid mechanics, especially fluid dynamics, is an active field of research, typically mathematically complex. Many problems are partly or wholly unsolved and are best addressed by numerical methods, typically using computers. A modern discipline, called computational fluid dynamics (CFD), is devoted to this approach. Particle image velocimetry, an experimental method for visualizing and analyzing fluid flow, also takes advantage of the highly visual nature of fluid flow.

Fundamentally, every fluid mechanical system is assumed to obey the basic laws :

Conservation of mass

Conservation of energy

Conservation of momentum

The continuum assumption

For example, the assumption that mass is conserved means that for any fixed control volume (for example, a spherical volume)—enclosed by a control surface—the rate of change of the mass contained in that volume is equal to the rate at which mass is passing through the surface from outside to inside, minus the rate at which mass is passing from inside to outside. This can be expressed as an equation in integral form over the control volume.

The continuum assumption is an idealization of continuum mechanics under which fluids can be treated as continuous, even though, on a microscopic scale, they are composed of molecules. Under the continuum assumption, macroscopic (observed/measurable) properties such as density, pressure, temperature, and bulk velocity are taken to be well-defined at "infinitesimal" volume elements—small in comparison to the characteristic length scale of the system, but large in comparison to molecular length scale

Process Parameter Optimization for Minimizing Springback in Cold Drawing Proc...

Process Parameter Optimization for Minimizing Springback in Cold Drawing Proc...Journal of Soft Computing in Civil Engineering In tube drawing process, a tube is pulled out through a die and a plug to reduce its diameter and thickness as per the requirement. Dimensional accuracy of cold drawn tubes plays a vital role in the further quality of end products and controlling rejection in manufacturing processes of these end products. Springback phenomenon is the elastic strain recovery after removal of forming loads, causes geometrical inaccuracies in drawn tubes. Further, this leads to difficulty in achieving close dimensional tolerances. In the present work springback of EN 8 D tube material is studied for various cold drawing parameters. The process parameters in this work include die semi-angle, land width and drawing speed. The experimentation is done using Taguchi’s L36 orthogonal array, and then optimization is done in data analysis software Minitab 17. The results of ANOVA shows that 15 degrees die semi-angle,5 mm land width and 6 m/min drawing speed yields least springback. Furthermore, optimization algorithms named Particle Swarm Optimization (PSO), Simulated Annealing (SA) and Genetic Algorithm (GA) are applied which shows that 15 degrees die semi-angle, 10 mm land width and 8 m/min drawing speed results in minimal springback with almost 10.5 % improvement. Finally, the results of experimentation are validated with Finite Element Analysis technique using ANSYS.

Structural Response of Reinforced Self-Compacting Concrete Deep Beam Using Fi...

Structural Response of Reinforced Self-Compacting Concrete Deep Beam Using Fi...Journal of Soft Computing in Civil Engineering

Development of MLR, ANN and ANFIS Models for Estimation of PCUs at Different ...

Development of MLR, ANN and ANFIS Models for Estimation of PCUs at Different ...Journal of Soft Computing in Civil Engineering

Process Parameter Optimization for Minimizing Springback in Cold Drawing Proc...

Process Parameter Optimization for Minimizing Springback in Cold Drawing Proc...Journal of Soft Computing in Civil Engineering

Ad

Understanding Kubernetes Scheduling - CNTUG 2024-10

- 3. Agenda. kube-scheduler: What is the role of kube-scheduler? Scheduling Framework: What is the process in kube-scheduler? kube-scheduler-simulator: What is the result of our scheduling strategy?

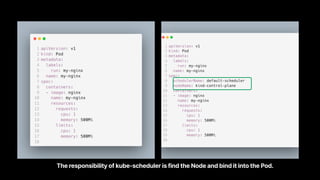

- 5. Control Plane. API-server: The central interface that manages and exposes the cluster’s data. etcd: A distributed, reliable key-value store that provides persistent storage for cluster state. kube-controller-manager: Runs control loops to maintain the desired state of cluster components. kube-scheduler: Decides which node each pod is deployed to.

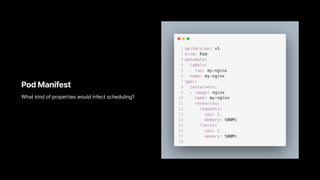

- 6. Pod Manifest: Which properties affect scheduling?

- 7. The responsibility of kube-scheduler is to find a Node and bind the Pod to it.

- 8. “How does Kubernetes schedule workloads evenly across nodes in a cluster?” Adam · No Where

- 9. Scheduling: Balanced Scheduling vs. Imbalanced Scheduling

- 10. Pod Manifest: Which properties affect scheduling?

- 11. containers.resources.requests: The Node must have enough allocatable resources to satisfy the requests.

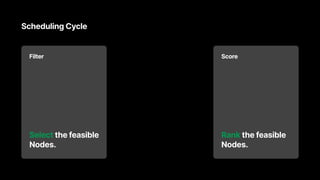

- 13. Scheduling Cycle. Filter: Select the feasible Nodes. Score: Rank the feasible Nodes.

- 14. containers.resources.requests: The Node must have enough allocatable resources to satisfy the requests.

- 15. NodeResourcesFit in Filter and Score. Filter: Select nodes with sufficient resources for the pod. Score: Rank the feasible nodes based on resource availability.

- 16. NodeResourcesFit in Filter and Score. Filter: Select nodes with sufficient resources for the pod. Score: Rank the feasible nodes based on resource availability.
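
The Filter and Score steps above can be sketched in a few lines of Python. This is a simplified illustration, not the kube-scheduler implementation: the `Node` class, the CPU-only accounting, and the scoring formula (more free CPU after placement gives a higher score, in the spirit of a least-allocated strategy) are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical, simplified node model: CPU only, in millicores."""
    name: str
    allocatable_cpu: int
    requested_cpu: int  # CPU already requested by pods on this node


def filter_nodes(nodes, pod_cpu_request):
    """Filter: keep only nodes whose free CPU covers the pod's request."""
    return [n for n in nodes
            if n.allocatable_cpu - n.requested_cpu >= pod_cpu_request]


def score_node(node, pod_cpu_request):
    """Score (0..100): higher when more CPU stays free after placing the pod."""
    free_after = node.allocatable_cpu - node.requested_cpu - pod_cpu_request
    return free_after * 100 // node.allocatable_cpu


def schedule(nodes, pod_cpu_request):
    """Run Filter, then Score, and return the best node name (or None)."""
    feasible = filter_nodes(nodes, pod_cpu_request)
    if not feasible:
        return None  # the pod would stay Pending
    return max(feasible, key=lambda n: score_node(n, pod_cpu_request)).name


nodes = [
    Node("node-a", allocatable_cpu=4000, requested_cpu=3500),
    Node("node-b", allocatable_cpu=4000, requested_cpu=1000),
    Node("node-c", allocatable_cpu=4000, requested_cpu=2000),
]
chosen = schedule(nodes, pod_cpu_request=1000)
print(chosen)
```

With a 1000m request, `node-a` is filtered out (only 500m free) and `node-b` wins the ranking because it keeps the most CPU free.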

- 17. Cloud providers charge for cross-zone traffic: Traffic between different zones is billed.

- 18. “The primary database is deployed in Zone A. In Kubernetes, how do we schedule the workload to Zone A?” Adam · No Where

- 19. “NodeSelector!” Adam · No Where

- 20. NodeSelector: Kubernetes MUST schedule the workload into Zone A.

- 22. Zone A outage (diagram: Zone A, Zone B, Zone C)

- 23. Schedule with NodeSelector. Filter: Select the feasible Nodes that are in Zone A. Score: Rank the feasible Nodes.

- 24. Failover. When everything works fine: Schedule the workload into Zone A. When something goes wrong: Schedule the workload into Zone B. (Diagram: Zone A, Zone B, Zone C.)

- 25. NodeAffinity: Kubernetes PREFERS to schedule the workload into Zone A.

- 26. Schedule with NodeAffinity. Filter: Select the feasible Nodes. Score: Rank the feasible Nodes, prefer Zone A, but Zone B is fine.

- 27. Schedule with NodeAffinity. Filter: Select the feasible Nodes in Zone A. Score: Rank the feasible Nodes, prefer Zone A.
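
The difference between a hard NodeSelector (applied in Filter) and a preferred NodeAffinity (applied in Score) during a Zone A outage can be illustrated as follows. This is a minimal sketch under assumptions: nodes are plain dictionaries, the `ready` field is a hypothetical stand-in for a node being lost in the outage, and the preference weight of 100 is arbitrary. Only the label key `topology.kubernetes.io/zone` is the well-known Kubernetes zone label.

```python
ZONE_LABEL = "topology.kubernetes.io/zone"


def make_nodes(zone_a_up=True):
    """Hypothetical three-zone cluster; 'ready' models the Zone A outage."""
    return [
        {"name": "a1", "labels": {ZONE_LABEL: "zone-a"}, "ready": zone_a_up},
        {"name": "b1", "labels": {ZONE_LABEL: "zone-b"}, "ready": True},
        {"name": "c1", "labels": {ZONE_LABEL: "zone-c"}, "ready": True},
    ]


def filter_nodes(nodes, node_selector):
    """Filter: nodeSelector is a hard requirement, every label must match."""
    return [n for n in nodes
            if n["ready"]
            and all(n["labels"].get(k) == v for k, v in node_selector.items())]


def score_node(node, preferred_zone, weight=100):
    """Score: a preferred affinity only adds points, it never excludes a node."""
    return weight if node["labels"].get(ZONE_LABEL) == preferred_zone else 0


def pick(nodes, node_selector=None, preferred_zone=None):
    feasible = filter_nodes(nodes, node_selector or {})
    if not feasible:
        return None  # the pod would stay Pending
    return max(feasible, key=lambda n: score_node(n, preferred_zone))["name"]


selector = {ZONE_LABEL: "zone-a"}
print(pick(make_nodes(), node_selector=selector))                 # healthy, hard rule
print(pick(make_nodes(zone_a_up=False), node_selector=selector))  # outage, hard rule
print(pick(make_nodes(), preferred_zone="zone-a"))                # healthy, preference
print(pick(make_nodes(zone_a_up=False), preferred_zone="zone-a")) # outage, preference
```

With the hard selector, the outage leaves no feasible node. With the preference, the workload fails over to another zone because Zone A only influenced the ranking.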

- 28. Happy Disaster: A product goes viral and the load on the workload increases rapidly.

- 29. Problem: Even if we deploy the HPA and Cluster Autoscaler, a newly scaled-out node might take minutes to join the cluster.

- 30. “How can we scale the workload ASAP?” Adam · No Where

- 32. Over-provisioning. Proactive Node on standby: Schedule low-priority Pods on the Proactive Node. Proactive Node in service: High-priority Pods preempt the low-priority Pods on the Proactive Node. (Diagram: Node A, Node B, Node C; High Priority Pod, Low Priority Pod.)

- 33. Scheduling Cycle. Filter: Select the feasible Nodes. Score: Rank the feasible Nodes.

- 34. Scheduling Cycle. Filter: Select the feasible Nodes. PostFilter (only if there are no feasible Nodes): Preemption runs here. Score: Rank the feasible Nodes.
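
The role of PostFilter can be sketched as a fallback that only runs when Filter returns nothing. The code below is a toy model under assumptions: the `Pod` and `Node` classes are hypothetical, resources are CPU units only, and the pod is placed immediately after eviction, whereas the real scheduler nominates a node and the pod goes through scheduling again.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    priority: int
    cpu: int


@dataclass
class Node:
    name: str
    capacity: int
    pods: list = field(default_factory=list)

    def free(self):
        return self.capacity - sum(p.cpu for p in self.pods)


def schedule_with_preemption(nodes, pod):
    """Return (node name or None, names of evicted pods)."""
    # Filter: nodes that can host the pod without evicting anything.
    feasible = [n for n in nodes if n.free() >= pod.cpu]
    if feasible:
        # Score: simply prefer the node with the most free CPU.
        target = max(feasible, key=lambda n: n.free())
        target.pods.append(pod)
        return target.name, []

    # PostFilter: no feasible node, so look for lower-priority victims.
    for node in nodes:
        victims, freed = [], node.free()
        lower = sorted((p for p in node.pods if p.priority < pod.priority),
                       key=lambda p: p.priority)
        for candidate in lower:
            if freed >= pod.cpu:
                break
            victims.append(candidate)
            freed += candidate.cpu
        if freed >= pod.cpu:
            node.pods = [p for p in node.pods if p not in victims]
            node.pods.append(pod)
            return node.name, [v.name for v in victims]
    return None, []


nodes = [
    Node("node-a", 2, [Pod("backend-1", 1000, 1), Pod("backend-2", 1000, 1)]),
    Node("node-b", 2, [Pod("lpp-1", -1, 1), Pod("lpp-2", -1, 1)]),
]
high = schedule_with_preemption(nodes, Pod("backend-3", 1000, 1))
low = schedule_with_preemption(nodes, Pod("lpp-3", -1, 1))
print(high, low)
```

The high-priority pod evicts one low-priority pod on `node-b`, while the extra low-priority pod finds no victim and stays unscheduled.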

- 36. “Sounds wonderful, but how to test it?” Adam · No Where

- 37. Three problems with testing scheduling. Cost: Building a test environment for a scheduling strategy can be expensive. Test at Large Scale: A private cloud might not have enough machines, and even in a public cloud it takes time to build a large cluster. Troubleshooting: Scheduling needs to know about all the nodes in the cluster, so a complicated scheduling strategy is hard to test.

- 39. Three problems with testing scheduling. Cost: kss (kube-scheduler-simulator) can test scheduling without real resources. Test at Large Scale: kss makes it easy to test scheduling at large scale. Troubleshooting: kss provides a debuggable scheduler that logs the result of every stage of the Scheduling Framework.

- 40. Demo: Using kube-scheduler-simulator to verify the over-provisioning pattern. (1) Scale out Nodes → 10. (2) Deploy Backend × 40. (3) Deploy low-priority-class and low-priority-pod (LPP) × 12. (4) Observe that the LPPs are Pending. (5) Simulate the behavior of Cluster Autoscaler: scale out Nodes → 13. (6) Observe the LPPs; they should be deployed to the Proactive Nodes. (7) Simulate the behavior of HPA: scale out Backend 40 → 52. (8) Observe Backend preempting the LPPs.
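
The numbers in the demo can be checked with a small slot-counting model. This is not kube-scheduler-simulator itself, just arithmetic under a loud assumption that the talk does not state: every pod has the same size and each node fits exactly 4 pods (`PODS_PER_NODE`). The `Cluster` class is hypothetical.

```python
PODS_PER_NODE = 4  # assumption, chosen so that 10 nodes hold exactly 40 Backend pods


class Cluster:
    """Toy model that only counts pod slots, with two priority levels."""

    def __init__(self):
        self.nodes = 0
        self.backend = 0       # running high-priority Backend pods
        self.lpp = 0           # running low-priority pods (LPP)
        self.lpp_pending = 0   # LPPs waiting for a free slot

    def free_slots(self):
        return self.nodes * PODS_PER_NODE - self.backend - self.lpp

    def _place_pending(self):
        placed = min(self.lpp_pending, self.free_slots())
        self.lpp += placed
        self.lpp_pending -= placed

    def add_nodes(self, count):
        self.nodes += count
        self._place_pending()

    def deploy_lpp(self, count):
        self.lpp_pending += count
        self._place_pending()

    def deploy_backend(self, count):
        for _ in range(count):
            if self.free_slots() > 0:
                self.backend += 1
            elif self.lpp > 0:
                # Preemption: evict one LPP, which goes back to Pending.
                self.lpp -= 1
                self.lpp_pending += 1
                self.backend += 1
            else:
                raise RuntimeError("Backend pod would stay Pending")

    def state(self):
        return (self.nodes, self.backend, self.lpp, self.lpp_pending)


c = Cluster()
c.add_nodes(10)
c.deploy_backend(40)
c.deploy_lpp(12)
after_lpp = c.state()        # LPPs are Pending: the cluster is full
c.add_nodes(3)               # simulated Cluster Autoscaler: 10 -> 13 nodes
after_scale_out = c.state()  # LPPs land on the new Proactive Nodes
c.deploy_backend(12)         # simulated HPA: Backend 40 -> 52
after_hpa = c.state()        # Backend preempts the LPPs
print(after_lpp, after_scale_out, after_hpa)
```

Each state tuple is `(nodes, backend, lpp running, lpp pending)`, so the three snapshots correspond to steps 4, 6, and 8 of the demo.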

- 41. “I heard every stage in the Scheduling Framework is an extension point. How can we extend it?” Adam · No Where

- 43. Ways to extend the scheduler. Scheduler Profile: Use this if the default scheduler fits your needs but you want some customization, for example changing the parameters of a plugin or giving a specific plugin a higher weight. Scheduler Extender: Poor performance and poor error handling. Plugins: You need to build your own scheduler with the custom plugins.

- 44. Demo: Using kube-scheduler-simulator to verify the custom plugin. (1) Enable the custom plugin in KubeSchedulerConfiguration. (2) Deploy a new Pod. (3) Verify the score of the custom plugin.

- 45. Trends around the scheduler. Descheduler: Enforce the scheduling policy after deployment; evict pods before resource usage hits the limits. Batch Jobs for ML: Batch jobs for ML differ from normal workloads; see Kueue and QueueHint. kube-scheduler-wasm-extension: Extend kube-scheduler with WASM.

- 46. Takeaway: Understanding scheduling gives us more options for action.

- 47. Bonus

- 48. Three Common Misconceptions. First: pod.containers.resources.requests only matters during scheduling. Second: Usage above pod.containers.resources.limits won’t cause any problem. Third: Only memory can cause OOMKilled.

- 49. Three Common Misconceptions. First: pod.containers.resources.requests only matters during scheduling. Second: Usage above pod.containers.resources.limits won’t cause any problem. Third: Only memory can cause OOMKilled. In reality: requests.cpu also affects the cgroup. limits.cpu causes CPU throttling. Because of CPU throttling, the GC thread might not finish garbage collection, which might trigger OOMKilled.
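
How CPU requests and limits reach the cgroup can be sketched with a little arithmetic. The conversion below follows the commonly documented cgroup v1 mapping (requests to `cpu.shares`, limits to a CFS quota over a 100 ms period) as I understand it; treat the exact constants and minimums as assumptions, and note that cgroup v2 uses different file names and scales. The function names are hypothetical.

```python
def cpu_shares(request_millicores):
    """requests.cpu -> cpu.shares: 1000m maps to 1024 shares (minimum 2)."""
    return max(2, request_millicores * 1024 // 1000)


def cfs_quota_us(limit_millicores, period_us=100_000):
    """limits.cpu -> CPU time allowed per period, in microseconds."""
    return max(1000, limit_millicores * period_us // 1000)


def throttled_us(wanted_us, limit_millicores, period_us=100_000):
    """CPU time a container wanted in one period but could not get."""
    return max(0, wanted_us - cfs_quota_us(limit_millicores, period_us))


shares = cpu_shares(250)           # relative weight when CPU is contended
quota = cfs_quota_us(500)          # 500m limit: half of each 100 ms period
lost = throttled_us(80_000, 500)   # a burst that wants 80 ms in one period
print(shares, quota, lost)
```

In this example a container limited to 500m that needs 80 ms of CPU within one period loses 30 ms to throttling, which is the kind of delay that can keep a GC thread from finishing in time.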
