
Unit2 CPU Scheduling 24252 (sssssss1).ppt

- 1. Unit 2: CPU/ Process Scheduling

- 2. Relate!!

- 3. Basic Concept Imagine you're at a busy cafe: orders must be managed efficiently so that every customer is served promptly. Similarly, modern operating systems rely on CPU scheduling to order task execution and use resources efficiently. The scheduler decides which task runs on the CPU next, balancing performance, fairness and responsiveness.

- 4. Basic Concept CPU scheduling is the basis of multiprogrammed operating systems. Objective of multiprogramming: to have some process running at all times, so as to maximize CPU utilization.

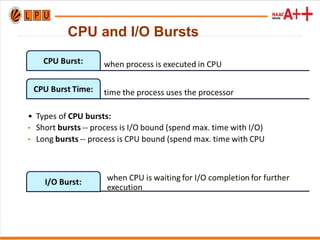

- 5. CPU and I/O Bursts

- 6. Sequence of CPU and I/O Bursts

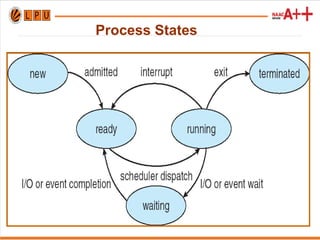

- 7. CPU Scheduling Decisions CPU scheduling decisions may take place:
1. When a process switches from the running state to the waiting state (I/O request or wait)
2. When a process switches from the running state to the ready state (interrupt)
3. When a process switches from the waiting state to the ready state (completion of I/O)
4. When a process terminates

Scheduling that takes place only under circumstances 1 and 4 is non-preemptive; scheduling under circumstances 2 and 3 is preemptive.

- 10. Non-Preemptive Scheduling Once the CPU is allocated to a process, the process keeps the CPU until it either terminates or switches to the waiting state. Example systems: Windows 3.x and Apple Macintosh systems before Mac OS X. Example algorithm: First-Come, First-Served (FIFO) scheduling.

- 11. Preemptive Scheduling The CPU can be taken away from the running process, for example when a high-priority process needs immediate execution. The interrupted process is paused and resumes once the higher-priority task finishes, so lower-priority processes wait for their turn. This ensures that critical tasks are completed promptly.

- 12. Dispatcher The dispatcher is the module that gives control of the CPU to the process selected by the scheduler. Its functions are switching context, switching to user mode, and jumping to the proper location in the user program to restart that program. The dispatcher is invoked during every process switch, so it should be as fast as possible. Dispatch latency is the time the dispatcher takes to stop one process and start another running.

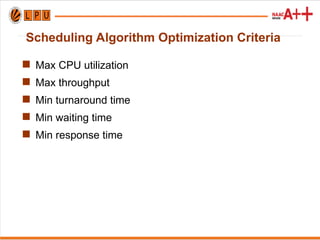

- 13. Scheduling Criteria Criteria used to compare scheduling algorithms and choose one for a particular situation: 1. CPU Utilization: keep the CPU as busy as possible. 2. Throughput: number of processes completed per unit of time. 3. Turnaround Time: the interval from the submission of a process to its completion, i.e. the total time taken to execute that process. Turnaround Time = time spent waiting to get into memory + waiting in the ready queue + doing I/O + executing on the CPU.

- 14. Scheduling Criteria 4. Waiting Time: the total time a process spends waiting in the ready queue to acquire the CPU. 5. Response Time: the time from the submission of a request until the first response is produced, not until the output is complete. 6. Load Average: the average number of processes residing in the ready queue, waiting for their turn on the CPU.

- 15. Scheduling Algorithm Optimization Criteria • Maximize CPU utilization • Maximize throughput • Minimize turnaround time • Minimize waiting time • Minimize response time

- 16. Formula Turnaround Time (TAT) = Completion Time (CT) - Arrival Time (AT). Waiting Time (WT) = Turnaround Time - Burst Time (BT). Equivalently, Turnaround Time = Burst Time + Waiting Time.
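
The two formulas can be checked with a tiny sketch. The function names and the process values (AT = 2, BT = 3, CT = 9) are hypothetical, chosen only to illustrate the arithmetic.

```python
def turnaround_time(ct, at):
    # Turnaround time = completion time - arrival time
    return ct - at

def waiting_time(ct, at, bt):
    # Waiting time = turnaround time - burst time
    return turnaround_time(ct, at) - bt

# Hypothetical process: arrives at t = 2, needs 3 units of CPU, finishes at t = 9
at, bt, ct = 2, 3, 9
tat = turnaround_time(ct, at)
wt = waiting_time(ct, at, bt)
print(tat, wt)          # 7 4
assert tat == bt + wt   # equivalent form: TAT = BT + WT
```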

- 17. First-Come, First-Served (FCFS) The process that requests the CPU first is allocated the CPU first. FCFS is a non-preemptive scheduling algorithm and is implemented with a FIFO queue: processes are allocated the CPU in order of their arrival times.

- 18. First-Come, First-Served (FCFS) When a process enters the ready queue, its PCB is linked to the tail of the queue. When the CPU is free, it is allocated to the process at the head (front) of the queue. FCFS can lead to the convoy effect: all the other processes wait for one long process to release the CPU.

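- 19. First-Come, First-Served (Example) Consider three processes arriving in the order P1, P2, P3, with burst times P1 = 24, P2 = 3 and P3 = 3. Draw the Gantt chart and compute the average waiting time and average turnaround time. Sol (assuming all three arrive at time 0): Gantt chart P1 (0-24), P2 (24-27), P3 (27-30). The waiting times are 0, 24 and 27, so the average waiting time is (0 + 24 + 27)/3 = 17. The turnaround times are 24, 27 and 30, so the average turnaround time is (24 + 27 + 30)/3 = 27.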
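
A minimal FCFS sketch, assuming integer arrival and burst times; the function name `fcfs` and the tuple layout are hypothetical. It serves processes strictly in arrival order and lets the CPU sit idle when the next process has not arrived yet, so it also covers exercises with arrival gaps.

```python
def fcfs(procs):
    """procs: list of (name, arrival, burst).
    Returns a list of (name, AT, BT, CT, TAT, WT) in execution order."""
    rows = []
    time = 0
    # sorted() is stable, so processes with equal arrival times keep their listed order
    for name, at, bt in sorted(procs, key=lambda p: p[1]):
        time = max(time, at)      # CPU idles if the next process has not arrived yet
        time += bt                # non-preemptive: runs its whole burst
        tat = time - at
        rows.append((name, at, bt, time, tat, tat - bt))
    return rows

# The three-process example, all assumed to arrive at time 0
rows = fcfs([("P1", 0, 24), ("P2", 0, 3), ("P3", 0, 3)])
for row in rows:
    print(row)
n = len(rows)
print("avg WT =", sum(r[5] for r in rows) / n)
print("avg TAT =", sum(r[4] for r in rows) / n)
```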

- 21. First-Come, First-Served (Example)

| Process | AT | BT | CT | TAT | WT |
|---|---|---|---|---|---|
| P1 | 0 | 2 | | | |
| P2 | 3 | 1 | | | |
| P3 | 5 | 6 | | | |

Calculate the average CT, TAT and WT.

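- 22. First-Come, First-Served (Example)

| Process | AT | BT | CT | TAT | WT |
|---|---|---|---|---|---|
| P1 | 0 | 4 | | | |
| P2 | 1 | 3 | | | |
| P3 | 2 | 1 | | | |
| P4 | 3 | 2 | | | |
| P5 | 4 | 5 | | | |

H.W.: Calculate the average CT, TAT and WT.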

- 23. (2) Shortest Job First (SJF) The process with the least burst time (BT) is selected: the CPU is assigned to the process with the shortest next CPU burst. SJF has two types: 1. Non-preemptive: once the CPU is given to a process, the process keeps it until its CPU burst completes.

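- 24. (2) Shortest Job First 2. Preemptive: when a new process enters the ready queue, the scheduler compares its burst time with the remaining time of the currently running process. If the new process is shorter, it preempts the running process. Preemptive SJF is also called Shortest Remaining Time First (SRTF); always running the shortest job keeps the average waiting time low.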
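
A minimal SRTF sketch, assuming positive integer arrival and burst times; `srtf` is a hypothetical name. It re-evaluates the choice every time unit, which is what makes it preemptive. Ties on remaining time go to the earlier arrival (an assumption), so an equal newcomer never preempts the running process.

```python
def srtf(procs):
    """Preemptive SJF (SRTF), simulated one time unit at a time.
    procs: list of (name, arrival, burst) with positive integer bursts.
    Returns {name: completion_time}."""
    arrival = {name: at for name, at, _ in procs}
    remaining = {name: bt for name, _, bt in procs}
    completion = {}
    time = 0
    while len(completion) < len(procs):
        ready = [n for n in remaining if arrival[n] <= time and remaining[n] > 0]
        if not ready:
            time += 1              # nothing has arrived yet: CPU idle
            continue
        # Shortest remaining time wins; ties go to the earlier arrival
        current = min(ready, key=lambda n: (remaining[n], arrival[n]))
        remaining[current] -= 1
        time += 1
        if remaining[current] == 0:
            completion[current] = time
    return completion

print(srtf([("P1", 0, 5), ("P2", 1, 7), ("P3", 3, 4)]))
```

Turnaround and waiting times then follow from the formulas on slide 16.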

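- 25. Shortest Job First (Preemptive)

Q1. Consider the following processes with arrival time (AT) and burst time (BT):

| Process | AT | BT |
|---|---|---|
| P1 | 0 | 4 |
| P2 | 0 | 6 |
| P3 | 0 | 4 |

Calculate the completion time, turnaround time and average waiting time. Preemptive SJF is also known as SRTF.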

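- 26. Shortest Job First (Preemptive)

Q1. Consider the following processes with arrival time (AT) and burst time (BT):

| Process | AT | BT |
|---|---|---|
| P1 | 0 | 9 |
| P2 | 1 | 4 |
| P3 | 2 | 9 |

Calculate the completion time, turnaround time and average waiting time. Preemptive SJF is also known as SRTF.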

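- 27. Shortest Job First (Preemptive)

Q1. Consider the following processes with arrival time (AT) and burst time (BT):

| Process | AT | BT |
|---|---|---|
| P1 | 0 | 5 |
| P2 | 1 | 7 |
| P3 | 3 | 4 |

Calculate the completion time, turnaround time and average waiting time. Preemptive SJF is also known as SRTF.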

- 28. Shortest Job First (Preemptive)

Q1. Consider the following processes with arrival time (AT) and burst time (BT):

| Process | AT | BT |
|---|---|---|
| P1 | 0 | 5 |
| P2 | 1 | 3 |
| P3 | 2 | 3 |
| P4 | 3 | 1 |

Calculate the completion time, turnaround time and average waiting time.

- 29. Shortest Job First (Non-Preemptive) All processes arrive at the same time, with burst times P1 = 15, P2 = 8, P3 = 10 and P4 = 3.
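
A minimal non-preemptive SJF sketch; `sjf_nonpreemptive` is a hypothetical name, and breaking equal bursts by earlier arrival (FCFS) is an assumption. Once a process is picked it runs to completion, and the CPU idles only when nothing has arrived yet.

```python
def sjf_nonpreemptive(procs):
    """procs: list of (name, arrival, burst).
    Returns {name: (CT, TAT, WT)}."""
    pending = list(procs)
    time = 0
    result = {}
    while pending:
        ready = [p for p in pending if p[1] <= time]
        if not ready:
            time = min(p[1] for p in pending)   # CPU idle until the next arrival
            continue
        # Shortest burst first; equal bursts are broken FCFS (earlier arrival)
        chosen = min(ready, key=lambda p: (p[2], p[1]))
        pending.remove(chosen)
        name, at, bt = chosen
        time += bt                              # runs to completion, no preemption
        result[name] = (time, time - at, time - at - bt)
    return result

res = sjf_nonpreemptive([("P1", 0, 15), ("P2", 0, 8), ("P3", 0, 10), ("P4", 0, 3)])
print(res)
print("avg WT =", sum(wt for _, _, wt in res.values()) / len(res))
```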

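- 30. Practice: Shortest Job First (Non-Preemptive)

Q1. Consider the following processes with arrival time (AT) and burst time (BT):

| Process | AT | BT |
|---|---|---|
| P1 | 1 | 7 |
| P2 | 2 | 5 |
| P3 | 3 | 1 |
| P4 | 4 | 2 |
| P5 | 5 | 8 |

Calculate the completion time, turnaround time and average waiting time.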

- 31. SRTF Example CPU idle percentage = (idle time / total time spent) * 100

- 32. SRTF Example Consider three processes, all arriving at time zero, with total execution time of 10, 20 and 30 units, respectively. Each process spends the first 20% of execution time doing I/O, the next 70% of time doing computation, and the last 10% of time doing I/O again. The operating system uses a shortest remaining compute time first scheduling algorithm and schedules a new process either when the running process gets blocked on I/O or when the running process finishes its compute burst. Assume that all I/O operations can be overlapped as much as possible. For what percentage of time does the CPU remain idle? (A) 0% (B) 10.6% (C) 30.0% (D) 89.4%

- 33. SRTF Example Explanation: Let the three processes be p0, p1 and p2, with execution times 10, 20 and 30 respectively. p0 spends the first 2 time units in I/O, 7 units on the CPU and the final 1 unit in I/O. p1 spends the first 4 units in I/O, 14 units on the CPU and the final 2 units in I/O. p2 spends the first 6 units in I/O, 21 units on the CPU and the final 3 units in I/O. Gantt chart: idle (0-2), p0 (2-9), p1 (9-23), p2 (23-44), idle (44-47). Total time spent = 47. Idle time = 2 + 3 = 5. Percentage of idle time = (5/47) * 100 = 10.6%, so the answer is (B).
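
The idle-time reasoning above can be reproduced with a small sketch; the function name and job tuple layout are hypothetical. It assumes, as the question states, that a new compute burst is chosen only when the running one finishes (so the shortest compute time goes next), that all I/O overlaps freely, and that idle time is the total elapsed time minus the total CPU time.

```python
def cpu_idle_percentage(jobs):
    """jobs: list of (name, io_before, cpu, io_after); all arrive at t = 0.
    Each compute burst runs to completion; when the CPU frees up, the ready job
    with the shortest compute burst goes next. I/O of different jobs overlaps freely."""
    pending = list(jobs)
    time = busy = end = 0
    while pending:
        ready = [j for j in pending if j[1] <= time]    # initial I/O finished
        if not ready:
            time = min(j[1] for j in pending)           # CPU idle until an I/O completes
            continue
        job = min(ready, key=lambda j: j[2])            # shortest compute time first
        pending.remove(job)
        time += job[2]
        busy += job[2]
        end = max(end, time + job[3])                   # trailing I/O runs off the CPU
    return 100 * (end - busy) / end

# p0, p1, p2: 10, 20, 30 units split 20% I/O, 70% CPU, 10% I/O
print(round(cpu_idle_percentage([("p0", 2, 7, 1), ("p1", 4, 14, 2), ("p2", 6, 21, 3)]), 1))  # 10.6
```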

- 34. Priority Scheduling A priority is associated with each process, and the CPU is allocated to the process with the highest priority. Processes with equal priority are scheduled in FCFS order. Disadvantage: starvation (low-priority processes may wait for a long time). Solution to starvation: aging, i.e. gradually increasing the priority of a process over time so that it cannot starve (wait indefinitely).

- 35. Priority Scheduling (Preemptive) Consider 4 as the highest and 7 as the lowest priority.

| Process | Arrival Time | Priority | Burst Time | Completion Time |
|---|---|---|---|---|
| P1 | 1 | 5 | 4 | |
| P2 | 2 | 7 | 2 | |
| P3 | 3 | 4 | 3 | |

- 36. Priority Scheduling (Preemptive) Consider 0 as the lowest and 3 as the highest priority.

| Process | Arrival Time | Priority | Burst Time | Completion Time |
|---|---|---|---|---|
| P1 | 0 | 2 | 10 | |
| P2 | 2 | 1 | 5 | |
| P3 | 3 | 0 | 2 | |
| P4 | 5 | 3 | 20 | |

- 37. Priority Scheduling (Preemptive) Consider 3 as the lowest and 0 as the highest priority.

| Process | Arrival Time | Priority | Burst Time | Completion Time |
|---|---|---|---|---|
| P1 | 0 | 2 | 10 | |
| P2 | 2 | 1 | 5 | |
| P3 | 3 | 0 | 2 | |
| P4 | 5 | 3 | 20 | |
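
A minimal preemptive priority sketch, assuming integer times; the function name and the `higher_number_wins` flag are hypothetical. The flag covers both conventions used in the exercises (smaller number = higher priority, or larger number = higher priority). The choice is re-made every time unit, so a newly arrived higher-priority process preempts the running one, and equal priorities fall back to FCFS.

```python
def priority_preemptive(procs, higher_number_wins=False):
    """procs: list of (name, arrival, priority, burst) with integer times.
    Unit-step simulation of preemptive priority scheduling.
    Returns {name: completion_time}."""
    remaining = {name: bt for name, _, _, bt in procs}
    completion = {}
    time = 0

    def rank(p):
        _, at, pr, _ = p
        # Best priority first; equal priorities fall back to FCFS (earlier arrival)
        return (-pr if higher_number_wins else pr, at)

    while len(completion) < len(procs):
        ready = [p for p in procs if p[1] <= time and remaining[p[0]] > 0]
        if not ready:
            time += 1                      # CPU idle until the next arrival
            continue
        name = min(ready, key=rank)[0]     # re-evaluated every unit, hence preemptive
        remaining[name] -= 1
        time += 1
        if remaining[name] == 0:
            completion[name] = time
    return completion

# Slide 35: 4 is the highest priority and 7 the lowest (smaller number wins)
print(priority_preemptive([("P1", 1, 5, 4), ("P2", 2, 7, 2), ("P3", 3, 4, 3)]))
```

For slide 36 (3 highest), pass `higher_number_wins=True`.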

- 38. Round Robin Scheduling A time quantum is associated with all processes. Time quantum: the maximum amount of time a process can run once it is scheduled; if it has not finished by then, it is preempted and moved to the tail of the ready queue. RR scheduling is always preemptive.

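- 39. Round Robin

| Process | Arrival Time | Burst Time | Completion Time |
|---|---|---|---|
| P1 | 0 | 5 | |
| P2 | 1 | 7 | |
| P3 | 2 | 1 | |

TQ: 2

| Process | Arrival Time | Burst Time | Completion Time |
|---|---|---|---|
| P1 | 0 | 3 | |
| P2 | 3 | 4 | |
| P3 | 4 | 6 | |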

- 40. Round Robin (TQ: 2)

| Process | Arrival Time | Burst Time | Completion Time |
|---|---|---|---|
| P1 | 0 | 4 | |
| P2 | 1 | 5 | |
| P3 | 2 | 2 | |
| P4 | 3 | 1 | |
| P5 | 4 | 6 | |
| P6 | 6 | 3 | |
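
A minimal Round Robin sketch using `collections.deque` as the FIFO ready queue; the function name is hypothetical. It assumes the common exam convention that processes arriving during (or exactly at the end of) a time slice join the queue before the preempted process is put back at the tail. Other conventions can give different completion times.

```python
from collections import deque

def round_robin(procs, quantum):
    """procs: list of (name, arrival, burst). Returns {name: completion_time}."""
    procs = sorted(procs, key=lambda p: p[1])
    remaining = {name: bt for name, _, bt in procs}
    completion = {}
    ready = deque()
    time = 0
    i = 0                                   # index of the next process to arrive
    n = len(procs)

    def admit(up_to):
        nonlocal i
        while i < n and procs[i][1] <= up_to:
            ready.append(procs[i][0])
            i += 1

    while len(completion) < n:
        admit(time)
        if not ready:
            time = procs[i][1]              # CPU idle until the next arrival
            continue
        name = ready.popleft()
        run = min(quantum, remaining[name])
        time += run
        remaining[name] -= run
        admit(time)                         # new arrivals go ahead of the preempted process
        if remaining[name] == 0:
            completion[name] = time
        else:
            ready.append(name)              # quantum expired: back to the tail
    return completion

procs = [("P1", 0, 4), ("P2", 1, 5), ("P3", 2, 2), ("P4", 3, 1), ("P5", 4, 6), ("P6", 6, 3)]
print(round_robin(procs, 2))
```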


- 42. Multilevel Queue Scheduling The ready queue is partitioned into several separate queues. For example, a multilevel queue scheduling algorithm with five queues: 1. System processes 2. Interactive processes 3. Interactive editing processes 4. Batch processes 5. Student/user processes

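- 43. Multilevel Queue In the multilevel feedback variant, a process can move between the queues. Such a scheduler is defined by the following parameters: the number of queues; the scheduling algorithm for each queue; the method used to determine when to upgrade a process to a higher-priority queue or demote it to a lower-priority one; and the method used to determine which queue a process enters when it needs service.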

- 44. Multilevel Queue

- 45. Multilevel Queue

- 46. Multilevel Queue 1. System Processes: programs that belong to the OS itself (system files). 2. Interactive Processes: processes that interact with the user in real time, e.g. playing games online or listening to music online. 3. Batch Processes: many jobs are pooled, and one job at a time is selected for execution (historically with I/O by punch cards). 4. Student/User Processes

- 47. Multilevel Queue No process in the batch queue could run unless the other queues for the system were empty. If an interactive editing process entered the ready queue while a batch process was running, the batch process would be preempted.

- 48. Multilevel Queue Processes can be: Foreground processes: interactive processes the user is currently working with. Background processes: processes that run in the background, whose effects are not directly visible to the user. Processes are permanently assigned to one queue based on some property, such as memory size, process priority or process type. Each queue has its own scheduling algorithm.

- 49. Multilevel Queue Different types of processes exist, so the same scheduling algorithm cannot suit all of them. Advantage: a separate scheduling algorithm can be applied to each queue. Disadvantages: 1. As long as a higher-priority queue is not empty, no process from a lower-priority queue is selected. 2. This can cause starvation of lower-priority processes.

- 50. Multilevel Feedback Queue The solution is the multilevel feedback queue. If a process takes too long to execute, it is preempted and sent to a lower-priority queue. To keep a low-priority process from waiting too long, a process that has waited long enough in a lower-priority queue is moved to a higher-priority queue (aging).
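
A minimal multilevel feedback queue sketch, showing only the demotion rule. Everything specific here is an assumption for illustration: the function name, all processes arriving at time 0, quanta of 8 and 16 for the two upper queues, and FCFS in the bottom queue. Aging (promotion) is not modeled.

```python
from collections import deque

def mlfq(procs, quanta=(8, 16)):
    """procs: list of (name, burst), all arriving at t = 0.
    Queue k uses round robin with quanta[k]; the last queue is FCFS.
    A process that uses its whole quantum is demoted one level.
    Returns {name: completion_time}."""
    levels = [deque() for _ in range(len(quanta) + 1)]
    remaining = dict(procs)
    for name, _ in procs:
        levels[0].append(name)              # every process starts in the top queue
    time = 0
    completion = {}
    while any(levels):
        k = next(i for i, q in enumerate(levels) if q)   # highest non-empty queue
        name = levels[k].popleft()
        # bottom queue is FCFS: run to completion; otherwise run at most one quantum
        run = remaining[name] if k == len(quanta) else min(quanta[k], remaining[name])
        time += run
        remaining[name] -= run
        if remaining[name] == 0:
            completion[name] = time
        else:
            levels[k + 1].append(name)      # used its full quantum: demote
    return completion

print(mlfq([("A", 5), ("B", 30), ("C", 12)]))
```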

- 53. Multiprocessor Scheduling Concerns: • With multiple CPUs, load sharing becomes possible. • The focus here is on systems with homogeneous (identical) processors, so any processor can run any process. • Even then, limitations exist: for example, if an I/O device is attached to a private bus of one processor, processes that use that device must be scheduled on that processor.

- 55. Approaches to Multiple-Processor Scheduling 1. Asymmetric multiprocessing • All scheduling decisions, I/O processing and other system activities are handled by a single processor, the master server. • The other processors execute only user code. • Only one processor accesses the system data structures, which reduces the need for data sharing and avoids conflicting updates.

- 56. Approaches to Multiple-Processor Scheduling 2. Symmetric multiprocessing (SMP) Each processor is self-scheduling. All processes may be in a common ready queue, or each processor may have its own private queue of ready processes. The scheduler for each processor examines the ready queue and selects a process to execute. Modern operating systems widely support SMP, including Windows, Linux and Mac OS X.

- 57. Issues concerning SMP systems 1. Processor Affinity (a process has an affinity for the processor on which it is currently running.) Consider what happens to cache memory when a process has been running on a specific processor. The data most recently accessed by the process populate the cache for the processor. As a result, successive memory accesses by the process are often in cache memory.

- 58. Issues concerning SMP systems 1. Processor Affinity If the process migrates to another processor, the contents of cache memory must be invalidated for the first processor, and the cache for the second processor must be repopulated.

- 59. Issues concerning SMP systems 1. Processor Affinity Because invalidating and repopulating caches is costly, most SMP systems try to avoid migrating processes from one processor to another and instead attempt to keep each process running on the same processor. This is known as processor affinity: a process has an affinity for the processor on which it is currently running.

- 60. Issues concerning SMP systems Forms of Processor Affinity 1. Soft affinity • The OS tries to keep a process running on the same processor but does not guarantee it. • The process may still migrate between processors. 2. Hard affinity • A process can specify a subset of processors on which it may run. • The OS will not run it on processors outside that set.
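
On Linux, hard affinity can be requested from Python through `os.sched_getaffinity` and `os.sched_setaffinity`, which wrap the Linux system calls of the same name. The sketch below pins the calling process to one CPU it is already allowed to use; the helper name is hypothetical. These functions are not available on macOS or Windows, so the helper returns `None` there.

```python
import os

def pin_current_process(cpu=None):
    """Hard affinity: restrict the calling process to a single CPU.
    Returns the new affinity set, or None where sched_setaffinity is unavailable."""
    if not hasattr(os, "sched_setaffinity"):
        return None
    allowed = os.sched_getaffinity(0)        # CPUs this process may currently use (0 = self)
    target = min(allowed) if cpu is None else cpu
    os.sched_setaffinity(0, {target})        # from now on, run only on `target`
    return os.sched_getaffinity(0)

print(pin_current_process())
```

Without such a call, the kernel applies only soft affinity and may still migrate the process.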

- 61. Issues concerning SMP systems 2. Load Balancing •On SMP systems, workload balance is important. •All processors should be fully utilized efficiently. •Load balancing prevents some processors from idling. •High workloads may burden specific processors excessively. •Idle processors waste system resources and efficiency. •Balanced workloads maximize the system's overall performance.

- 62. Issues concerning SMP systems 2. Load Balancing Two approaches to load balancing: push migration and pull migration. 1. Push migration: a specific task periodically checks the load on each processor and—if it finds an imbalance—evenly distributes the load by moving (or pushing) processes from overloaded to idle or less-busy processors. 2. Pull migration: occurs when an idle processor pulls a waiting task from a busy processor.
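
A toy sketch of both migration styles over per-CPU run queues, modeled as a dict of lists. The function names, the data layout and the "differ by at most one" balance target are assumptions for illustration, not how any particular kernel implements load balancing.

```python
def push_migration(queues):
    """queues: dict cpu_id -> list of waiting tasks (one run queue per CPU).
    Periodic balancer: push tasks from the most-loaded CPU to the least-loaded
    one until queue lengths differ by at most one."""
    while True:
        busiest = max(queues, key=lambda c: len(queues[c]))
        lightest = min(queues, key=lambda c: len(queues[c]))
        if len(queues[busiest]) - len(queues[lightest]) <= 1:
            return queues
        queues[lightest].append(queues[busiest].pop())

def pull_migration(queues, idle_cpu):
    """An idle CPU pulls one waiting task from the most-loaded CPU.
    Returns the migrated task, or None if there was nothing to pull."""
    if queues[idle_cpu]:
        return None                                   # this CPU is not idle
    busiest = max(queues, key=lambda c: len(queues[c]))
    if not queues[busiest]:
        return None                                   # every queue is empty
    task = queues[busiest].pop()
    queues[idle_cpu].append(task)
    return task

queues = {0: ["a", "b", "c", "d"], 1: [], 2: ["e"]}
push_migration(queues)
print({cpu: len(q) for cpu, q in queues.items()})
```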

- 63. Issues concerning SMP systems 3. Multicore Processors • A multicore processor places multiple independent processing cores on the same physical chip. • Each core reads and executes program instructions. • A core has its own register set and, typically, its own cache. • Each core does the work of a processor without being an entire processor chip. • To the OS, each core appears as a separate processor for scheduling purposes.

- 64. Issues concerning SMP systems 3. Multicore Processors Multicore processors may complicate scheduling. When a processor accesses memory, it can spend a significant amount of time waiting for the data to become available. This situation is known as a memory stall. A memory stall may occur because of a cache miss (accessing data that are not in cache memory).

- 65. Issues concerning SMP systems Memory Stall Because of memory stalls, a processor can spend up to 50 percent of its time waiting for data to become available from memory.

- 66. Real-Time Scheduling Real-time scheduling concerns the analysis and testing of schedulers and of the algorithms used in real-time applications. There are two kinds of system: a) Soft real-time systems provide no guarantee as to when a critical real-time process will be scheduled; they guarantee only that it will be given preference over noncritical processes. b) Hard real-time systems have stricter requirements: a task must be serviced by its deadline.

- 67. Rate-Monotonic Scheduling • Rate-monotonic scheduling (RMS) assigns priorities to tasks statically. • It uses a preemptive scheduling policy and is used in real-time operating systems. • Each task's static priority depends on its period (cycle duration): the shorter the period, the higher the priority. • RMS is optimal among static-priority algorithms: if a set of periodic tasks cannot be scheduled by RMS, no other algorithm that assigns static priorities can schedule it.

- 68. Rate-Monotonic Scheduling Rate-monotonic scheduling assumes that the processing time of a periodic process is the same for each CPU burst. Example: consider P1 and P2 with periods 50 and 100 and burst times 20 and 35, respectively. Calculate the CPU utilization of each process and the total CPU utilization. Sol: CPU utilization of process i = burst time / period = t_i / p_i. For P1: 20/50 = 0.40, i.e. 40%. For P2: 35/100 = 0.35, i.e. 35%. Total CPU utilization = 40% + 35% = 75%.
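
The utilization arithmetic can be sketched as follows; the function name is hypothetical. As an extra check, it also computes the Liu and Layland bound n(2^(1/n) - 1): if total utilization does not exceed it, RMS is guaranteed to meet all deadlines. The test is sufficient but not necessary, so exceeding the bound is inconclusive.

```python
def rms_utilization(tasks):
    """tasks: list of (burst, period).
    Returns (per-task utilizations, total utilization, Liu-Layland bound)."""
    per_task = [b / p for b, p in tasks]     # U_i = t_i / p_i
    total = sum(per_task)
    n = len(tasks)
    bound = n * (2 ** (1 / n) - 1)           # n(2^(1/n) - 1), about 0.828 for n = 2
    return per_task, total, bound

per_task, total, bound = rms_utilization([(20, 50), (35, 100)])
print(per_task, total, round(bound, 3))
print("guaranteed schedulable by RMS" if total <= bound else "bound test inconclusive")
```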

- 69. Earliest Deadline First Scheduling Earliest-deadline-first (EDF) scheduling assigns priorities dynamically according to deadline: the earlier the deadline, the higher the priority; the later the deadline, the lower the priority. Under the EDF policy, when a process becomes runnable, it must announce its deadline requirements to the system.
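
A unit-step EDF sketch for periodic tasks. The assumptions are that each job's deadline is the end of its period, that times are integers, and that simulating one hyperperiod (the LCM of the periods) is enough; the function name is hypothetical and `math.lcm` needs Python 3.9+. At every time unit the ready job with the earliest absolute deadline runs, and any job still unfinished at its deadline is reported as a miss.

```python
from math import lcm

def edf_misses(tasks):
    """tasks: dict name -> (burst, period); each job's deadline is the end of its period.
    Simulates one hyperperiod in unit steps. Returns a list of (name, deadline) misses."""
    hyper = lcm(*(p for _, p in tasks.values()))
    jobs = []        # active jobs as [absolute_deadline, name, remaining]
    missed = []
    for t in range(hyper):
        for name, (burst, period) in tasks.items():
            if t % period == 0:
                jobs.append([t + period, name, burst])   # release a new job
        if jobs:
            job = min(jobs)          # earliest absolute deadline first (ties: by name)
            job[2] -= 1
            if job[2] == 0:
                jobs.remove(job)
        # after slot [t, t+1), any unfinished job whose deadline has arrived missed it
        for job in [j for j in jobs if j[0] <= t + 1]:
            missed.append((job[1], job[0]))
            jobs.remove(job)
    return missed

# The two tasks from the rate-monotonic example (total utilization 75%)
print(edf_misses({"P1": (20, 50), "P2": (35, 100)}))
```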

- 70. Practice MCQ: