Video+Language: From Classification to Description

- 1. Video + Language Jiebo Luo Department of Computer Science

- 2. Introduction • Video has become ubiquitous on the Internet, TV, as well as personal devices. • Recognition of video content has been a fundamental challenge in computer vision for decades, where previous research predominantly focused on understanding videos using a predefined yet limited vocabulary. • Thanks to the recent development of deep learning techniques, researchers in both computer vision and multimedia communities are now striving to bridge video with natural language, which can be regarded as the ultimate goal of video understanding. • We present recent advances in exploring the synergy of video understanding and language processing, including video- language alignment, video captioning, and video emotion analysis.

- 3. From Classification to Description Recognizing Realistic Actions from Videos "in the Wild" UCF-11 to UCF-101 (CVPR 2009) Similarity btw Videos Cross-Domain Learning Visual Event Recognition in Videos by Learning from Web Data (CVPR2010 Best Student Paper) Heterogeneous Feature Machine For Visual Recognition (ICCV 2009)

- 4. From Classification to Description 4 Semantic Video Entity Linking (ICCV2015)

- 5. Exploring Coherent Motion Patterns via Structured Trajectory Learning for Crowd Mood Modeling (IEEE T-CSVT 2016) From Classification to Description

- 6. Aligning Language Descriptions with Videos Iftekhar Naim, Young Chol Song, Qiguang Liu Jiebo Luo, Dan Gildea, Henry Kautz link

- 7. Unsupervised Alignment of Actions in Video with Text Descriptions Y. Song, I. Naim, A. Mamun, K. Kulkarni, P. Singla J. Luo, D. Gildea, H. Kautz

- 8. OverviewOverview Unsupervised alignment of video with text Motivations Generate labels from data (reduce burden of manual labeling) Learn new actions from only parallel video+text Extend noun/object matching to verbs and actions Unsupervised alignment of video with text Motivations Generate labels from data (reduce burden of manual labeling) Learn new actions from only parallel video+text Extend noun/object matching to verbs and actions Matching Verbs to Actions The person takes out a knife and cutting board Matching Nouns to Objects [Naim et al., 2015] An overview of the text and video alignment framework

- 9. Hyperfeatures for ActionsHyperfeatures for Actions High-level features required for alignment with text → Motion features are generally low-level Hyperfeatures, originally used for image recognition extended for use with motion features → Use temporal domain instead of spatial domain for vector quantization (clustering) High-level features required for alignment with text → Motion features are generally low-level Hyperfeatures, originally used for image recognition extended for use with motion features → Use temporal domain instead of spatial domain for vector quantization (clustering) Originally described in “Hyperfeatures: Multilevel Local Coding for Visual Recog‐ nition” Agarwal, A. (ECCV 06), for images Hyperfeatures for actions

- 10. Hyperfeatures for ActionsHyperfeatures for Actions From low-level motion features, create high-level representations that can easily align with verbs in text From low-level motion features, create high-level representations that can easily align with verbs in text Cluster 3 at time t Accumulate over frame at time t & cluster Conduct vector quantization of the histogram at time t Cluster 3, 5, …,5,20 = Hyperfeature 6 Each color code is a vector quantized STIP point Vector quantized STIP point histogram at time t Accumulate clusters over window (t‐w/2, t+w/2] and conduct vector quantization → first‐level hyperfeatures Align hyperfeatures with verbs from text (using LCRF)

- 11. Latent-variable CRF AlignmentLatent-variable CRF Alignment CRF where the latent variable is the alignment N pairs of video/text observations {(xi, yi)}i=1 (indexed by i) Xi,m represents nouns and verbs extracted from the mth sentence Yi,n represents blobs and actions in interval n in the video Conditional likelihood conditional probability of Learning weights w Stochastic gradient descent CRF where the latent variable is the alignment N pairs of video/text observations {(xi, yi)}i=1 (indexed by i) Xi,m represents nouns and verbs extracted from the mth sentence Yi,n represents blobs and actions in interval n in the video Conditional likelihood conditional probability of Learning weights w Stochastic gradient descent where feature function More details in Naim et al. 2015 NAACL Paper ‐ Discriminative unsupervised alignment of natural language instructions with corresponding video segments N

- 12. Experiments: Wetlab DatasetExperiments: Wetlab Dataset RGB-Depth video with lab protocols in text Compare addition of hyperfeatures generated from motion features to previous results (Naim et al. 2015) Small improvement over previous results Activities already highly correlated with object-use RGB-Depth video with lab protocols in text Compare addition of hyperfeatures generated from motion features to previous results (Naim et al. 2015) Small improvement over previous results Activities already highly correlated with object-use Detection of objects in 3D space using color and point‐cloud Previous results using object/noun alignment only Addition of different types of motion features 2DTraj: Dense trajectories *Using hyperfeature window size w=150

- 13. Experiments: TACoS DatasetExperiments: TACoS Dataset RGB video with crowd-sourced text descriptions Activities such as “making a salad,” “baking a cake” No object recognition, alignment using actions only Uniform: Assume each sentence takes the same amount of time over the entire sequence Segmented LCRF: Assume the segmentation of actions is known, infer only the action labels Unsupervised LCRF: Both segmentation and alignment are unknown Effect of window size and number of clusters Consistent with average action length: 150 frames RGB video with crowd-sourced text descriptions Activities such as “making a salad,” “baking a cake” No object recognition, alignment using actions only Uniform: Assume each sentence takes the same amount of time over the entire sequence Segmented LCRF: Assume the segmentation of actions is known, infer only the action labels Unsupervised LCRF: Both segmentation and alignment are unknown Effect of window size and number of clusters Consistent with average action length: 150 frames *Using hyperfeature window size w=150 *d(2)=64

- 14. Experiments: TACoS DatasetExperiments: TACoS Dataset Segmentation from a sequence in the dataset Segmentation from a sequence in the dataset Crowd‐sourced descriptions Example of text and video alignment generated by the system on the TACoS corpus for sequence s13‐d28

- 15. Image Captioning with Semantic Attention (CVPR 2016) Quanzeng You, Jiebo Luo Hailin Jin, Zhaowen Wang and Chen Fang

- 16. Image Captioning • Motivations – Real-world Usability • Help visually impaired people, learning-impaired – Improving Image Understanding • Classification, Objection detection – Image Retrieval 1. a young girl inhales with the intent of blowing out a candle 2. girl blowing out the candle on an ice cream 1. A shot from behind home plate of children playing baseball 2. A group of children playing baseball in the rain 3. Group of baseball players playing on a wet field

- 17. Introduction of Image Captioning • Machine learning as an approach to solve the problem

- 18. Overview • Brief overview of current approaches • Our main motivation • The proposed semantic attention model • Evaluation results

- 19. Brief Introduction of Recurrent Neural Network • Different from CNN 11),( ttttt BhAxhxfh tt Chy • Unfolding over time Feedforward network Backpropagation through time Inputs Hidden Units Outputs xt ht-1 ht yt Inputs Hidden Units Outputs C yt Inputs Hidden Units Inputs Hidden Units B B A A A B t-1 t-2

- 20. Applications of Recurrent Neural Networks • Machine Translation • Reads input sentence “ABC” and produces “WXYZ” Decoder RNNEncoder RNN

- 21. Encoder-Decoder Framework for Captioning • Inspired by neural network based machine translation • Loss function N t tt wwIwp IwpL 1 10 ),,,|(log )|(log

- 22. Our Motivation • Additional textual information – Own noisy title, tags or captions (Web)

- 23. Our Motivation • Additional textual information – Own noisy title, tags or captions (Web) – Visually similar nearest neighbor images

- 24. Our Motivation • Additional textual information – Own noisy title, tags or captions (Web) – Visually similar nearest neighbor images – Success of low-level tasks • Visual attributes detection

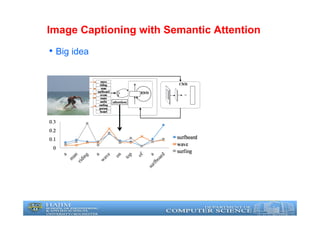

- 25. Image Captioning with Semantic Attention • Big idea

- 26. First Idea • Provide additional knowledge at each input node • Concatenate the input word and the extra attributes K • Each image has a fixed keyword list )],,([),( 11 tktttt hbKWwfhxfh Visual Features: 1024 GoogleNet LSTM Hidden states: 512 Training details: 1. 256 image/sentence pairs 2. RMS-Prob

- 27. Using Attributes along with Visual Features • Provide additional knowledge at each input node • Concatenate the visual embedding and keywords for h0 ];[),( 10 bKWvWhvfh kiv

- 28. Attention Model on Attributes • Instead of using the same set of attributes at every step • At each step, select the attributes (attention) m mtmt kKwatt ),( )softmax VK(wT tt ))],,(;([),( 11 tttttt hKwattxfhxfh

- 29. Overall Framework • Training with a bilinear/bilateral attention model ht pt xt v {Ai} Yt~ RNN Image CNN AttrDet 1 AttrDet 2 AttrDet 3 AttrDet N t = 0 Word

- 30. Visual Attributes • A secondary contribution • We try different approaches

- 31. Performance • Examples showing the impact of visual attributes on captions

- 32. Performance on the Testing Dataset • Publicly available split

- 33. Performance • MS-COCO Image Captioning Challenge

- 34. TGIF: A New Dataset and Benchmark on Animated GIF Description Yuncheng Li, Yale Song, Liangliang Cao, Joel Tetreault, Larry Goldberg, Jiebo Luo

- 35. Overview

- 36. Comparison with Existing Datasets

- 37. Examples a skate boarder is doing trick on his skate board. a gloved hand opens to reveal a golden ring. a sport car is swinging on the race playground the vehicle is moving fast into the tunnel

- 38. Contributions • A large scale animated GIF description dataset for promoting image sequence modeling and research • Performing automatic validation to collect natural language descriptions from crowd workers • Establishing baseline image captioning methods for future benchmarking • Comparison with existing datasets, highlighting the benefits with animated GIFs

- 39. In Comparison with Existing Datasets • The language in our dataset is closer to common language • Our dataset has an emphasis on the verbs • Animated GIFs are more coherent and self contained • Our dataset can be used to solve more difficult movie description problem

- 40. Machine Generated Sentence Examples

- 41. Machine Generated Sentence Examples

- 42. Machine Generated Sentence Examples

- 43. Comparing Professionals and Crowd-workers Crowd worker: two people are kissing on a boat. Professional: someone glances at a kissing couple then steps to a railing overlooking the ocean an older man and woman stand beside him. Crowd worker: two men got into their car and not able to go anywhere because the wheels were locked. Professional: someone slides over the camaros hood then gets in with his partner he starts the engine the revving vintage car starts to backup then lurches to a halt. Crowd worker: a man in a shirt and tie sits beside a person who is covered in a sheet. Professional: he makes eye contact with the woman for only a second. More: http://beta- web2.cloudapp.net/ls mdc_sentence_comp arison.html

- 44. Movie Descriptions versus TGIF • Crowd workers are encouraged to describe the major visual content directly, and not to use overly descriptive language • Because our animated GIFs are presented to crowd workers without any context, the sentences in our dataset are more self-contained • Animated GIFs are perfectly segmented since they are carefully curated by online users to create a coherent visual story

- 45. Code & Dataset • Yahoo! webscope (Coming soon!) • Animated GIFs and sentences • Code and models for LSTM baseline • Pipeline for syntactic and semantic validation to collect natural languages from crowd workers

- 46. Thanks Q & A Visual Intelligence & Social Multimedia Analytics Google *** Baidu Sogou Bing XiaoIce How Intelligent Are the AI Systems Today?

![OverviewOverview

Unsupervised alignment of video with text

Motivations

Generate labels from data (reduce burden of manual labeling)

Learn new actions from only parallel video+text

Extend noun/object matching to verbs and actions

Unsupervised alignment of video with text

Motivations

Generate labels from data (reduce burden of manual labeling)

Learn new actions from only parallel video+text

Extend noun/object matching to verbs and actions

Matching Verbs to Actions

The person takes out a knife

and cutting board

Matching Nouns to Objects

[Naim et al., 2015]

An overview of the text and

video alignment framework](https://image.slidesharecdn.com/videolanguage-compimage-160921153334/85/Video-Language-From-Classification-to-Description-8-320.jpg)

![Hyperfeatures for ActionsHyperfeatures for Actions

From low-level motion features, create high-level

representations that can easily align with verbs in text

From low-level motion features, create high-level

representations that can easily align with verbs in text

Cluster 3

at time t

Accumulate over

frame at time t

& cluster

Conduct vector

quantization

of the histogram

at time t

Cluster 3, 5, …,5,20

= Hyperfeature 6

Each color code

is a vector

quantized

STIP point

Vector quantized

STIP point histogram at time t

Accumulate clusters over

window (t‐w/2, t+w/2]

and conduct vector

quantization

→ first‐level hyperfeatures

Align hyperfeatures

with verbs from text

(using LCRF)](https://image.slidesharecdn.com/videolanguage-compimage-160921153334/85/Video-Language-From-Classification-to-Description-10-320.jpg)

![First Idea

• Provide additional knowledge at each input node

• Concatenate the input word and the extra attributes K

• Each image has a fixed keyword list

)],,([),( 11 tktttt hbKWwfhxfh

Visual Features: 1024

GoogleNet

LSTM Hidden states: 512

Training details:

1. 256 image/sentence

pairs

2. RMS-Prob](https://image.slidesharecdn.com/videolanguage-compimage-160921153334/85/Video-Language-From-Classification-to-Description-26-320.jpg)

![Using Attributes along with Visual Features

• Provide additional knowledge at each input node

• Concatenate the visual embedding and keywords for h0

];[),( 10 bKWvWhvfh kiv ](https://image.slidesharecdn.com/videolanguage-compimage-160921153334/85/Video-Language-From-Classification-to-Description-27-320.jpg)

![Attention Model on Attributes

• Instead of using the same set of attributes at every

step

• At each step, select the attributes (attention)

m mtmt kKwatt ),(

)softmax VK(wT

tt

))],,(;([),( 11 tttttt hKwattxfhxfh](https://image.slidesharecdn.com/videolanguage-compimage-160921153334/85/Video-Language-From-Classification-to-Description-28-320.jpg)