Ad

What's New in PostgreSQL 17? - Mydbops MyWebinar Edition 35

- 1. What’s New in PostgreSQL 17 Presented by Mukul Mahto Mydbops Mydbops MyWebinar - 35 Aug 23rd, 2024

- 2. Consulting Services Consulting Services Managed Services ❏ Database Management and consultancy provider ❏ Founded in 2016 ❏ Assisted 800+ happy customers ❏ AWS partners ❏ PCI & ISO certified About Us

- 3. ❏ Introduction to PostgreSQL 17 ❏ Performance Optimization ❏ Partitioning & Distributed Workloads ❏ Developer Workflow Enhancement ❏ Security Features ❏ Backup and Export Management ❏ Monitoring Agenda

- 4. PostgreSQL 17 Key Enhancements - Performance Boosts - High Availability - Developer Features - Security Why Upgrade? - Boosted Efficiency - High Availability - Cutting-Edge Features

- 6. ❏ Improved Vacuum Mechanism New Data Structure: Efficient radix tree replaces old array. Dynamic Memory Allocation: No 1GB limit. Faster TID Lookup: Speedier Tuple ID searches. 20x Memory Reduction with New Data Structure ❏ Reduced WAL Volume for Vacuum Single record for freezing and pruning. Faster Sync: Reduced sync and write time. Mark Items as LP_UNUSED Instead of LP_DEAD: Reduces WAL volume

- 7. Comparison Performance of Postgres 16 vs 17 maintenance_work_mem is set to 64 megabytes on both of these. Postgres 16:- Postgres 17:- CREATE TABLE test_16 (id INT); INSERT INTO test_16 (id) SELECT generate_series(1, 10000000); CREATE INDEX ON test_16(id); UPDATE test_16 SET id = id - 1; CREATE TABLE test_17 (id INT); INSERT INTO test_17 (id) SELECT generate_series(1, 10000000); CREATE INDEX ON test_17(id); UPDATE test_17 SET id = id - 1;

- 8. Postgres 17 Postgres 16 -[ RECORD 1 ]------+--------------- pid | 21830 datid | 16384 datname | pg16 relid | 16389 phase | vacuuming heap heap_blks_total | 88496 heap_blks_scanned | 88496 heap_blks_vacuumed| 7478 index_vacuum_count | 1 max_dead_tuples | 11184809 num_dead_tuples | 10000000 -[ RECORD 1 ]--------+--------------- pid | 21971 datid | 16389 datname | pg17 relid | 16468 phase | vacuuming heap heap_blks_total | 88496 heap_blks_scanned | 88496 heap_blks_vacuumed | 27040 index_vacuum_count | 0 max_dead_tuple_byte | 67108864 dead_tuple_bytes | 2556928 num_dead_item_ids | 10000000 indexes_total | 0 indexes_processed | 0

- 9. Postgres 16 Postgres 17 2024-08-19 10:49:17.351 UTC [21830] LOG: automatic vacuum of table "pg16.public.test_16": index scans: 1 pages: 0 removed, 88496 remain, 88496 scanned (100.00% of total) tuples: 3180676 removed, 10000000 remain, 0 are dead but not yet removable removable cutoff: 747, which was 0 XIDs old when operation ended new relfrozenxid: 745, which is 2 XIDs ahead of previous value frozen: 0 pages from table (0.00% of total) had 0 tuples frozen index scan needed: 44248 pages from table (50.00% of total) had 10000000 dead item identifiers removed index "test_16_id_idx": pages: 54840 in total, 0 newly deleted, 0 currently deleted, 0 reusable avg read rate: 24.183 MB/s, avg write rate: 22.317 MB/s buffer usage: 119566 hits, 156585 misses, 144501 dirtied WAL usage: 201465 records, 60976 full page images, 233670948 bytes system usage: CPU: user: 9.04 s, system: 3.56 s, elapsed: 50.58 s 2024-08-19 10:51:54.081 UTC [21959] LOG: automatic vacuum of table "pg17.public.test_17": index scans: 0 pages: 0 removed, 76831 remain, 76831 scanned (100.00% of total) tuples: 0 removed, 10000000 remain, 0 are dead but not yet removable removable cutoff: 827, which was 1 XIDs old when operation ended new relfrozenxid: 825, which is 1 XIDs ahead of previous value frozen: 0 pages from table (0.00% of total) had 0 tuples frozen index scan not needed: 0 pages from table (0.00% of total) had 0 dead item identifiers removed avg read rate: 34.779 MB/s, avg write rate: 19.580 MB/s buffer usage: 79090 hits, 74631 misses, 42016 dirtied WAL usage: 4969 records, 2 full page images, 309554 bytes system usage: CPU: user: 2.71 s, system: 1.14 s, elapsed: 16.76 s

- 10. Streaming I/O in Pg 17 ❏ Streaming I/O with I/O combining - Reads multiple pages in one go - Increases read size to 128K(default) - New GUC: io_combine_limit ❏ Streaming I/O Enhancements for Sequential Scans - Faster sequential scans with 128K reads - Reduces system calls for improved speed - Improves SELECT query performance ❏ Streaming I/O Benefits for ANALYZE - Efficient data reading via prefetching - Improved performance for table statistics - Supports faster query planning 1 2 3 1 2 3 8k 8k 8k 24k Postgres 16 Postgres 17 OS OS

- 11. Postgres 16 pg16=# EXPLAIN (ANALYZE, BUFFERS) SELECT COUNT(*) FROM test_16; QUERY PLAN ------------------------------------------------------------------------------------------------------------------------------ Aggregate (cost=212496.60..212496.61 rows=1 width=8) (actual time=2869.400..2869.401 rows=1 loops=1) Buffers: shared hit=416 read=88080 -> Seq Scan on test_16 (cost=0.00..187696.48 rows=9920048 width=0) (actual time=145.205..2082.923 rows=10000000 loops=1) Buffers: shared hit=416 read=88080 Planning Time: 0.059 ms JIT: Functions: 2 Options: Inlining false, Optimization false, Expressions true, Deforming true Timing: Generation 0.316 ms, Inlining 0.000 ms, Optimization 0.120 ms, Emission 1.452 ms, Total 1.888 ms Execution Time: 2869.794 ms (10 rows)

- 12. Postgres 17 pg17=# EXPLAIN (ANALYZE, BUFFERS) SELECT COUNT(*) FROM test_17; QUERY PLAN ------------------------------------------------------------------------------------------------------------------------------------------------ Finalize Aggregate (cost=142142.30..142142.31 rows=1 width=8) (actual time=1287.047..1287.196 rows=1 loops=1) Buffers: shared hit=4544 read=83952 -> Gather (cost=142142.09..142142.30 rows=2 width=8) (actual time=1281.208..1287.189 rows=3 loops=1) Workers Planned: 2 Workers Launched: 2 Buffers: shared hit=4544 read=83952 -> Partial Aggregate (cost=141142.09..141142.10 rows=1 width=8) (actual time=1268.267..1268.268 rows=1 loops=3) Buffers: shared hit=4544 read=83952 -> Parallel Seq Scan on test_17 (cost=0.00..130612.87 rows=4211687 width=0) (actual time=51.831..836.598 rows=3333333 loops=3) Buffers: shared hit=4544 read=83952 Planning: Buffers: shared hit=61 Planning Time: 0.416 ms Execution Time: 1287.960 ms (14 rows)

- 13. ● Configurable Cache Sizes: Adjustable SLRU cache. ● Banked Cache: Efficient data eviction by bank. ● Separate Locks: Each bank uses its own lock. Improved SLRU Performance

- 14. Query Optimization ❏ Faster B-Tree Index Scan - Improved IN clause performance. ❏ 3x Performance boost

- 15. Postgres 16 Postgres 17 pg16=# EXPLAIN (ANALYZE, BUFFERS) SELECT * FROM test_16 WHERE id IN (1, 2, 3, 4, 45, 6, 10007); QUERY PLAN ------------------------------------------------------------------------------------------------------------------------------ Index Only Scan using test_16_id_idx on test_16 (cost=0.43..31.17 rows=7 width=4) (actual time=0.052..1.277 rows=7 loops=1) Index Cond: (id = ANY ('{1,2,3,4,45,6,10007}'::integer[])) Heap Fetches: 0 Buffers: shared hit=24 read=1 Planning: Buffers: shared hit=61 Planning Time: 0.401 ms Execution Time: 1.293 ms (8 rows) pg17=# EXPLAIN (ANALYZE, BUFFERS) SELECT * FROM test_17 WHERE id IN (1, 2, 3, 4, 45, 6, 10007); QUERY PLAN ------------------------------------------------------------------------------------------------------------------------------ Index Only Scan using test_17_id_idx on test_17 (cost=0.43..31.17 rows=7 width=4) (actual time=0.056..0.542 rows=7 loops=1) Index Cond: (id = ANY ('{1,2,3,4,45,6,10007}'::integer[])) Heap Fetches: 0 Buffers: shared hit=6 read=1 Planning: Buffers: shared hit=70 Planning Time: 0.452 ms Execution Time: 0.564 ms (8 rows)

- 16. Performance Boost with Constraints ❏ NULL Constraint Optimization - Skips unnecessary checks for faster queries. ❏ Impact of NOT NULL on Execution - Reduces processing for more efficient scans.

- 17. Execution Plans without NOT NULL Constraint pg17=# explain (analyze, buffers, costs off) SELECT count(*) FROM employees WHERE name IS NULL; QUERY PLAN ----------------------------------------------------------------------- Aggregate (actual time=0.022..0.023 rows=1 loops=1) Buffers: shared hit=1 -> Seq Scan on employees (actual time=0.016..0.017 rows=2 loops=1) Filter: (name IS NULL) Rows Removed by Filter: 8 Buffers: shared hit=1 Planning Time: 0.068 ms Execution Time: 0.058 ms pg17=# explain (analyze, buffers, costs off) SELECT count(*) FROM employees WHERE name IS NOT NULL; QUERY PLAN ----------------------------------------------------------------------- Aggregate (actual time=0.021..0.022 rows=1 loops=1) Buffers: shared hit=1 -> Seq Scan on employees (actual time=0.008..0.015 rows=8 loops=1) Filter: (name IS NOT NULL) Rows Removed by Filter: 2 Buffers: shared hit=1 Planning Time: 0.038 ms Execution Time: 0.039 ms (8 rows)

- 18. Execution Plans with NOT NULL Constraint pg17=# ALTER TABLE employees ALTER COLUMN name SET NOT NULL; ALTER TABLE pg17=# pg17=# explain (analyze, buffers, costs off) SELECT count(*) FROM employees WHERE name IS NULL; QUERY PLAN -------------------------------------------------------- Aggregate (actual time=0.004..0.004 rows=1 loops=1) -> Result (actual time=0.002..0.002 rows=0 loops=1) One-Time Filter: false Planning: Buffers: shared hit=4 Planning Time: 0.089 ms Execution Time: 0.038 ms (7 rows) pg17=# explain (analyze, buffers, costs off) SELECT count(*) FROM employees WHERE name IS NOT NULL; QUERY PLAN ----------------------------------------------------------------------- Aggregate (actual time=0.014..0.015 rows=1 loops=1) Buffers: shared hit=1 -> Seq Scan on employees (actual time=0.009..0.010 rows=8 loops=1) Buffers: shared hit=1 Planning Time: 0.119 ms Execution Time: 0.028 ms (6 rows)

- 19. Faster BRIN Index Creation ❏ Multi-Worker Support ❏ Parallel Processing ❏ Sorted Summary Streams ❏ Leader Integration ❏ Efficient Resource Use

- 20. Partitioned and distributed workloads enhancements

- 21. Partition Management SPLIT PARTITION - Break large partitions into smaller ones. MERGE PARTITIONS - Merge two or more partitions into one. Note:- Access Exclusive Lock

- 22. Exclusion Constraints on Partitioned Tables Exclusion constraints prevent conflicting data in different partitions of a table. pg17=# CREATE TABLE orders ( order_id serial, customer_id int, order_date date, total_amount numeric, UNIQUE (customer_id) ) PARTITION BY RANGE (order_date); ERROR: unique constraint on partitioned table must include all partitioning columns DETAIL: UNIQUE constraint on table "orders" lacks column "order_date" which is part of the partition key. pg17=# CREATE TABLE orders ( order_id serial, customer_id int, order_date date, total_amount numeric, UNIQUE (order_date) ) PARTITION BY RANGE (order_date); CREATE TABLE pg17=#

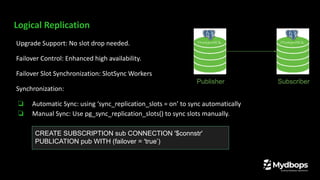

- 23. Logical Replication Upgrade Support: No slot drop needed. Failover Control: Enhanced high availability. Failover Slot Synchronization: SlotSync Workers Synchronization: ❏ Automatic Sync: using ‘sync_replication_slots = on’ to sync automatically ❏ Manual Sync: Use pg_sync_replication_slots() to sync slots manually. Publisher Subscriber CREATE SUBSCRIPTION sub CONNECTION '$connstr' PUBLICATION pub WITH (failover = 'true’)

- 24. Upgrade Logical Replication Nodes ❏ Seamless Upgrades - Migrate replication slots during an upgrade without losing data. ❏ Preserve State - Keeps the replication state unchanged during an upgrade. ❏ Upgrading Subscribers - Allow Write operations on the primary continue during subscriber upgrades. ❏ Version Requirement - All cluster members must run PostgreSQL 17.0+ for migration.

- 25. New Tool: pg_createsubscriber command. ❏ Helps create logical replicas from physical standbys. ❏ The pg_createsubscriber command speeds up the setup of logical subscribers. ❏ Simplifies the process of upgrading PostgreSQL instances. New Columns in ‘pg_replication_slots’ ❏ inactive_since :- Tracks when a replication slot became inactive. ❏ Invalidation_reason :- Indicates the reason for invalidation of replication slots.

- 26. Postgres 16 Postgres 17 pg16=# SELECT * FROM pg_replication_slots WHERE slot_name = 'mydbops_testing_slot'; -[ RECORD 1 ]----------+--------------------- slot_name | mydbops_testing_slot plugin | slot_type | physical datoid | database | temporary | f active | f active_pid | xmin | catalog_xmin | restart_lsn | confirmed_flush_lsn | wal_status | safe_wal_size | two_phase | f conflicting | pg17=# SELECT * FROM pg_replication_slots WHERE slot_name = 'mydbops_testing_slot'; -[ RECORD 1 ]-------+------------------------------ slot_name | mydbops_testing_slot plugin | slot_type | physical datoid | database | temporary | f active | f active_pid | xmin | catalog_xmin | restart_lsn | confirmed_flush_lsn | wal_status | safe_wal_size | two_phase | f inactive_since | 2024-08-20 04:29:36.446812+00 conflicting | invalidation_reason | failover | f synced | f

- 28. MERGE Command Handling unmatched rows with the WHEN NOT MATCHED BY SOURCE clause. Returning detailed actions with the RETURNING clause and merge_action(). - merge_action Function: report the DML that generated the row. Views must be consistent for MERGE ❏ Trigger-updatable views need INSTEAD OF triggers for actions (INSERT, UPDATE, DELETE). ❏ Auto-updatable views should not have any triggers. MERGE

- 29. Table product_catalog Table price_updates pg17=# CREATE TABLE product_catalog ( pg17(# product_id BIGINT, pg17(# product_name VARCHAR(100), pg17(# price DECIMAL(10, 2), pg17(# last_updated TIMESTAMP, pg17(# status TEXT pg17(# ); CREATE TABLE pg17=# pg17=# INSERT INTO product_catalog (product_id, product_name, price, last_updated, status) pg17-# VALUES pg17-# (1, 'Laptop', 1000.00, '2024-08-01 12:00:00', 'active'), pg17-# (2, 'Smartphone', 500.00, '2024-08-01 12:00:00', 'active'), pg17-# (3, 'Tablet', 300.00, '2024-08-01 12:00:00', 'active'), pg17-# (4, 'Smartwatch', 200.00, '2024-08-01 12:00:00', 'active'); INSERT 0 4 pg17=# CREATE TABLE price_updates ( pg17(# product_id BIGINT, pg17(# new_price DECIMAL(10, 2), pg17(# update_type VARCHAR(10) pg17(# ); CREATE TABLE pg17=# pg17=# INSERT INTO price_updates (product_id, new_price, update_type) pg17-# VALUES pg17-# (1, 950.00, 'decrease'), pg17-# (2, 550.00, 'increase'), pg17-# (5, 250.00, 'new'), pg17-# (3, NULL, 'discontinue'); INSERT 0 4 pg17=#

- 30. pg17=# WITH merge AS ( pg17(# MERGE INTO product_catalog trg pg17(# USING price_updates src pg17(# ON src.product_id = trg.product_id pg17(# WHEN MATCHED AND src.update_type = 'increase' pg17(# THEN UPDATE pg17(# SET price = src.new_price, pg17(# last_updated = CURRENT_TIMESTAMP, pg17(# status = 'price increased' pg17(# WHEN MATCHED AND src.update_type = 'decrease' pg17(# THEN UPDATE pg17(# SET price = src.new_price, pg17(# last_updated = CURRENT_TIMESTAMP, pg17(# status = 'price decreased' pg17(# WHEN MATCHED AND src.update_type = 'discontinue' pg17(# THEN UPDATE pg17(# SET status = 'discontinued', pg17(# last_updated = CURRENT_TIMESTAMP pg17(# WHEN NOT MATCHED BY TARGET AND src.update_type = 'new' pg17(# THEN INSERT (product_id, product_name, price, last_updated, status) pg17(# VALUES (src.product_id, 'Unknown Product', src.new_price, CURRENT_TIMESTAMP, 'new product') pg17(# RETURNING merge_action(), trg.* pg17(# ) pg17-# SELECT * FROM merge; merge_action | product_id | product_name | price | last_updated | status --------------------+-----------------+--------------------------+----------------------------------------------------+----------------------- UPDATE | 1 | Laptop | 950.00 | 2024-08-19 21:47:23.507675 | price decreased UPDATE | 2 | Smartphone | 550.00 | 2024-08-19 21:47:23.507675 | price increased INSERT | 5 | Unknown Product | 250.00 | 2024-08-19 21:47:23.507675 | new product UPDATE | 3 | Tablet | 300.00 | 2024-08-19 21:47:23.507675 | discontinued (4 rows)

- 31. COPY Improvements ❏ Managing data type incompatibilities using ON_ERROR options - ignore(text, csv), stop ❏ Enhanced logging using LOG_VERBOSITY - Handle mismatched data types. - New attribute added ‘tuples_skipped’ in pg_stat_progress_copy view

- 32. root@ip-172-31-3-170:/var/log/csv_test# cat /var/log/csv_test/data_issues.csv id,name,age,email,is_active,signup_date,last_login 1,Rahul Sharma,30,[email protected],true,2024-01-01,2024-01-01 12:00:00 2,Neha Verma,-1,[email protected],false,2024-01-02,2024-01-02 12:00:00 3,,abc,[email protected],true,2024-01-03,2024-01-03 12:00:00 4,Amit Patel,40,[email protected],maybe,2024-01-03,2024-01-03 12:00:00 5,Pooja Singh,25,[email protected],true,2024-01-04,2024-01-04 12:00:00 root@ip-172-31-3-170:/var/log/csv_test# pg17=# SELECT * FROM example3; id | name | age | email | is_active | signup_date | last_login ----+------+-----+-------+-----------+-------------+------------ (0 rows) pg17=# pg17=# copy example3 FROM '/var/log/csv_test/data_issues.csv' (ON_ERROR ignore, LOG_VERBOSITY verbose, FORMAT csv, HEADER); NOTICE: skipping row due to data type incompatibility at line 4 for column age: "abc" NOTICE: skipping row due to data type incompatibility at line 5 for column is_active: "maybe" NOTICE: 2 rows were skipped due to data type incompatibility COPY 3 pg17=# SELECT * FROM example3; id | name | age | email | is_active | signup_date | last_login ----+----------------------+-----+-----------------------------------------+-----------+---------------------+--------------------- 1 | Rahul Sharma | 30 | [email protected] | t | 2024-01-01 | 2024-01-01 12:00:00 2 | Neha Verma | 1 | [email protected] | f | 2024-01-02 | 2024-01-02 12:00:00 5 | Pooja Singh | 25 | [email protected] | t | 2024-01-04 | 2024-01-04 12:00:00 (3 rows)

- 33. JSON_TABLE: Convert JSON to table. Converting JSON data into a table format pg17=# CREATE TABLE customer_orders (order_data jsonb); CREATE TABLE pg17=# INSERT INTO customer_orders VALUES ( '{ "orders": [ { "product": "Laptop", "quantity": 2 }, { "product": "Mouse", "quantity": 5 } ] }' ); INSERT 0 1 pg17=# SELECT jt.* FROM customer_orders, JSON_TABLE(order_data, '$.orders[*]' pg17(# COLUMNS ( id FOR ORDINALITY, product text PATH '$.product', quantity int PATH '$.quantity' )) AS jt; id | product | quantity ----+---------+---------- 1 | Laptop | 2 2 | Mouse | 5 (2 rows) pg17=# SQL/JSON Enhancements

- 34. New Functions: JSON constructor - JSON(): Converts a string into a JSON value. - JSON_SERIALIZE(): Converts a JSON value into a binary format. - JSON_SCALAR(): Converts a simple value into JSON format. SELECT JSON('{"setting": "high", "volume": 10, "mute": false}'); {"setting": "high", "volume": 10, "mute": false} SELECT JSON_SCALAR(99.99); 99.99 SELECT JSON_SERIALIZE('{"user": "Alice", "age": 30}' RETURNING bytea); x7b2275736572223a2022416c696365222c2022616765223a2033307d

- 35. JSON Query Functions - JSON_EXISTS(): Checks if a specific path exists in JSON - JSON_QUERY(): Extracts a part of the JSON data. - JSON_VALUE(): Extracts a single value from JSON. SELECT JSON_EXISTS(jsonb '{"user": "Alice", "roles": ["admin", "user"]}', '$.roles[?(@ == "admin")]'); t(true) SELECT JSON_QUERY(jsonb '{"user": "Bob", "roles": ["user", "editor"]}', '$.roles'); ["user", "editor"] SELECT JSON_VALUE(jsonb '{"user": "Charlie", "age": 25}', '$.user'); “Charlie”

- 37. Reduced Need for Superuser Privileges pg_maintain Role (VACUUM, ANALYZE, CLUSTER, REFRESH MATERIALIZED VIEW, REINDEX, and LOCK TABLE on all relations.) - Allows users to perform maintenance tasks like VACUUM and REINDEX without superuser access. Safe Maintenance Operations - Grants specific users maintenance permissions on individual tables. GRANT pg_maintain TO specific_user; GRANT MAINTAIN ON TABLE specific_table TO specific_user;

- 38. pg_maintain Role pg17=# create role mydbops; CREATE ROLE pg17=# set role mydbops; SET pg17=> pg17=> dt List of relations Schema | Name | Type | Owner --------+----------------------------+-------+---------- public | customer_orders | table | postgres public | test_17 | table | postgres (2 rows) pg17=> vacuum test_17; WARNING: permission denied to vacuum "test_17", skipping it VACUUM pg17=# GRANT pg_maintain TO mydbops; GRANT ROLE pg17=# set role mydbops; SET pg17=> vacuum test_17; VACUUM pg17=>

- 39. MAINTAIN Privilege pg17=# GRANT MAINTAIN ON TABLE role_test TO mydbops; GRANT pg17=# set role mydbops; SET pg17=> pg17=> dt List of relations Schema | Name | Type | Owner --------+----------------------------+--------+---------- public | customer_orders | table | postgres public | role_test | table | postgres public | test_17 | table | postgres (3 rows) pg17=> vacuum customer_orders; WARNING: permission denied to vacuum "customer_orders", skipping it VACUUM pg17=> pg17=> vacuum role_test; VACUUM pg17=>

- 40. Improved ALTER SYSTEM Command: ALTER SYSTEM Enhancements ❏ Control Over ALTER SYSTEM - allow_alter_system

- 41. Faster TLS Connections ● Direct TLS Setup: - sslnegotiation=direct option, ● ALPN Requirement ● Only works on PostgreSQL 17 and newer versions.

- 42. Backup and Export Management

- 43. Incremental pg_basebackup ❏ Efficient for Large Databases ❏ Using pg_basebackup --incremental ❏ Tracking Changes with WAL - summarize_wal ❏ Restoration with pg_combinebackup

- 44. l Enable summarize_wal Taken Full backup Taken --incremental backup using pg_basebackup Scenario pg17=# show summarize_wal; summarize_wal --------------- on postgres@ip-172-31-3-170:/var/log$ pg_basebackup -D /var/log/full_bck17 -p 5433 -U postgres postgres@ip-172-31-3-170:/var/log$ pg_basebackup -D incr_bck17 -p 5433 -U postgres --incremental=/var/log/full_bck17/backup_manifest -v

- 45. Taken full backup including incremental backup using pg_combinebackup postgres@ip-172-31-3-170:/var/log$ /usr/lib/postgresql/17/bin/pg_combinebackup --output=combine_incr_full_bck full_bck17 incr_bck17 postgres@ip-172-31-3-170:/var/log/combine_incr_full_bck$ tail -10 backup_manifest { "Path": "global/6303", "Size": 16384, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum": "e8d85722" }, { "Path": "global/6245", "Size": 8192, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum": "21597da0" }, { "Path": "global/6115", "Size": 8192, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum": "b5a46221" }, { "Path": "global/6246", "Size": 8192, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum": "865872e0" }, { "Path": "global/6243", "Size": 0, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum": "00000000" } ], "WAL-Ranges": [ { "Timeline": 1, "Start-LSN": "2/B000028", "End-LSN": "2/B000120" } ], "Manifest-Checksum": "0f16e87ab7bd400434c2725cf468d77bbcb702a49d38d749cd0e5af47f8f5fac"} postgres@ip-172-31-3-170:/var/log/combine_incr_full_bck$

- 46. Now started the PostgreSQL server using the newly restored data directory. postgres@ip-172-31-3-170:/home/mydbops$ /usr/lib/postgresql/17/bin/pg_ctl -D /var/lib/postgresql/17 -l logfile stop waiting for server to shut down.... done server stopped postgres@ip-172-31-3-170:/var/log/combine_incr_full_bck$ /usr/lib/postgresql/17/bin/pg_ctl -D /var/log/combine_incr_full_bck -l logfile start waiting for server to start.... done server started postgres@ip-172-31-3-170:/var/log/combine_incr_full_bck$ psql -hlocalhost -p 5433 -d postgres psql (17beta3) Type "help" for help. postgres=# c pg17 You are now connected to database "pg17" as user "postgres". pg17=# dt List of relations Schema | Name | Type | Owner -------------+--------------------------+-------+---------- public | customer_orders | table | postgres public | incrment_test | table | postgres public | role_test | table | postgres public | test_17 | table | postgres (4 rows)

- 47. Monitoring

- 48. EXPLAIN Command Enhancements ❏ SERIALIZE: Network data conversion time. ❏ MEMORY: Optimizer memory usage. pg17=# EXPLAIN (ANALYZE, SERIALIZE, MEMORY, BUFFERS) pg17-# SELECT * FROM test_table_17 WHERE data LIKE '%data%'; QUERY PLAN ----------------------------------------------------------------------------------------------------------------- Seq Scan on test_table_17 (cost=0.00..189.00 rows=9999 width=20) (actual time=0.031..2.401 rows=10000 loops=1) Filter: (data ~~ '%data%'::text) Buffers: shared hit=64 Planning: Buffers: shared hit=17 Memory: used=9kB allocated=16kB Planning Time: 0.155 ms Serialization: time=2.028 ms output=291kB format=text Execution Time: 5.526 ms (9 rows)

- 49. VACUUM Progress Reporting ❏ Indexes_total: Total indexes on the table being vacuumed ❏ Indexes_processed: Shows how many indexes have been already vacuumed select * from pg_stat_progress_vacuum; -[ RECORD 1 ]--------+------------------ pid | 7454 datid | 16389 datname | pg17 relid | 16394 phase | vacuuming indexes heap_blks_total | 88496 heap_blks_scanned | 88496 heap_blks_vacuumed | 0 index_vacuum_count | 0 max_dead_tuple_bytes| 67108864 dead_tuple_bytes | 2556928 num_dead_item_ids | 10000000 indexes_total | 1 indexes_processed | 0

- 50. pg_wait_events View - Shows wait event details and description. pg17=# SELECT * FROM pg_wait_events; -[ RECORD 1 ]----------------------------------------------------------------------------------------------------------------------------- type | Activity name | AutovacuumMain description | Waiting in main loop of autovacuum launcher process

- 51. Pg_stat_checkpointer ❏ Displays statistics related to the checkpointer process. ❏ Replaced some of the old stats found in pg_stat_bgwriter. pg17=# SELECT * FROM pg_stat_checkpointer; -[ RECORD 1 ]-------+------------------------------ num_timed | 345 num_requested | 21 restartpoints_timed | 0 restartpoints_req | 0 restartpoints_done | 0 write_time | 1192795 sync_time | 1868 buffers_written | 56909 stats_reset | 2024-08-14 10:29:39.606191+00 pg17=#

- 52. Pg_stat_statements CALL Normalization: Fewer entries for procedures. pg16=# SELECT userid, dbid, toplevel, queryid, query, total_exec_time, min_exec_time, max_exec_time, mean_exec_time, stddev_exec_time FROM pg_stat_statements WHERE query LIKE 'CALL update_salary%'; -[ RECORD 1 ]-----+------------------------------- userid | 10 dbid | 16384 toplevel | t queryid | -6663051425227154753 query | CALL update_salary('HR', 1200) total_exec_time | 0.10497 min_exec_time | 0.10497 max_exec_time | 0.10497 mean_exec_time | 0.10497 stddev_exec_time | 0 -[ RECORD 2 ]----+------------------------------- pg17=# SELECT userid, dbid, toplevel, queryid, query, total_exec_time, min_exec_time, max_exec_time, mean_exec_time, stddev_exec_time, minmax_stats_since, stats_since FROM pg_stat_statements WHERE query LIKE 'CALL update_salary%'; -[ RECORD 1 ]------+------------------------------ userid | 10 dbid | 16389 toplevel | t queryid | 3335012495428089609 query | CALL update_salary($1, $2) total_exec_time | 1.957936 min_exec_time | 0.06843 max_exec_time | 1.694025 mean_exec_time | 0.489484 stddev_exec_time | 0.6955936256612045 minmax_stats_since | 2024-08-20 14:19:29.726603+00 stats_since | 2024-08-20 14:19:29.726603+00 pg17=# Postgres 16 Postgres 17

- 53. Removed old_snapshot_threshold Parameter ● Behavior on Querying Old Data - "Snapshot too old" error ● Reason for Removal

- 54. Any Questions ?

- 56. Consulting Services Consulting Services Connect with us ! Reach us at : [email protected]

- 57. Thank you!

![Postgres 17

Postgres 16

-[ RECORD 1 ]------+---------------

pid | 21830

datid | 16384

datname | pg16

relid | 16389

phase | vacuuming heap

heap_blks_total | 88496

heap_blks_scanned | 88496

heap_blks_vacuumed| 7478

index_vacuum_count | 1

max_dead_tuples | 11184809

num_dead_tuples | 10000000

-[ RECORD 1 ]--------+---------------

pid | 21971

datid | 16389

datname | pg17

relid | 16468

phase | vacuuming heap

heap_blks_total | 88496

heap_blks_scanned | 88496

heap_blks_vacuumed | 27040

index_vacuum_count | 0

max_dead_tuple_byte | 67108864

dead_tuple_bytes | 2556928

num_dead_item_ids | 10000000

indexes_total | 0

indexes_processed | 0](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-8-320.jpg)

![Postgres 16 Postgres 17

2024-08-19 10:49:17.351 UTC [21830] LOG: automatic vacuum of table

"pg16.public.test_16": index scans: 1

pages: 0 removed, 88496 remain, 88496 scanned (100.00% of total)

tuples: 3180676 removed, 10000000 remain, 0 are dead but not

yet removable

removable cutoff: 747, which was 0 XIDs old when operation ended

new relfrozenxid: 745, which is 2 XIDs ahead of previous value

frozen: 0 pages from table (0.00% of total) had 0 tuples frozen

index scan needed: 44248 pages from table (50.00% of total) had

10000000 dead item identifiers removed

index "test_16_id_idx": pages: 54840 in total, 0 newly deleted, 0

currently deleted, 0 reusable

avg read rate: 24.183 MB/s, avg write rate: 22.317 MB/s

buffer usage: 119566 hits, 156585 misses, 144501 dirtied

WAL usage: 201465 records, 60976 full page images, 233670948

bytes

system usage: CPU: user: 9.04 s, system: 3.56 s, elapsed: 50.58 s

2024-08-19 10:51:54.081 UTC [21959] LOG: automatic vacuum of table

"pg17.public.test_17": index scans: 0

pages: 0 removed, 76831 remain, 76831 scanned (100.00% of total)

tuples: 0 removed, 10000000 remain, 0 are dead but not yet

removable

removable cutoff: 827, which was 1 XIDs old when operation ended

new relfrozenxid: 825, which is 1 XIDs ahead of previous value

frozen: 0 pages from table (0.00% of total) had 0 tuples frozen

index scan not needed: 0 pages from table (0.00% of total) had 0

dead item identifiers removed

avg read rate: 34.779 MB/s, avg write rate: 19.580 MB/s

buffer usage: 79090 hits, 74631 misses, 42016 dirtied

WAL usage: 4969 records, 2 full page images, 309554 bytes

system usage: CPU: user: 2.71 s, system: 1.14 s, elapsed: 16.76 s](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-9-320.jpg)

![Postgres 16

Postgres 17

pg16=# EXPLAIN (ANALYZE, BUFFERS) SELECT * FROM test_16 WHERE id IN (1, 2, 3, 4, 45, 6, 10007);

QUERY PLAN

------------------------------------------------------------------------------------------------------------------------------

Index Only Scan using test_16_id_idx on test_16 (cost=0.43..31.17 rows=7 width=4) (actual time=0.052..1.277 rows=7 loops=1)

Index Cond: (id = ANY ('{1,2,3,4,45,6,10007}'::integer[]))

Heap Fetches: 0

Buffers: shared hit=24 read=1

Planning:

Buffers: shared hit=61

Planning Time: 0.401 ms

Execution Time: 1.293 ms

(8 rows)

pg17=# EXPLAIN (ANALYZE, BUFFERS) SELECT * FROM test_17 WHERE id IN (1, 2, 3, 4, 45, 6, 10007);

QUERY PLAN

------------------------------------------------------------------------------------------------------------------------------

Index Only Scan using test_17_id_idx on test_17 (cost=0.43..31.17 rows=7 width=4) (actual time=0.056..0.542 rows=7 loops=1)

Index Cond: (id = ANY ('{1,2,3,4,45,6,10007}'::integer[]))

Heap Fetches: 0

Buffers: shared hit=6 read=1

Planning:

Buffers: shared hit=70

Planning Time: 0.452 ms

Execution Time: 0.564 ms

(8 rows)](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-15-320.jpg)

![Postgres 16 Postgres 17

pg16=# SELECT * FROM pg_replication_slots WHERE

slot_name = 'mydbops_testing_slot';

-[ RECORD 1 ]----------+---------------------

slot_name | mydbops_testing_slot

plugin |

slot_type | physical

datoid |

database |

temporary | f

active | f

active_pid |

xmin |

catalog_xmin |

restart_lsn |

confirmed_flush_lsn |

wal_status |

safe_wal_size |

two_phase | f

conflicting |

pg17=# SELECT * FROM pg_replication_slots WHERE

slot_name = 'mydbops_testing_slot';

-[ RECORD 1 ]-------+------------------------------

slot_name | mydbops_testing_slot

plugin |

slot_type | physical

datoid |

database |

temporary | f

active | f

active_pid |

xmin |

catalog_xmin |

restart_lsn |

confirmed_flush_lsn |

wal_status |

safe_wal_size |

two_phase | f

inactive_since | 2024-08-20 04:29:36.446812+00

conflicting |

invalidation_reason |

failover | f

synced | f](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-26-320.jpg)

![JSON_TABLE: Convert JSON to table.

Converting JSON data into a table format

pg17=# CREATE TABLE customer_orders (order_data jsonb);

CREATE TABLE

pg17=# INSERT INTO customer_orders VALUES ( '{ "orders": [ { "product": "Laptop", "quantity": 2 }, { "product": "Mouse",

"quantity": 5 } ] }' );

INSERT 0 1

pg17=# SELECT jt.* FROM customer_orders, JSON_TABLE(order_data, '$.orders[*]'

pg17(# COLUMNS ( id FOR ORDINALITY, product text PATH '$.product', quantity int PATH '$.quantity' )) AS jt;

id | product | quantity

----+---------+----------

1 | Laptop | 2

2 | Mouse | 5

(2 rows)

pg17=#

SQL/JSON Enhancements](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-33-320.jpg)

![JSON Query Functions

- JSON_EXISTS(): Checks if a specific path exists in JSON

- JSON_QUERY(): Extracts a part of the JSON data.

- JSON_VALUE(): Extracts a single value from JSON.

SELECT JSON_EXISTS(jsonb '{"user": "Alice", "roles": ["admin", "user"]}', '$.roles[?(@ == "admin")]');

t(true)

SELECT JSON_QUERY(jsonb '{"user": "Bob", "roles": ["user", "editor"]}', '$.roles');

["user", "editor"]

SELECT JSON_VALUE(jsonb '{"user": "Charlie", "age": 25}', '$.user');

“Charlie”](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-35-320.jpg)

![Taken full backup including incremental backup using pg_combinebackup

postgres@ip-172-31-3-170:/var/log$ /usr/lib/postgresql/17/bin/pg_combinebackup --output=combine_incr_full_bck

full_bck17 incr_bck17

postgres@ip-172-31-3-170:/var/log/combine_incr_full_bck$ tail -10 backup_manifest

{ "Path": "global/6303", "Size": 16384, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum":

"e8d85722" },

{ "Path": "global/6245", "Size": 8192, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum":

"21597da0" },

{ "Path": "global/6115", "Size": 8192, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum":

"b5a46221" },

{ "Path": "global/6246", "Size": 8192, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum":

"865872e0" },

{ "Path": "global/6243", "Size": 0, "Last-Modified": "2024-08-18 13:26:43 GMT", "Checksum-Algorithm": "CRC32C", "Checksum":

"00000000" }

],

"WAL-Ranges": [

{ "Timeline": 1, "Start-LSN": "2/B000028", "End-LSN": "2/B000120" }

],

"Manifest-Checksum": "0f16e87ab7bd400434c2725cf468d77bbcb702a49d38d749cd0e5af47f8f5fac"}

postgres@ip-172-31-3-170:/var/log/combine_incr_full_bck$](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-45-320.jpg)

![VACUUM Progress Reporting

❏ Indexes_total: Total indexes on the table being vacuumed

❏ Indexes_processed: Shows how many indexes have been already vacuumed

select * from pg_stat_progress_vacuum;

-[ RECORD 1 ]--------+------------------

pid | 7454

datid | 16389

datname | pg17

relid | 16394

phase | vacuuming indexes

heap_blks_total | 88496

heap_blks_scanned | 88496

heap_blks_vacuumed | 0

index_vacuum_count | 0

max_dead_tuple_bytes| 67108864

dead_tuple_bytes | 2556928

num_dead_item_ids | 10000000

indexes_total | 1

indexes_processed | 0](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-49-320.jpg)

![pg_wait_events View

- Shows wait event details and description.

pg17=# SELECT * FROM pg_wait_events;

-[ RECORD 1

]-----------------------------------------------------------------------------------------------------------------------------

type | Activity

name | AutovacuumMain

description | Waiting in main loop of autovacuum launcher process](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-50-320.jpg)

![Pg_stat_checkpointer

❏ Displays statistics related to the checkpointer process.

❏ Replaced some of the old stats found in pg_stat_bgwriter.

pg17=# SELECT * FROM pg_stat_checkpointer;

-[ RECORD 1 ]-------+------------------------------

num_timed | 345

num_requested | 21

restartpoints_timed | 0

restartpoints_req | 0

restartpoints_done | 0

write_time | 1192795

sync_time | 1868

buffers_written | 56909

stats_reset | 2024-08-14 10:29:39.606191+00

pg17=#](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-51-320.jpg)

![Pg_stat_statements

CALL Normalization: Fewer entries for procedures.

pg16=# SELECT userid, dbid, toplevel, queryid, query,

total_exec_time, min_exec_time, max_exec_time,

mean_exec_time, stddev_exec_time FROM

pg_stat_statements WHERE query LIKE 'CALL

update_salary%';

-[ RECORD 1 ]-----+-------------------------------

userid | 10

dbid | 16384

toplevel | t

queryid | -6663051425227154753

query | CALL update_salary('HR', 1200)

total_exec_time | 0.10497

min_exec_time | 0.10497

max_exec_time | 0.10497

mean_exec_time | 0.10497

stddev_exec_time | 0

-[ RECORD 2 ]----+-------------------------------

pg17=# SELECT userid, dbid, toplevel, queryid, query,

total_exec_time, min_exec_time, max_exec_time,

mean_exec_time, stddev_exec_time, minmax_stats_since,

stats_since FROM pg_stat_statements WHERE query LIKE

'CALL update_salary%';

-[ RECORD 1 ]------+------------------------------

userid | 10

dbid | 16389

toplevel | t

queryid | 3335012495428089609

query | CALL update_salary($1, $2)

total_exec_time | 1.957936

min_exec_time | 0.06843

max_exec_time | 1.694025

mean_exec_time | 0.489484

stddev_exec_time | 0.6955936256612045

minmax_stats_since | 2024-08-20 14:19:29.726603+00

stats_since | 2024-08-20 14:19:29.726603+00

pg17=#

Postgres 16 Postgres 17](https://image.slidesharecdn.com/whatsnewinpostgresql17-240826042706-f72fe239/85/What-s-New-in-PostgreSQL-17-Mydbops-MyWebinar-Edition-35-52-320.jpg)