What We Learned Building an R-Python Hybrid Predictive Analytics Pipeline

- 1. What We Learned Building an R-Python Hybrid Analytics Pipeline. Niels Bantilan, Pegged Software. NY R Conference, April 8th, 2016.

- 2. Pegged Software’s Mission: Help healthcare organizations recruit better.

- 3. Core Activities:
  - Build, evaluate, refine, and deploy predictive models
  - Work with Engineering to ingest, validate, and store data
  - Work with Product Management to develop data-driven feature sets

- 4. How might we build a predictive analytics pipeline that is reproducible, maintainable, and statistically rigorous?

- 5. Anchor Yourself to Problem Statements / Use Cases. Problem-solving heuristic:
  1. Define the problem statement
  2. Scope out the solution space and trade-offs
  3. Make a decision, justify it, and document it
  4. Implement the chosen solution
  5. Evaluate the working solution against the problem statement
  6. Rinse and repeat

- 6. R-Python Pipeline: Read Data → Preprocess → Build Model → Evaluate → Deploy
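The five stages can be read as a chain of functions, where each stage consumes the previous stage's output. Below is a minimal Python sketch of that control flow. `run_pipeline` and the stage functions are hypothetical stand-ins with toy data. In the talk's design, model building and evaluation would delegate to R.

```python
from typing import Any, Callable, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]

def run_pipeline(stages: List[Stage], initial: Any) -> Tuple[Any, List[str]]:
    """Apply each stage in order, feeding each stage's output into the next.

    Returns the final output and the names of the stages that ran.
    """
    completed: List[str] = []
    value = initial
    for name, stage in stages:
        value = stage(value)
        completed.append(name)
    return value, completed

# Hypothetical stand-ins for the real stages (toy data, not real results)
EXAMPLE_STAGES: List[Stage] = [
    ("read_data", lambda _path: [1.0, 2.0, None, 4.0]),            # pretend to read a file
    ("preprocess", lambda rows: [r for r in rows if r is not None]),  # drop missing values
    ("build_model", lambda rows: {"mean": sum(rows) / len(rows)}),    # toy "model"
    ("evaluate", lambda model: {**model, "evaluated": True}),
    ("deploy", lambda model: model),                                  # no-op placeholder
]
```

Calling `run_pipeline(EXAMPLE_STAGES, "data.csv")` runs all five stages in order. Keeping each stage a plain function makes it easy to swap one stage for a call into R without touching the others.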

- 7. Data Science Stack

| Need | R | Python |
|---|---|---|
| Maintainable codebase | Git | Git |
| Sync package dependencies | Packrat | Pip, Pyenv |
| Call R from Python | - | subprocess |
| Automated testing | testthat | nose |
| Reproducible pipeline | Makefile | Makefile |

- 9. Why Git? Because version control gives us:
  - Code quality
  - Incremental knowledge transfer
  - A sanity check

- 11. Dependency Management: Why Pip + Pyenv?
  1. Easily sync Python package dependencies
  2. Easily manage multiple Python versions
  3. Create and manage virtual environments
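Syncing dependencies usually means sharing a pinned list, such as a `requirements.txt` produced by `pip freeze`. A teammate can then check their environment against it. Below is a minimal sketch of that check. It assumes exact `name==version` pins only; `find_mismatches` is a hypothetical helper, and `importlib.metadata` needs Python 3.8+.

```python
from importlib import metadata
from typing import Callable, Iterable, List, Optional, Tuple

def _installed_version(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None

def find_mismatches(
    requirement_lines: Iterable[str],
    lookup: Callable[[str], Optional[str]] = _installed_version,
) -> List[Tuple[str, str, Optional[str]]]:
    """Compare exact 'name==version' pins with installed versions.

    Comments, blank lines, and non-exact specifiers (>=, ~=, ...) are skipped.
    Returns (name, pinned_version, installed_version_or_None) for each mismatch.
    """
    mismatches = []
    for raw in requirement_lines:
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if "==" not in line:
            continue
        name, pinned = (part.strip() for part in line.split("==", 1))
        installed = lookup(name)
        if installed != pinned:
            mismatches.append((name, pinned, installed))
    return mismatches
```

For example, `find_mismatches(open("requirements.txt"))` lists every pin that differs from the active environment. The `lookup` parameter lets the comparison run against a fake environment in tests.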

- 12. Dependency Management: Why Packrat (from RStudio)?
  1. Isolated: separate the system environment from the repo environment
  2. Portable: easily sync dependencies across the data science team
  3. Reproducible: easily add, remove, upgrade, or downgrade packages as needed

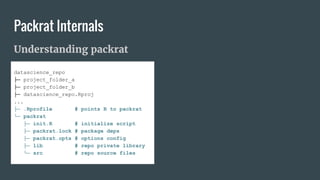

- 13. Packrat Internals: understanding the layout packrat adds to a repo
  - `datascience_repo/`
    - `project_folder_a/`
    - `project_folder_b/`
    - `datascience_repo.Rproj`
    - ...
    - `.Rprofile`: points R to packrat
    - `packrat/`
      - `init.R`: initialization script
      - `packrat.lock`: package dependencies
      - `packrat.opts`: options config
      - `lib/`: the repo's private package library
      - `src/`: package source files for the repo

- 14. Packrat Internals: `packrat.lock` records package versions and dependencies (it lives at `datascience_repo/packrat/packrat.lock`). Excerpt:
  - `PackratFormat: 1.4`
  - `PackratVersion: 0.4.6.1`
  - `RVersion: 3.2.3`
  - `Repos: CRAN=https://cran.rstudio.com/`
  - ...
  - `Package: ggplot2`
  - `Source: CRAN`
  - `Version: 2.0.0`
  - `Hash: 5befb1e7a9c7d0692d6c35fa02a29dbf`
  - `Requires: MASS, digest, gtable, plyr, reshape2, scales`
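`packrat.lock` is plain text made of `Key: Value` fields: a header record followed by one record per package. That makes it easy to inspect from the Python side of the pipeline, for example to report locked versions in CI. Below is a minimal sketch. It assumes records are separated by blank lines and that long values wrap onto indented continuation lines, the layout of R's DCF files. `parse_lockfile` and `package_versions` are hypothetical helpers, not part of packrat.

```python
from typing import Dict, List, Optional

def parse_lockfile(text: str) -> List[Dict[str, str]]:
    """Split lockfile text into blank-line-separated records of 'Key: Value' fields.

    Lines starting with whitespace continue the previous field's value.
    """
    records: List[Dict[str, str]] = []
    current: Dict[str, str] = {}
    last_key: Optional[str] = None
    for line in text.splitlines():
        if not line.strip():
            # A blank line closes the current record
            if current:
                records.append(current)
            current, last_key = {}, None
        elif line[0].isspace() and last_key is not None:
            current[last_key] += " " + line.strip()
        else:
            # Split on the first colon only, so values like URLs stay intact
            key, _, value = line.partition(":")
            last_key = key.strip()
            current[last_key] = value.strip()
    if current:
        records.append(current)
    return records

def package_versions(records: List[Dict[str, str]]) -> Dict[str, str]:
    """Map each package name to its locked version."""
    return {r["Package"]: r.get("Version", "") for r in records if "Package" in r}
```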

- 15. Packrat Internals: `packrat.opts` holds project-specific configuration (it lives at `datascience_repo/packrat/packrat.opts`):
  - `auto.snapshot: TRUE`
  - `use.cache: FALSE`
  - `print.banner.on.startup: auto`
  - `vcs.ignore.lib: TRUE`
  - `vcs.ignore.src: TRUE`
  - `load.external.packages.on.startup: TRUE`
  - `quiet.package.installation: TRUE`
  - `snapshot.recommended.packages: FALSE`

- 16. Packrat Workflow
  - Initialize packrat with `packrat::init()`
  - Toggle packrat in an R session with `packrat::on()` / `packrat::off()`
  - Save the current state of the project with `packrat::snapshot()`
  - Reconstitute your project with `packrat::restore()`
  - Remove unused libraries with `packrat::clean()`
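In a Python-driven pipeline or a CI job, the non-interactive packrat calls can be issued through `Rscript -e`. Below is a minimal sketch. It assumes `Rscript` is on the `PATH`, POSIX-style project paths without single quotes, and that `init`, `snapshot`, `restore`, and `clean` each accept a `project` argument (worth checking against your installed packrat version). `packrat_command` and `run_packrat` are hypothetical helpers.

```python
import subprocess
from typing import List

# on()/off() only make sense inside an interactive R session, so they are excluded
ALLOWED_ACTIONS = {"init", "snapshot", "restore", "clean"}

def packrat_command(action: str, project: str = ".", rscript: str = "Rscript") -> List[str]:
    """Build the argv for running packrat::<action>(project = '<project>') via Rscript -e."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported packrat action: {action!r}")
    if "'" in project:
        raise ValueError("project path must not contain single quotes")
    return [rscript, "-e", f"packrat::{action}(project = '{project}')"]

def run_packrat(action: str, project: str = ".", rscript: str = "Rscript") -> None:
    """Run the packrat call, raising CalledProcessError if R exits non-zero."""
    subprocess.run(packrat_command(action, project, rscript), check=True)
```

For example, a fresh checkout could run `run_packrat("restore", "datascience_repo")` before any model is built.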

- 18. Packrat Issues. Problem: unable to find source packages when restoring. This happens when the version recorded in `packrat.lock` is no longer the current version on a package repository such as CRAN (for example, after a new version is released). Example session:
  - `> packrat::restore()`
  - `Installing knitr (1.11) ... FAILED`
  - `Error in getSourceForPkgRecord(pkgRecord, srcDir(project), availablePkgs, : Couldn't find source for version 1.11 of knitr (1.10.5 is current)`

- 19. Packrat Issues: solutions
  - Solution 1: use R’s installation procedure, then snapshot:
    - `> install.packages(<package_name>)`
    - `> packrat::snapshot()`
  - Solution 2: manually download the source file, then restore:
    - `$ wget -P repo/packrat/src <package_source_url>`
    - `> packrat::restore()`
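Solution 2 can also be scripted. CRAN keeps superseded source tarballs under `src/contrib/Archive/<package>/<package>_<version>.tar.gz`, while the current release sits directly under `src/contrib/`. The sketch below builds either URL and downloads the tarball into a directory you choose. The default mirror, the destination directory, and the helper names are assumptions to adapt, not details from the talk.

```python
import os
import urllib.request

def cran_source_url(package: str, version: str,
                    mirror: str = "https://cran.r-project.org",
                    archived: bool = True) -> str:
    """Return the URL of a package source tarball on a CRAN mirror.

    Superseded versions live under src/contrib/Archive/<package>/;
    the current release lives directly under src/contrib/.
    """
    tarball = f"{package}_{version}.tar.gz"
    base = mirror.rstrip("/") + "/src/contrib"
    if archived:
        return f"{base}/Archive/{package}/{tarball}"
    return f"{base}/{tarball}"

def download_source(package: str, version: str, dest_dir: str, **url_kwargs) -> str:
    """Download the tarball into dest_dir (created if needed) and return its local path."""
    os.makedirs(dest_dir, exist_ok=True)
    url = cran_source_url(package, version, **url_kwargs)
    local_path = os.path.join(dest_dir, f"{package}_{version}.tar.gz")
    urllib.request.urlretrieve(url, local_path)
    return local_path
```

After the download, run `packrat::restore()` in R as in the slide.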

- 21. Call R from Python: Data Pipeline: Read Data → Preprocess → Build Model → Evaluate → Deploy

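- 22. Call R from Python: Example

```r
# model_builder.R
# Entry point into the R layer: positional arguments arrive from the Python caller
cmdargs <- commandArgs(trailingOnly = TRUE)
data_filepath <- cmdargs[1]
model_type <- cmdargs[2]
formula <- cmdargs[3]

# read.data() and train.model() are project helpers defined elsewhere (not shown)
build.model <- function(data_filepath, model_type, formula) {
  df <- read.data(data_filepath)
  model <- train.model(df, model_type, formula)
  model
}

# Run the build when the script is invoked (persisting the model is not shown)
model <- build.model(data_filepath, model_type, formula)
```

```python
# model_pipeline.py
import subprocess

# data_filepath, model_type and formula are defined earlier in the pipeline.
# Rscript runs an R script non-interactively and forwards trailing arguments to commandArgs();
# check_call raises CalledProcessError if the R script exits with a non-zero status.
subprocess.check_call(['path/to/Rscript',
                       'path/to/model_builder.R',
                       data_filepath, model_type, formula])
```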

- 23. Call R from Python: Why subprocess?
  1. Python handles control flow, data manipulation, and IO
  2. R handles model-building and evaluation computations
  3. A main R script (`model_builder.R`) serves as the entry point into the R layer
  4. There is no need for tight Python-R integration
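The Python layer only needs to launch the R entry point and react to its exit status, so a thin wrapper around `subprocess.run` is usually enough. Below is a minimal sketch, assuming `Rscript` is on the `PATH`; `build_r_command` and `run_r_script` are hypothetical names. Unlike a bare `subprocess.call`, it captures R's stderr and raises when the script exits non-zero, so a failed model build cannot pass silently.

```python
import subprocess
from typing import List, Optional, Sequence

def build_r_command(script_path: str, args: Sequence[str], rscript: str = "Rscript") -> List[str]:
    """Argument vector for running an R script with trailing positional arguments."""
    return [rscript, script_path, *args]

def run_r_script(script_path: str, args: Sequence[str], rscript: str = "Rscript",
                 timeout: Optional[float] = None) -> str:
    """Run the R script and return its stdout; raise RuntimeError on a non-zero exit."""
    cmd = build_r_command(script_path, args, rscript)
    result = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
    if result.returncode != 0:
        raise RuntimeError(
            f"R step failed with exit code {result.returncode}: {result.stderr.strip()}"
        )
    return result.stdout
```

Typical usage is `run_r_script("path/to/model_builder.R", [data_filepath, model_type, formula])`. The arguments are passed as a list rather than a shell string, so a formula such as `y ~ x1 + x2` reaches R as a single argument with no shell quoting.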

- 25. Automated Testing: Tolerance to Change. Are we confident that a modification to the codebase will not silently introduce new bugs?

- 26. Automated Testing, following *Working Effectively with Legacy Code* by Michael Feathers:
  1. Identify change points
  2. Break dependencies
  3. Write tests
  4. Make changes
  5. Refactor
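For step 3, a few unit tests can pin down what a function does today. A later refactor that changes its behavior then fails loudly instead of silently. Below is a minimal sketch in Python's `unittest` style, which nose can also collect and run; on the R side, testthat plays the same role. `fill_missing` is a hypothetical preprocessing helper invented for this example.

```python
import unittest

def fill_missing(values, fill=0.0):
    """Hypothetical preprocessing helper: replace None entries with a fill value."""
    return [fill if v is None else v for v in values]

class TestFillMissing(unittest.TestCase):
    def test_replaces_none_with_default(self):
        self.assertEqual(fill_missing([1.0, None, 3.0]), [1.0, 0.0, 3.0])

    def test_custom_fill_value(self):
        self.assertEqual(fill_missing([None], fill=-1.0), [-1.0])

    def test_leaves_complete_input_unchanged(self):
        self.assertEqual(fill_missing([2.0, 4.0]), [2.0, 4.0])
```

Saved in a file named like `test_preprocess.py`, these tests run with `nosetests` or `python -m unittest`. Once they pass, step 4 (making changes) is protected.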

- 27. Need R Python Maintainable codebase Git Git Sync package dependencies Packrat Pip, Pyenv Call R from Python - subprocess Automated Testing Testthat Nose Reproducible pipeline Makefile Makefile

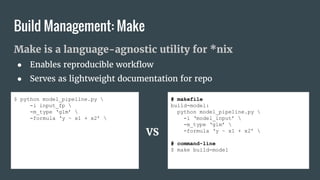

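- 28. Build Management: Make. Make is a language-agnostic utility for Unix-like systems that:
  - Enables a reproducible workflow
  - Serves as lightweight documentation for the repo

```make
# makefile
# build-model is a task name, not a file, so mark it phony
.PHONY: build-model

# Recipe lines must be indented with a tab
build-model:
	python model_pipeline.py -i 'model_input' -m_type 'glm' -formula 'y ~ x1 + x2'
```

```shell
# command-line
$ make build-model
```

vs.

```shell
$ python model_pipeline.py -i input_fp -m_type 'glm' -formula 'y ~ x1 + x2'
```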
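The make target works only if `model_pipeline.py` accepts the flags it passes. Below is a minimal sketch of that interface with `argparse`, assuming the flag names from the slide (`-i`, `-m_type`, `-formula`). The destination names, the `glm` default, and `to_r_args` are hypothetical. argparse accepts single-dash options longer than one character, so the slide's flag style works as written.

```python
import argparse
from typing import List, Optional

def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the pipeline's command-line flags (hypothetical interface)."""
    parser = argparse.ArgumentParser(description="Build a model via the R layer (sketch).")
    parser.add_argument("-i", dest="input_path", required=True,
                        help="path to the model input data")
    parser.add_argument("-m_type", dest="model_type", default="glm",
                        help="model type forwarded to R")
    parser.add_argument("-formula", dest="formula", required=True,
                        help="R model formula, e.g. 'y ~ x1 + x2'")
    return parser.parse_args(argv)

def to_r_args(ns: argparse.Namespace) -> List[str]:
    """Positional arguments in the order model_builder.R reads them via commandArgs()."""
    return [ns.input_path, ns.model_type, ns.formula]
```

The makefile then stays the single documented way to run the build, while the script's `--help` output documents each flag.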

- 29. Big Wins. By adopting the above practices, we:
  1. Can maintain the codebase more easily
  2. Reduce cognitive load and context switching
  3. Improve code quality and correctness
  4. Facilitate knowledge transfer among team members
  5. Encourage reproducible workflows

- 30. Costs: the necessary time investment
  1. The learning curve
  2. Breaking old habits
  3. Creating fixes for issues that come with the chosen solutions

- 31. How might we build a predictive analytics pipeline that is reproducible, maintainable, and statistically rigorous?
