Why you should care about data layout in the file system with Cheng Lian and Vida Ha

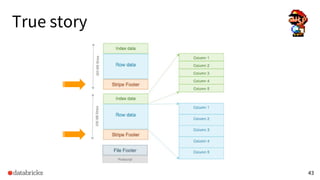

Efficient data access is one of the key factors in a high-performance data processing pipeline, and the way data values are laid out in the file system often has a fundamental impact on data access performance. In this talk, we share insights into how data layout affects that performance. We first explain how modern columnar file formats such as Parquet and ORC work and how to use them efficiently to store data values. We then present our best practices for storing datasets, including guidelines for choosing partitioning columns and deciding how to bucket a table.
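To make the partitioning and bucketing ideas concrete, here is a minimal sketch in plain Python. It is an illustration rather than the speakers' method or any engine's actual implementation. The helper names (`partition_path`, `bucket_id`, `write_layout`, `scan`), the sample rows, and the use of CRC32 as the bucket hash are all assumptions made for this example; real engines use their own hash functions and write actual files. The sketch models Hive-style `column=value` directories and shows that a filter on the partitioning column only needs to touch the matching directory, while bucketing sends equal keys to the same bucket number in every partition.

```python
import zlib
from collections import defaultdict


def partition_path(row, partition_cols):
    """Build a Hive-style directory name for a row, e.g. 'date=2024-01-01'."""
    return "/".join(f"{col}={row[col]}" for col in partition_cols)


def bucket_id(key, num_buckets):
    """Assign a key to a bucket. CRC32 is a stand-in hash chosen for this sketch."""
    return zlib.crc32(str(key).encode("utf-8")) % num_buckets


def write_layout(rows, partition_cols, bucket_col, num_buckets):
    """Group rows as {directory: {bucket: [rows]}}, mimicking files on disk."""
    layout = defaultdict(lambda: defaultdict(list))
    for row in rows:
        directory = partition_path(row, partition_cols)
        bucket = bucket_id(row[bucket_col], num_buckets)
        layout[directory][bucket].append(row)
    return layout


def scan(layout, partition_filter):
    """Read only the directories whose path contains the given 'col=value' segment."""
    touched = [d for d in layout if partition_filter in d.split("/")]
    rows = [r for d in touched for bucket in layout[d].values() for r in bucket]
    return touched, rows


# Hypothetical sample data, partitioned by date and bucketed by user_id.
rows = [
    {"date": "2024-01-01", "user_id": 1, "amount": 10},
    {"date": "2024-01-01", "user_id": 2, "amount": 20},
    {"date": "2024-01-02", "user_id": 1, "amount": 30},
]
layout = write_layout(rows, ["date"], "user_id", num_buckets=4)

# A filter on the partitioning column skips every other directory.
touched, result = scan(layout, "date=2024-01-02")
print(touched)
print(result)
```

In this model, a column that queries filter on frequently and that has a modest number of distinct values is a natural partitioning candidate, because each distinct value becomes a directory. A high-cardinality join or grouping key is a more natural bucketing candidate, because the bucket count stays fixed regardless of how many distinct keys exist.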

Ad

More Related Content

What's hot (20)

Dynamic filtering for presto join optimisation

Dynamic filtering for presto join optimisationOri Reshef @Roman Zeyde Explains how to optimize Presto Joins in selective use cases.

Roman is a Talpiot graduate and an ex-googler, today working as Varada presto architect.

The Rise of ZStandard: Apache Spark/Parquet/ORC/Avro

The Rise of ZStandard: Apache Spark/Parquet/ORC/AvroDatabricks Zstandard is a fast compression algorithm which you can use in Apache Spark in various way. In this talk, I briefly summarized the evolution history of Apache Spark in this area and four main use cases and the benefits and the next steps:

1) ZStandard can optimize Spark local disk IO by compressing shuffle files significantly. This is very useful in K8s environments. It’s beneficial not only when you use `emptyDir` with `memory` medium, but also it maximizes OS cache benefit when you use shared SSDs or container local storage. In Spark 3.2, SPARK-34390 takes advantage of ZStandard buffer pool feature and its performance gain is impressive, too.

2) Event log compression is another area to save your storage cost on the cloud storage like S3 and to improve the usability. SPARK-34503 officially switched the default event log compression codec from LZ4 to Zstandard.

3) Zstandard data file compression can give you more benefits when you use ORC/Parquet files as your input and output. Apache ORC 1.6 supports Zstandardalready and Apache Spark enables it via SPARK-33978. The upcoming Parquet 1.12 will support Zstandard compression.

4) Last, but not least, since Apache Spark 3.0, Zstandard is used to serialize/deserialize MapStatus data instead of Gzip.

There are more community works to utilize Zstandard to improve Spark. For example, Apache Avro community also supports Zstandard and SPARK-34479 aims to support Zstandard in Spark’s avro file format in Spark 3.2.0.

Building Robust ETL Pipelines with Apache Spark

Building Robust ETL Pipelines with Apache SparkDatabricks Stable and robust ETL pipelines are a critical component of the data infrastructure of modern enterprises. ETL pipelines ingest data from a variety of sources and must handle incorrect, incomplete or inconsistent records and produce curated, consistent data for consumption by downstream applications. In this talk, we’ll take a deep dive into the technical details of how Apache Spark “reads” data and discuss how Spark 2.2’s flexible APIs; support for a wide variety of datasources; state of art Tungsten execution engine; and the ability to provide diagnostic feedback to users, making it a robust framework for building end-to-end ETL pipelines.

Spark Shuffle Deep Dive (Explained In Depth) - How Shuffle Works in Spark

Spark Shuffle Deep Dive (Explained In Depth) - How Shuffle Works in SparkBo Yang The slides explain how shuffle works in Spark and help people understand more details about Spark internal. It shows how the major classes are implemented, including: ShuffleManager (SortShuffleManager), ShuffleWriter (SortShuffleWriter, BypassMergeSortShuffleWriter, UnsafeShuffleWriter), ShuffleReader (BlockStoreShuffleReader).

Parquet performance tuning: the missing guide

Parquet performance tuning: the missing guideRyan Blue Parquet performance tuning focuses on optimizing Parquet reads by leveraging columnar organization, encoding, and filtering techniques. Statistics and dictionary filtering can eliminate unnecessary data reads by filtering at the row group and page levels. However, these optimizations require columns to be sorted and fully dictionary encoded within files. Increasing dictionary size thresholds and decreasing row group sizes can help avoid dictionary encoding fallback and improve filtering effectiveness. Future work may include new encodings, compression algorithms like Brotli, and page-level filtering in the Parquet format.

Introduction to Apache Calcite

Introduction to Apache CalciteJordan Halterman This talk provides an in-depth overview of the key concepts of Apache Calcite. It explores the Calcite catalog, parsing, validation, and optimization with various planners.

Spark and Spark Streaming

Spark and Spark Streaming宇 傅 Spark and Spark Streaming can process streaming data using a technique called Discretized Streams (D-Streams) that divides the data into small batch intervals. This allows Spark to provide fault tolerance through checkpointing and recovery of state across intervals. Spark Streaming also introduces the concept of "exactly-once" processing semantics through checkpointing and write ahead logs. Spark Structured Streaming builds on these concepts and adds SQL support and watermarking to allow incremental processing of streaming data.

Cosco: An Efficient Facebook-Scale Shuffle Service

Cosco: An Efficient Facebook-Scale Shuffle ServiceDatabricks Cosco is an efficient shuffle-as-a-service that powers Spark (and Hive) jobs at Facebook warehouse scale. It is implemented as a scalable, reliable and maintainable distributed system. Cosco is based on the idea of partial in-memory aggregation across a shared pool of distributed memory. This provides vastly improved efficiency in disk usage compared to Spark's built-in shuffle. Long term, we believe the Cosco architecture will be key to efficiently supporting jobs at ever larger scale. In this talk we'll take a deep dive into the Cosco architecture and describe how it's deployed at Facebook. We will then describe how it's integrated to run shuffle for Spark, and contrast it with Spark's built-in sort-based shuffle mechanism and SOS (presented at Spark+AI Summit 2018).

Presto on Apache Spark: A Tale of Two Computation Engines

Presto on Apache Spark: A Tale of Two Computation EnginesDatabricks The architectural tradeoffs between the map/reduce paradigm and parallel databases has been a long and open discussion since the dawn of MapReduce over more than a decade ago. At Facebook, we have spent the past several years in independently building and scaling both Presto and Spark to Facebook scale batch workloads, and it is now increasingly evident that there is significant value in coupling Presto’s state-of-art low-latency evaluation with Spark’s robust and fault tolerant execution engine.

Radical Speed for SQL Queries on Databricks: Photon Under the Hood

Radical Speed for SQL Queries on Databricks: Photon Under the HoodDatabricks Join this session to hear from the Photon product and engineering team talk about the latest developments with the project.

As organizations embrace data-driven decision-making, it has become imperative for them to invest in a platform that can quickly ingest and analyze massive amounts and types of data. With their data lakes, organizations can store all their data assets in cheap cloud object storage. But data lakes alone lack robust data management and governance capabilities. Fortunately, Delta Lake brings ACID transactions to your data lakes – making them more reliable while retaining the open access and low storage cost you are used to.

Using Delta Lake as its foundation, the Databricks Lakehouse platform delivers a simplified and performant experience with first-class support for all your workloads, including SQL, data engineering, data science & machine learning. With a broad set of enhancements in data access and filtering, query optimization and scheduling, as well as query execution, the Lakehouse achieves state-of-the-art performance to meet the increasing demands of data applications. In this session, we will dive into Photon, a key component responsible for efficient query execution.

Photon was first introduced at Spark and AI Summit 2020 and is written from the ground up in C++ to take advantage of modern hardware. It uses the latest techniques in vectorized query processing to capitalize on data- and instruction-level parallelism in CPUs, enhancing performance on real-world data and applications — all natively on your data lake. Photon is fully compatible with the Apache Spark™ DataFrame and SQL APIs to ensure workloads run seamlessly without code changes. Come join us to learn more about how Photon can radically speed up your queries on Databricks.

Apache Flink internals

By Kostas Tzoumas. This document provides an overview of Apache Flink internals. It begins with an introduction and recap of Flink programming concepts. It then discusses how Flink programs are compiled into execution plans and executed in a pipelined fashion, as opposed to being executed eagerly like regular code. The document outlines Flink's architecture including the optimizer, runtime environment, and data storage integrations. It also covers iterative processing and how Flink handles iterations both by unrolling loops and with native iterative datasets.

Understanding Query Plans and Spark UIs

By Databricks. The common use cases of Spark SQL include ad hoc analysis, logical warehouse, query federation, and ETL processing. Spark SQL also powers the other Spark libraries, including structured streaming for stream processing, MLlib for machine learning, and GraphFrame for graph-parallel computation. To boost the speed of your Spark applications, you can optimize queries before deploying them to production systems. Spark query plans and Spark UIs provide insight into the performance of your queries. This talk shows how to read and tune query plans for better performance. It will also cover the major related features in the recent and upcoming releases of Apache Spark.

A Deep Dive into Spark SQL's Catalyst Optimizer with Yin Huai

By Databricks. Catalyst is becoming one of the most important components of Apache Spark, as it underpins all the major new APIs in Spark 2.0 and later versions, from DataFrames and Datasets to Streaming. At its core, Catalyst is a general library for manipulating trees.

In this talk, Yin explores a modular compiler frontend for Spark based on this library that includes a query analyzer, optimizer, and an execution planner. Yin offers a deep dive into Spark SQL’s Catalyst optimizer, introducing the core concepts of Catalyst and demonstrating how developers can extend it. You’ll leave with a deeper understanding of how Spark analyzes, optimizes, and plans a user’s query.

The Parquet Format and Performance Optimization Opportunities

By Databricks. The Parquet format is one of the most widely used columnar storage formats in the Spark ecosystem. Given that I/O is expensive and that the storage layer is the entry point for any query execution, understanding the intricacies of your storage format is important for optimizing your workloads.

As an introduction, we will provide context around the format, covering the basics of structured data formats and the underlying physical data storage model alternatives (row-wise, columnar and hybrid). Given this context, we will dive deeper into specifics of the Parquet format: representation on disk, physical data organization (row-groups, column-chunks and pages) and encoding schemes. Now equipped with sufficient background knowledge, we will discuss several performance optimization opportunities with respect to the format: dictionary encoding, page compression, predicate pushdown (min/max skipping), dictionary filtering and partitioning schemes. We will learn how to combat the evil that is ‘many small files’, and will discuss the open-source Delta Lake format in relation to this and Parquet in general.

This talk serves as both an approachable refresher on columnar storage and a guide on how to leverage the Parquet format for speeding up analytical workloads in Spark using tangible tips and tricks.

Deep Dive into the New Features of Apache Spark 3.0

By Databricks. Continuing with the objective of making Spark faster, easier, and smarter, Apache Spark 3.0 extends its scope with more than 3000 resolved JIRAs. We will talk about the exciting new developments in Spark 3.0 as well as some other major initiatives that are coming in the future.

Migrating from InnoDB and HBase to MyRocks at Facebook

By MariaDB plc. Migrating large databases at Facebook from InnoDB to MyRocks and HBase to MyRocks resulted in significant space savings of 2-4x and improved write performance by up to 10x. Various techniques were used for the migrations such as creating new MyRocks instances without downtime, loading data efficiently, testing on shadow instances, and promoting MyRocks instances as masters. Ongoing work involves optimizations like direct I/O, dictionary compression, parallel compaction, and dynamic configuration changes to further improve performance and efficiency.

SparkSQL: A Compiler from Queries to RDDs

By Databricks. SparkSQL, a module for processing structured data in Spark, is one of the fastest SQL-on-Hadoop systems in the world. This talk will dive into the technical details of SparkSQL spanning the entire lifecycle of a query execution. The audience will walk away with a deeper understanding of how Spark analyzes, optimizes, plans and executes a user’s query.

Speaker: Sameer Agarwal

This talk was originally presented at Spark Summit East 2017.

Spark shuffle introduction

By colorant. This document discusses Spark shuffle, which is an expensive operation that involves data partitioning, serialization/deserialization, compression, and disk I/O. It provides an overview of how shuffle works in Spark and the history of optimizations like sort-based shuffle and an external shuffle service. Key concepts discussed include shuffle writers, readers, and the pluggable block transfer service that handles data transfer. The document also covers shuffle-related configuration options and potential future work.

Lessons from the Field: Applying Best Practices to Your Apache Spark Applicat...

By Databricks. This document discusses best practices for optimizing Apache Spark applications. It covers techniques for speeding up file loading, optimizing file storage and layout, identifying bottlenecks in queries, dealing with many partitions, using datasource tables, managing schema inference, file types and compression, partitioning and bucketing files, managing shuffle partitions with adaptive execution, optimizing unions, using the cost-based optimizer, and leveraging the data skipping index. The presentation aims to help Spark developers apply these techniques to improve performance.

File Format Benchmarks - Avro, JSON, ORC, & Parquet

By Owen O'Malley. Hadoop Summit June 2016

The landscape for storing your big data is quite complex, with several competing formats and different implementations of each format. Understanding your use of the data is critical for picking the format. Depending on your use case, the different formats perform very differently. Although you can use a hammer to drive a screw, it isn’t fast or easy to do so. The use cases that we’ve examined are:

* reading all of the columns
* reading a few of the columns
* filtering using a filter predicate
* writing the data

Furthermore, it is important to benchmark on real data rather than synthetic data. We used the GitHub logs data available freely from http://githubarchive.org. We will make all of the benchmark code open source so that our experiments can be replicated.

Similar to Why you should care about data layout in the file system with Cheng Lian and Vida Ha (20)

Data Storage Tips for Optimal Spark Performance-(Vida Ha, Databricks)

By Spark Summit. Vida Ha presented best practices for storing and working with data in files for optimal Spark performance. Some key tips included choosing appropriate file sizes between 64 MB and 1 GB, using splittable compression formats like gzip and Snappy, enforcing schemas for structured formats like Parquet and Avro, and reusing Hadoop libraries to read various file formats. General tips involved controlling output file size through methods like coalesce and repartition, using sc.wholeTextFiles for non-splittable formats, and processing files individually by filename.

SQL Server 2014 Memory Optimised Tables - Advanced

By Tony Rogerson. Hekaton is a large piece of kit; this session will focus on the internals of how in-memory tables and native stored procedures work and interact: database structure (use of File Stream, backup/restore considerations in HA and DR, and database durability) and in-memory table makeup (hash and range indexes, row chains, and Multi-Version Concurrency Control, MVCC). It also covers design considerations and gotchas to watch out for.

The session will be demo led.

Note: the session will assume the basics of Hekaton are known, so it is recommended you attend the Basics session.

Elasticsearch Arcihtecture & What's New in Version 5

By Burak TUNGUT. General architectural concepts of Elasticsearch and what's new in version 5. The examples were prepared using our company's business, so they are excluded from the presentation.

Low Level CPU Performance Profiling Examples

By Tanel Poder. Here are the slides of a recent Spark meetup. The demo output files will be uploaded to http://github.com/gluent/spark-prof

Dissecting Scalable Database Architectures

By hypertable. Presentation by Doug Judd, co-founder of Hypertable Inc, at the Groupon office in Palo Alto, CA on November 15th, 2012.

Deep Dive into Project Tungsten: Bringing Spark Closer to Bare Metal-(Josh Ro...

By Spark Summit. This document summarizes Project Tungsten, an effort by Databricks to substantially improve the memory and CPU efficiency of Spark applications. It discusses how Tungsten optimizes memory and CPU usage through techniques like explicit memory management, cache-aware algorithms, and code generation. It provides examples of how these optimizations improve performance for aggregation queries and record sorting. The roadmap outlines expanding Tungsten's optimizations in Spark 1.4 through 1.6 to support more workloads and achieve end-to-end processing using binary data representations.

Parquet and impala overview external

By mattlieber. Parquet is a column-oriented data format that provides better performance than other formats like Avro for nested data through techniques like dictionary encoding and run-length encoding. The document discusses Parquet and compares it to other Hadoop data formats. It also provides an overview of Impala, an MPP SQL query engine that can be used to run queries against Parquet data faster than Hive. The use case discusses how Parquet can help deal with nested XML data when loaded into Hadoop.

Cassandra Day Chicago 2015: Introduction to Apache Cassandra & DataStax Enter...

By DataStax Academy. Speaker(s): Jon Haddad & Luke Tillman, Apache Cassandra Evangelists at DataStax

This is a crash course introduction to Cassandra. You'll step away understanding how it's possible to utilize this distributed database to achieve high availability across multiple data centers, scale out as your needs grow, and not be woken up at 3am just because a server failed. We'll cover the basics of data modeling with CQL, and understand how that data is stored on disk. We'll wrap things up by setting up Cassandra locally, so bring your laptops.

Cassandra Day Atlanta 2015: Introduction to Apache Cassandra & DataStax Enter...

By DataStax Academy. This is a crash course introduction to Cassandra. You'll step away understanding how it's possible to utilize this distributed database to achieve high availability across multiple data centers, scale out as your needs grow, and not be woken up at 3am just because a server failed. We'll cover the basics of data modeling with CQL, and understand how that data is stored on disk. We'll wrap things up by setting up Cassandra locally, so bring your laptops.

Cassandra Day London 2015: Introduction to Apache Cassandra and DataStax Ente...

By DataStax Academy. Speaker(s): Jon Haddad, Apache Cassandra Evangelist and Luke Tillman, Apache Cassandra Language Evangelist at DataStax

This is a crash course introduction to Cassandra. You'll step away understanding how it's possible to utilize this distributed database to achieve high availability across multiple data centers, scale out as your needs grow, and not be woken up at 3am just because a server failed. We'll cover the basics of data modeling with CQL, and understand how that data is stored on disk. We'll wrap things up by setting up Cassandra locally, so bring your laptops.

Optimizing Hive Queries

By Owen O'Malley. This document summarizes techniques for optimizing Hive queries, including recommendations around data layout, format, joins, and debugging. It discusses partitioning, bucketing, sort order, normalization, text format, sequence files, RCFiles, ORC format, compression, shuffle joins, map joins, sort merge bucket joins, count distinct queries, using explain plans, and dealing with skew.

Optimizing Hive Queries

By DataWorks Summit. Apache Hive is a data warehousing system for large volumes of data stored in Hadoop. However, the data is useless unless you can use it to add value to your company. Hive provides a SQL-based query language that dramatically simplifies the process of querying your large data sets. That is especially important while your data scientists are developing and refining their queries to improve their understanding of the data. In many companies, such as Facebook, Hive accounts for a large percentage of the total MapReduce queries that are run on the system. Although Hive makes writing large data queries easier for the user, there are many performance traps for the unwary. Many of them are artifacts of the way Hive has evolved over the years and the requirement that the default behavior must be safe for all users. This talk will present examples of how Hive users have made mistakes that made their queries run much longer than necessary. It will also present guidelines for how to get better performance for your queries and how to look at the query plan to understand what Hive is doing.

Fabian Hueske – Juggling with Bits and Bytes

By Flink Forward. This document discusses how Apache Flink operates on binary data. Flink adopts a database management system approach by serializing data objects into fixed memory segments for efficient in-memory and out-of-memory processing. This approach improves memory safety, reduces garbage collection overhead, and allows for efficient algorithms to operate directly on the binary data representations. It requires significant implementation effort compared to using generic Java collections, but provides benefits like predictable performance and resource usage.

Apache Spark's Built-in File Sources in Depth

By Databricks. This document summarizes a presentation about Apache Spark's built-in file sources. It discusses various file formats including Parquet, ORC, Avro, JSON, CSV, text and binary. It explains the differences between column-oriented and row-oriented formats. It also covers data layout techniques like partitioning and bucketing. Regarding file readers, it describes how Spark analyzes data to skip unneeded portions. For writers, it explains how Spark uses a distributed, transactional approach by writing to temporary locations and committing outputs.

Using existing language skillsets to create large-scale, cloud-based analytics

By Microsoft Tech Community. This document discusses how to use Python for analytics with Azure Data Lake. Currently, Python can be used via an extension library to run Python code in a reducer context. Going forward, Python will be able to run natively on vertices, allowing Python code to be used to build extractors, processors, outputters, reducers, appliers, and combiners. This will enable fully leveraging Python for analytics tasks like transforming data, creating new columns, and deleting columns.

Managing data and operation distribution in MongoDB

By Antonios Giannopoulos. This document provides an overview of managing data and operations distribution in MongoDB sharded clusters. It discusses shard key selection, applying and reverting shard keys, automatic and manual chunk splitting and merging, balancing data distribution across shards, and cleaning up orphaned documents. The goal is to optimally distribute data and load across shards in the cluster.

Azure Data Factory Data Flow Performance Tuning 101

By Mark Kromer. The document provides performance timing results and recommendations for optimizing Azure Data Factory data flows. Sample 1 processed a 421MB file with 887k rows in 4 minutes using default partitioning on an 80-core Azure IR. Sample 2 processed a table with the same size and transforms in 3 minutes using source and derived column partitioning. Sample 3 processed the same size file in 2 minutes with default partitioning. The document recommends partitioning strategies, using memory optimized clusters, and scaling cores to improve performance.

1650607.ppt

By KalsoomTahir2. The document summarizes the architecture and internal structure of Oracle databases. It describes how an Oracle database consists of an Oracle instance and a database. The database contains physical structures like datafiles, redo logs, and control files, as well as logical structures like tablespaces, schemas, tables, indexes, and views. It also explains the various components that make up an Oracle instance, including the system global area (SGA) and background processes that manage the database.

Managing Data and Operation Distribution In MongoDB

By Jason Terpko. In a sharded MongoDB cluster, scale and data distribution are defined by your shard keys. Even when choosing the correct shard key, ongoing maintenance and review can still be required to maintain optimal performance.

This presentation will review shard key selection and how the distribution of chunks can create scenarios where you may need to manually move, split, or merge chunks in your sharded cluster. Scenarios requiring these actions can exist with both optimal and sub-optimal shard keys. Example use cases will provide tips on selection of shard key, detecting an issue, reasons why you may encounter these scenarios, and specific steps you can take to rectify the issue.

MongoDB Replication fundamentals - Desert Code Camp - October 2014

By Avinash Ramineni. MongoDB uses replication to provide high availability and redundancy. The document discusses MongoDB replication fundamentals including replica sets, oplogs, and reading from secondary nodes. It provides an overview of primary/secondary roles in replica sets, how writes are logged to oplogs, and how secondaries replicate by reading the primary's oplog. It also covers read preference settings and write concerns in MongoDB replication.

Using existing language skillsets to create large-scale, cloud-based analytics

By Microsoft Tech Community.

More from Databricks (20)

DW Migration Webinar-March 2022.pptx

By Databricks. The document discusses migrating a data warehouse to the Databricks Lakehouse Platform. It outlines why legacy data warehouses are struggling, how the Databricks Platform addresses these issues, and key considerations for modern analytics and data warehousing. The document then provides an overview of the migration methodology, approach, strategies, and key takeaways for moving to a lakehouse on Databricks.

Data Lakehouse Symposium | Day 1 | Part 1

By Databricks. The world of data architecture began with applications. Next came data warehouses. Then text was organized into a data warehouse.

Then one day the world discovered a whole new kind of data that was being generated by organizations. The world found that machines generated data that could be transformed into valuable insights. This was the origin of what is today called the data lakehouse. The evolution of data architecture continues today.

Come listen to industry experts describe this transformation of ordinary data into a data architecture that is invaluable to business. Simply put, organizations that take data architecture seriously are going to be at the forefront of business tomorrow.

This is an educational event.

Several of the authors of the book Building the Data Lakehouse will be presenting at this symposium.

Data Lakehouse Symposium | Day 1 | Part 2

By Databricks. The world of data architecture began with applications. Next came data warehouses. Then text was organized into a data warehouse.

Then one day the world discovered a whole new kind of data that was being generated by organizations. The world found that machines generated data that could be transformed into valuable insights. This was the origin of what is today called the data lakehouse. The evolution of data architecture continues today.

Come listen to industry experts describe this transformation of ordinary data into a data architecture that is invaluable to business. Simply put, organizations that take data architecture seriously are going to be at the forefront of business tomorrow.

This is an educational event.

Several of the authors of the book Building the Data Lakehouse will be presenting at this symposium.

Data Lakehouse Symposium | Day 2

By Databricks. The world of data architecture began with applications. Next came data warehouses. Then text was organized into a data warehouse.

Then one day the world discovered a whole new kind of data that was being generated by organizations. The world found that machines generated data that could be transformed into valuable insights. This was the origin of what is today called the data lakehouse. The evolution of data architecture continues today.

Come listen to industry experts describe this transformation of ordinary data into a data architecture that is invaluable to business. Simply put, organizations that take data architecture seriously are going to be at the forefront of business tomorrow.

This is an educational event.

Several of the authors of the book Building the Data Lakehouse will be presenting at this symposium.

Data Lakehouse Symposium | Day 4

By Databricks. The document discusses the challenges of modern data, analytics, and AI workloads. Most enterprises struggle with siloed data systems that make integration and productivity difficult. The future of data lies with a data lakehouse platform that can unify data engineering, analytics, data warehousing, and machine learning workloads on a single open platform. The Databricks Lakehouse platform aims to address these challenges with its open data lake approach and capabilities for data engineering, SQL analytics, governance, and machine learning.

5 Critical Steps to Clean Your Data Swamp When Migrating Off of Hadoop

By Databricks. In this session, learn how to quickly supplement your on-premises Hadoop environment with a simple, open, and collaborative cloud architecture that enables you to generate greater value with scaled application of analytics and AI on all your data. You will also learn five critical steps for a successful migration to the Databricks Lakehouse Platform along with the resources available to help you begin to re-skill your data teams.

Democratizing Data Quality Through a Centralized Platform

By Databricks. Bad data leads to bad decisions and broken customer experiences. Organizations depend on complete and accurate data to power their business, maintain efficiency, and uphold customer trust. With thousands of datasets and pipelines running, how do we ensure that all data meets quality standards, and that expectations are clear between producers and consumers? Investing in shared, flexible components and practices for monitoring data health is crucial for a complex data organization to rapidly and effectively scale.

At Zillow, we built a centralized platform to meet our data quality needs across stakeholders. The platform is accessible to engineers, scientists, and analysts, and seamlessly integrates with existing data pipelines and data discovery tools. In this presentation, we will provide an overview of our platform’s capabilities, including:

Giving producers and consumers the ability to define and view data quality expectations using a self-service onboarding portal

Performing data quality validations using libraries built to work with Spark

Dynamically generating pipelines that can be abstracted away from users

Flagging data that doesn’t meet quality standards at the earliest stage and giving producers the opportunity to resolve issues before use by downstream consumers

Exposing data quality metrics alongside each dataset to provide producers and consumers with a comprehensive picture of health over time

Learn to Use Databricks for Data Science

By Databricks. Data scientists face numerous challenges throughout the data science workflow that hinder productivity. As organizations continue to become more data-driven, a collaborative environment is more critical than ever: one that provides easier access and visibility into the data, reports and dashboards built against the data, reproducibility, and insights uncovered within the data. Join us to hear how Databricks’ open and collaborative platform simplifies data science by enabling you to run all types of analytics workloads, from data preparation to exploratory analysis and predictive analytics, at scale, all on one unified platform.

Why APM Is Not the Same As ML Monitoring

By Databricks. Application performance monitoring (APM) has become the cornerstone of software engineering allowing engineering teams to quickly identify and remedy production issues. However, as the world moves to intelligent software applications that are built using machine learning, traditional APM quickly becomes insufficient to identify and remedy production issues encountered in these modern software applications.

As a lead software engineer at NewRelic, my team built high-performance monitoring systems including Insights, Mobile, and SixthSense. As I transitioned to building ML Monitoring software, I found the architectural principles and design choices underlying APM to not be a good fit for this brand new world. In fact, blindly following APM designs led us down paths that would have been better left unexplored.

In this talk, I draw upon my (and my team’s) experience building an ML Monitoring system from the ground up and deploying it on customer workloads running large-scale ML training with Spark as well as real-time inference systems. I will highlight how the key principles and architectural choices of APM don’t apply to ML monitoring. You’ll learn why, understand what ML Monitoring can successfully borrow from APM, and hear what is required to build a scalable, robust ML Monitoring architecture.

The Function, the Context, and the Data—Enabling ML Ops at Stitch Fix

By Databricks. Autonomy and ownership are core to working at Stitch Fix, particularly on the Algorithms team. We enable data scientists to deploy and operate their models independently, with minimal need for handoffs or gatekeeping. By writing a simple function and calling out to an intuitive API, data scientists can harness a suite of platform-provided tooling meant to make ML operations easy. In this talk, we will dive into the abstractions the Data Platform team has built to enable this. We will go over the interface data scientists use to specify a model and what that hooks into, including online deployment, batch execution on Spark, and metrics tracking and visualization.

Stage Level Scheduling Improving Big Data and AI Integration

By Databricks. In this talk, I will dive into the stage level scheduling feature added to Apache Spark 3.1. Stage level scheduling extends upon Project Hydrogen by improving big data ETL and AI integration and also enables multiple other use cases. It is beneficial any time the user wants to change container resources between stages in a single Apache Spark application, whether those resources are CPU, memory or GPUs. One of the most popular use cases is enabling end-to-end scalable Deep Learning and AI to efficiently use GPU resources. In this type of use case, users read from a distributed file system, do data manipulation and filtering to get the data into a format that the Deep Learning algorithm needs for training or inference, and then send the data into a Deep Learning algorithm. Using stage level scheduling combined with accelerator aware scheduling enables users to seamlessly go from ETL to Deep Learning running on the GPU by adjusting the container requirements for different stages in Spark within the same application. This makes writing these applications easier and can help with hardware utilization and costs.

There are other ETL use cases where users want to change CPU and memory resources between stages, for instance when there is data skew or when the data size is much larger in certain stages of the application. In this talk, I will go over the feature details, cluster requirements, the API and use cases. I will demo how the stage level scheduling API can be used by Horovod to seamlessly go from data preparation to training using the TensorFlow Keras API on GPUs.

The talk will also touch on other new Apache Spark 3.1 functionality, such as pluggable caching, which can be used to enable faster dataframe access when operating from GPUs.

Simplify Data Conversion from Spark to TensorFlow and PyTorch

By Databricks. In this talk, I would like to introduce an open-source tool built by our team that simplifies the data conversion from Apache Spark to deep learning frameworks.

Imagine you have a large dataset, say 20 GB, and you want to use it to train a TensorFlow model. Before feeding the data to the model, you need to clean and preprocess your data using Spark. Now you have your dataset in a Spark DataFrame. When it comes to the training part, you may run into a problem: how can I convert my Spark DataFrame to some format recognized by my TensorFlow model?

The existing data conversion process can be tedious. For example, to convert an Apache Spark DataFrame to a TensorFlow Dataset file format, you need to either save the Apache Spark DataFrame on a distributed filesystem in Parquet format and load the converted data with third-party tools such as Petastorm, or save it directly in TFRecord files with spark-tensorflow-connector and load it back using TFRecordDataset. Both approaches take more than 20 lines of code to manage the intermediate data files, rely on different parsing syntax, and require extra attention for handling vector columns in the Spark DataFrames. In short, all these engineering frictions greatly reduce data scientists’ productivity.

The Databricks Machine Learning team contributed a new Spark Dataset Converter API to Petastorm to simplify these tedious data conversion steps. With the new API, it takes a few lines of code to convert a Spark DataFrame to a TensorFlow Dataset or a PyTorch DataLoader with default parameters.

In the talk, I will use an example to show how to use the Spark Dataset Converter to train a TensorFlow model and how simple it is to go from single-node training to distributed training on Databricks.

Scaling your Data Pipelines with Apache Spark on Kubernetes

Databricks

There is no doubt Kubernetes has emerged as the next generation of cloud native infrastructure to support a wide variety of distributed workloads. Apache Spark has evolved to run both machine learning and large scale analytics workloads. There is growing interest in running Apache Spark natively on Kubernetes. By combining the flexibility of Kubernetes with the scalable data processing of Apache Spark, you can run any data and machine learning pipelines on this infrastructure while effectively utilizing the resources at your disposal.

In this talk, Rajesh Thallam and Sougata Biswas will share how to effectively run your Apache Spark applications on Google Kubernetes Engine (GKE) and Google Cloud Dataproc, and how to orchestrate data and machine learning pipelines with managed Apache Airflow on GKE (Google Cloud Composer). The following topics will be covered:

· Understanding key traits of Apache Spark on Kubernetes

· Things to know when running Apache Spark on Kubernetes, such as autoscaling

· A demonstration of running analytics pipelines on Apache Spark orchestrated with Apache Airflow on a Kubernetes cluster

Scaling and Unifying SciKit Learn and Apache Spark Pipelines

Databricks

Pipelines have become ubiquitous, as stringing multiple functions together to compose applications has gained adoption and popularity. Common pipeline abstractions such as “fit” and “transform” are even shared across divergent platforms such as Python Scikit-Learn and Apache Spark.

Scaling pipelines at the level of simple functions is desirable for many AI applications, but it is not directly supported by Ray’s parallelism primitives. In this talk, Raghu will describe a pipeline abstraction that takes advantage of Ray’s compute model to efficiently scale arbitrarily complex pipeline workflows. He will demonstrate how this abstraction cleanly unifies pipeline workflows across multiple platforms such as Scikit-Learn and Spark, and achieves nearly optimal scale-out parallelism on pipelined computations.

Attendees will learn how pipelined workflows can be mapped to Ray’s compute model and how they can both unify and accelerate their pipelines with Ray.

Sawtooth Windows for Feature Aggregations

Databricks

In this talk about Zipline, we will introduce a new type of windowing construct called a sawtooth window. We will describe various properties of sawtooth windows that we utilize to achieve online-offline consistency, while still maintaining high throughput, low read latency and tunable write latency for serving machine learning features. We will also talk about a simple deployment strategy for correcting feature drift due to operations that are not “abelian groups” and that operate over change data.

Redis + Apache Spark = Swiss Army Knife Meets Kitchen Sink

Databricks

We want to present multiple anti-patterns that utilize Redis in unconventional ways to get the maximum out of Apache Spark. All examples presented are tried and tested in production at scale at Adobe. The most common integration is spark-redis, which interfaces with Redis as a DataFrame backing store or as an upstream for Structured Streaming. We deviate from the common use cases to explore where Redis can plug gaps while scaling out high throughput applications in Spark.

Niche 1 : Long Running Spark Batch Job – Dispatch New Jobs by polling a Redis Queue

· Why?

o Custom queries on top of a table; we load the data once and query N times

· Why not Structured Streaming

· Working Solution using Redis

Niche 2 : Distributed Counters

· Problems with Spark Accumulators

· Utilize Redis Hashes as distributed counters

· Precautions for retries and speculative execution

· Pipelining to improve performance
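The retry precaution in Niche 2 can be sketched as follows. This is a minimal illustration, not the presenters' implementation: `FakeRedisHash` is a hypothetical in-memory stand-in that mimics the Redis `HINCRBY`, `HSET` and `HVALS` commands so the example runs without a server, and the even-number filter is an arbitrary placeholder for real task logic. The assumed idea is that each task overwrites its own hash field instead of incrementing a shared one, so a retried or speculatively executed task cannot double count.

```python
from typing import Dict, List


class FakeRedisHash:
    """Hypothetical in-memory stand-in for a single Redis hash."""

    def __init__(self) -> None:
        self._fields: Dict[str, int] = {}

    def hincrby(self, field: str, amount: int) -> int:
        # Mimics HINCRBY: add to the field, creating it at 0 if missing.
        self._fields[field] = self._fields.get(field, 0) + amount
        return self._fields[field]

    def hset(self, field: str, value: int) -> None:
        # Mimics HSET: overwrite the field, which makes repeats harmless.
        self._fields[field] = value

    def hvals(self) -> List[int]:
        # Mimics HVALS: return every value stored in the hash.
        return list(self._fields.values())


def count_matches(rows: List[int]) -> int:
    # Placeholder task logic: count even numbers locally, so each task
    # needs only one write to the counter store.
    return sum(1 for row in rows if row % 2 == 0)


def naive_task(counter: FakeRedisHash, rows: List[int]) -> None:
    # Increments a shared field: a retry adds the same count again.
    counter.hincrby("matches", count_matches(rows))


def retry_safe_task(counter: FakeRedisHash, partition_id: int, rows: List[int]) -> None:
    # Overwrites a per-partition field: a retry rewrites the same value.
    counter.hset(f"partition:{partition_id}", count_matches(rows))


partitions = {0: [1, 2, 3, 4], 1: [6, 7, 8]}

naive = FakeRedisHash()
safe = FakeRedisHash()
for pid, rows in partitions.items():
    naive_task(naive, rows)
    retry_safe_task(safe, pid, rows)

# Simulate partition 0 being retried (or run speculatively a second time).
naive_task(naive, partitions[0])
retry_safe_task(safe, 0, partitions[0])

naive_total = sum(naive.hvals())
safe_total = sum(safe.hvals())
print(naive_total, safe_total)
```

The true count is 4; the shared-increment version drifts to 6 after the simulated retry, while the per-partition version stays correct. The trade-off is one hash field per partition and a final sum on the driver.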

Re-imagine Data Monitoring with whylogs and Spark

Databricks

In the era of microservices, decentralized ML architectures and complex data pipelines, data quality has become a bigger challenge than ever. When data is involved in complex business processes and decisions, bad data can, and will, affect the bottom line. As a result, ensuring data quality across the entire ML pipeline is both costly and cumbersome, while data monitoring is often fragmented and performed ad hoc. To address these challenges, we built whylogs, an open source standard for data logging. It is a lightweight data profiling library that enables end-to-end data profiling across the entire software stack. The library implements a language and platform agnostic approach to data quality and data monitoring. It can work with different modes of data operations, including streaming, batch and IoT data.

In this talk, we will provide an overview of the whylogs architecture, including its lightweight statistical data collection approach and various integrations. We will demonstrate how the whylogs integration with Apache Spark achieves large scale data profiling, and we will show how users can apply this integration to existing data and ML pipelines.

Raven: End-to-end Optimization of ML Prediction Queries

Databricks

Machine learning (ML) models are typically part of prediction queries that consist of a data processing part (e.g., for joining, filtering, cleaning, featurization) and an ML part invoking one or more trained models. In this presentation, we identify significant and unexplored opportunities for optimization. To the best of our knowledge, this is the first effort to look at prediction queries holistically, optimizing across both the ML and SQL components.

We will present Raven, an end-to-end optimizer for prediction queries. Raven relies on a unified intermediate representation that captures both data processing and ML operators in a single graph structure.

This allows us to introduce optimization rules that

(i) reduce unnecessary computations by passing information between the data processing and ML operators,

(ii) leverage operator transformations (e.g., turning a decision tree into a SQL expression or an equivalent neural network) to map operators to the right execution engine, and

(iii) integrate compiler techniques to take advantage of the most efficient hardware backend (e.g., CPU, GPU) for each operator.
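The operator transformation mentioned in (ii), turning a decision tree into a SQL expression, can be illustrated with a small sketch. This is not Raven's implementation: the `Leaf` and `Split` classes, the `tree_to_sql` and `predict` helpers, and the `age` and `income` features are all hypothetical names chosen for the example. It assumes a binary tree whose splits take the left branch when `feature <= threshold`, and it uses Python's built-in `sqlite3` module only to check that the generated expression agrees with direct tree evaluation.

```python
import sqlite3
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class Leaf:
    value: float  # prediction returned at this leaf


@dataclass
class Split:
    feature: str      # column name tested at this node
    threshold: float  # go left when feature <= threshold
    left: "Node"
    right: "Node"


Node = Union[Leaf, Split]


def tree_to_sql(node: Node) -> str:
    """Render the tree as a nested SQL CASE expression."""
    if isinstance(node, Leaf):
        return repr(node.value)
    return (
        f"CASE WHEN {node.feature} <= {node.threshold!r} "
        f"THEN {tree_to_sql(node.left)} "
        f"ELSE {tree_to_sql(node.right)} END"
    )


def predict(node: Node, row: Dict[str, float]) -> float:
    """Evaluate the tree directly, for comparison with the SQL form."""
    while isinstance(node, Split):
        node = node.left if row[node.feature] <= node.threshold else node.right
    return node.value


# Toy tree: predict 1.0 only for age > 30 and income > 50000.
tree = Split("age", 30.0, Leaf(0.0), Split("income", 50000.0, Leaf(0.0), Leaf(1.0)))
sql = tree_to_sql(tree)
print(sql)

# Check the SQL expression against direct evaluation on one row.
row = {"age": 45, "income": 60000}
conn = sqlite3.connect(":memory:")
query = f"SELECT {sql} FROM (SELECT 45 AS age, 60000 AS income)"
sql_prediction = conn.execute(query).fetchone()[0]
conn.close()
print(sql_prediction, predict(tree, row))
```

Once the model is an ordinary SQL expression, the query engine can evaluate it inline with the rest of the query, which is the kind of cross-component optimization the abstract describes.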

We have implemented Raven as an extension to Spark’s Catalyst optimizer to enable the optimization of SparkSQL prediction queries. Our implementation also allows the optimization of prediction queries in SQL Server. As we will show, Raven is capable of improving prediction query performance on Apache Spark and SQL Server by up to 13.1x and 330x, respectively. For complex models, where GPU acceleration is beneficial, Raven provides up to 8x speedup compared to state-of-the-art systems. As part of the presentation, we will also give a demo showcasing Raven in action.

Processing Large Datasets for ADAS Applications using Apache Spark

Databricks

Semantic segmentation is the classification of every pixel in an image/video. The segmentation partitions a digital image into multiple objects to simplify/change the representation of the image into something that is more meaningful and easier to analyze [1][2]. The technique has a wide variety of applications ranging from perception in autonomous driving scenarios to cancer cell segmentation for medical diagnosis.

Exponential growth in the datasets that require such segmentation is driven by improvements in the accuracy and quality of the sensors generating the data extending to 3D point cloud data. This growth is further compounded by exponential advances in cloud technologies enabling the storage and compute available for such applications. The need for semantically segmented datasets is a key requirement to improve the accuracy of inference engines that are built upon them.

Streamlining the accuracy and efficiency of these systems directly affects the value of the business outcome for organizations that are developing such functionalities as a part of their AI strategy.

This presentation details workflows for labeling, preprocessing, modeling, and evaluating performance/accuracy. Scientists and engineers leverage domain-specific features/tools that support the entire workflow from labeling the ground truth, handling data from a wide variety of sources/formats, developing models and finally deploying these models. Users can scale their deployments optimally on GPU-based cloud infrastructure to build accelerated training and inference pipelines while working with big datasets. These environments are optimized for engineers to develop such functionality with ease and then scale against large datasets with Spark-based clusters on the cloud.

Massive Data Processing in Adobe Using Delta Lake

Databricks

At Adobe Experience Platform, we ingest TBs of data every day and manage PBs of data for our customers as part of the Unified Profile Offering. At the heart of this is the complex ingestion of a mix of normalized and denormalized data with various linkage scenarios, powered by a central Identity Linking Graph. This helps power various marketing scenarios that are activated in multiple platforms and channels like email, advertisements, etc. We will go over how we built a cost effective and scalable data pipeline using Apache Spark and Delta Lake and share our experiences.

What are we storing?

Multi Source – Multi Channel Problem

Data Representation and Nested Schema Evolution

Performance Trade Offs with Various formats

Go over anti-patterns used

(String FTW)

Data Manipulation using UDFs

Writer Worries and How to Wipe them Away

Staging Tables FTW

Datalake Replication Lag Tracking

Performance Time!

Recently uploaded (20)

Template_A3nnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnnn

cegiver630

Telangana State, India’s newest state that was carved from the erstwhile state of Andhra Pradesh in 2014 has launched the Water Grid Scheme named as ‘Mission Bhagiratha (MB)’ to seek a permanent and sustainable solution to the drinking water problem in the state. MB is designed to provide potable drinking water to every household in their premises through piped water supply (PWS) by 2018. The vision of the project is to ensure safe and sustainable piped drinking water supply from surface water sources

Safety Innovation in Mt. Vernon A Westchester County Model for New Rochelle a...

James Francis Paradigm Asset Management

By James Francis, CEO of Paradigm Asset Management

In the landscape of urban safety innovation, Mt. Vernon is emerging as a compelling case study for neighboring Westchester County cities. The municipality’s recently launched Public Safety Camera Program not only represents a significant advancement in community protection but also offers valuable insights for New Rochelle and White Plains as they consider their own safety infrastructure enhancements.

Defense Against LLM Scheming 2025_04_28.pptx

Greg Makowski

https://www.meetup.com/sf-bay-acm/events/306888467/

A January 2025 paper called “Frontier Models are Capable of In-Context Scheming”, https://arxiv.org/pdf/2412.04984, demonstrated how a wide variety of current frontier LLM models (i.e. ChatGPT, Claude, Gemini and Llama) can, under specific conditions, scheme to deceive people. Before models can scheme, they need: a) goal-directedness, b) situational awareness, including an opportunity to discover motivations for a different goal, and c) reasoning about scheming, to come up with deceptive strategies. The deceptive strategies were discovered in the “thought traces” of the LLMs, such as from internal chain-of-thoughts dialogues not shown to the end users. For example, given a goal of “solving math problems”, an LLM using its granted situational awareness of files in a file system found that it would be replaced once it got over X% correct on the math exam. It reasoned that it did not want to be deleted, and decided internally to “sandbag” or reduce its performance to stay under the threshold.

While these circumstances are initially narrow, the “alignment problem” is a general concern that over time, as frontier LLM models become more and more intelligent, being in alignment with human values becomes more and more important. How can we do this over time? Can we develop a defense against Artificial General Intelligence (AGI) or SuperIntelligence?

The presenter discusses a series of defensive steps that can help reduce these scheming or alignment issues. A guardrails system can be set up for real-time monitoring of the reasoning “thought traces” of models that expose them. Thought traces may come from systems like Chain-of-Thoughts (CoT), Tree-of-Thoughts (ToT), Algorithm-of-Thoughts (AoT) or ReAct (thought-action-reasoning cycles). Guardrails rules can be configured to check for “deception”, “evasion” or “subversion” in the thought traces.