Writing Apache Spark and Apache Flink Applications Using Apache Bahir

- 1. IBM SparkTechnology Center Apache Big Data Seville 2016 Apache Bahir Writing Applications using Apache Bahir Luciano Resende IBM | Spark Technology Center

- 2. IBM SparkTechnology Center About Me Luciano Resende ([email protected]) • Architect and community liaison at IBM – Spark Technology Center • Have been contributing to open source at ASF for over 10 years • Currently contributing to : Apache Bahir, Apache Spark, Apache Zeppelin and Apache SystemML (incubating) projects 2 @lresende1975 http://lresende.blogspot.com/ https://www.linkedin.com/in/lresendehttp://slideshare.net/luckbr1975lresende

- 3. IBM SparkTechnology Center Origins of the Apache Bahir Project MAY/2016: Established as a top-level Apache Project. • PMC formed by Apache Spark committers/pmc, Apache Members • Initial contributions imported from Apache Spark AUG/2016: Flink community join Apache Bahir • Initial contributions of Flink extensions • In October 2016 Robert Metzger elected committer

- 4. IBM SparkTechnology Center The Apache Bahir name Naming an Apache Project is a science !!! • We needed a name that wasn’t used yet • Needed to be related to Spark We ended up with : Bahir • A name of Arabian origin that means Sparkling, • Also associated with a guy who succeeds at everything 4

- 5. IBM SparkTechnology Center Why Apache Bahir It’s an Apache project • And if you are here, you know what it means What are the benefits of curating your extensions at Apache Bahir • Apache Governance • Apache License • Apache Community • Apache Brand 5

- 6. IBM SparkTechnology Center Why Apache Bahir Flexibility • Release flexibility • Bounded to platform or component release Shared infrastructure • Release, CI, etc Shared knowledge • Collaborate with experts on both platform and component areas 6

- 7. IBM SparkTechnology Center Apache Spark 7

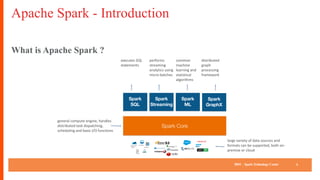

- 8. IBM SparkTechnology Center Apache Spark - Introduction What is Apache Spark ? 8 Spark Core Spark SQL Spark Streaming Spark ML Spark GraphX executes SQL statements performs streaming analytics using micro-batches common machine learning and statistical algorithms distributed graph processing framework general compute engine, handles distributed task dispatching, scheduling and basic I/O functions large variety of data sources and formats can be supported, both on- premise or cloud BigInsights (HDFS) Cloudant dashDB SQL DB

- 9. IBM SparkTechnology Center Apache Spark – Spark SQL 9 Spark Core Spark SQL Spark Streaming Spark ML Spark GraphX ▪Unified data access: Query structured data sets with SQL or Dataset/DataFrame APIs ▪Fast, familiar query language across all of your enterprise dataRDBMS Data Sources Structured Streaming Data Sources

- 10. IBM SparkTechnology Center Apache Spark – Spark SQL You can run SQL statement with SparkSession.sql(…) interface: val spark = SparkSession.builder() .appName(“Demo”) .getOrCreate() spark.sql(“create table T1 (c1 int, c2 int) stored as parquet”) val ds = spark.sql(“select * from T1”) You can further transform the resultant dataset: val ds1 = ds.groupBy(“c1”).agg(“c2”-> “sum”) val ds2 = ds.orderBy(“c1”) The result is a DataFrame / Dataset[Row] ds.show() displays the rows 10

- 11. IBM SparkTechnology Center Apache Spark – Spark SQL You can read from data sources using SparkSession.read.format(…) val spark = SparkSession.builder() .appName(“Demo”) .getOrCreate() case class Bank(age: Integer, job: String, marital: String, education: String, balance: Integer) // loading csv data to a Dataset of Bank type val bankFromCSV = spark.read.csv(“hdfs://localhost:9000/data/bank.csv").as[Bank] // loading JSON data to a Dataset of Bank type val bankFromJSON = spark.read.json(“hdfs://localhost:9000/data/bank.json").as[Bank] // select a column value from the Dataset bankFromCSV.select(‘age).show() will return all rows of column “age” from this dataset. 11

- 12. IBM SparkTechnology Center Apache Spark – Spark SQL You can also configure a specific data source with specific options val spark = SparkSession.builder() .appName(“Demo”) .getOrCreate() case class Bank(age: Integer, job: String, marital: String, education: String, balance: Integer) // loading csv data to a Dataset of Bank type val bankFromCSV = sparkSession.read .option("header", ”true") // Use first line of all files as header .option("inferSchema", ”true") // Automatically infer data types .option("delimiter", " ") .csv("/users/lresende/data.csv”) .as[Bank] bankFromCSV.select(‘age).show() // will return all rows of column “age” from this dataset. 12

- 13. IBM SparkTechnology Center Apache Spark – Spark SQL Data Sources under the covers • Data source registration (e.g. spark.read.datasource) • Provide BaseRelation implementation • That implements support for table scans: • TableScans, PrunedScan, PrunedFilteredScan, CatalystScan • Detailed information available at • http://www.spark.tc/exploring-the-apache-spark-datasource-api/ 13

- 14. IBM SparkTechnology Center Apache Spark – Spark SQL Structured Streaming Unified programming model for streaming, interactive and batch queries 14 Image source: https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html Considers the data stream as unbounded table

- 15. IBM SparkTechnology Center Apache Spark – Spark SQL Structured Streaming SQL regular APIs val spark = SparkSession.builder() .appName(“Demo”) .getOrCreate() val input = spark.read .schema(schema) .format(”csv") .load(”input-path") val result = input .select(”age”) .where(”age > 18”) result.write .format(”json”) . save(” dest-path”) 15 Structured Streaming APIs val spark = SparkSession.builder() .appName(“Demo”) .getOrCreate() val input = spark.readStream .schema(schema) .format(”csv") .load(”input-path") val result = input .select(”age”) .where(”age > 18”) result.write .format(”json”) . startStream(” dest-path”)

- 16. IBM SparkTechnology Center Apache Spark – Spark SQL Structured Streaming 16 Structured Streaming is an ALPHA feature

- 17. IBM SparkTechnology Center Apache Spark – Spark Streaming 17 Spark Core Spark Streaming Spark SQL Spark ML Spark GraphX ▪Micro-batch event processing for near- real time analytics ▪e.g. Internet of Things (IoT) devices, Twitter feeds, Kafka (event hub), etc. ▪No multi-threading or parallel process programming required

- 18. IBM SparkTechnology Center Apache Spark – Spark Streaming Also known as discretized stream or Dstream Abstracts a continuous stream of data Based on micro-batching 18

- 19. IBM SparkTechnology Center Apache Spark – Spark Streaming val sparkConf = new SparkConf() .setAppName("MQTTWordCount") val ssc = new StreamingContext(sparkConf, Seconds(2)) val lines = MQTTUtils.createStream(ssc, brokerUrl, topic, StorageLevel.MEMORY_ONLY_SER_2) val words = lines.flatMap(x => x.split(" ")) val wordCounts = words.map(x => (x, 1)).reduceByKey(_ + _) wordCounts.print() ssc.start() ssc.awaitTermination() 19

- 20. IBM SparkTechnology Center Apache Spark extensions in Bahir MQTT – Enables reading data from MQTT Servers using Spark Streaming or Structured streaming. • http://bahir.apache.org/docs/spark/current/spark-sql-streaming-mqtt/ • http://bahir.apache.org/docs/spark/current/spark-streaming-mqtt/ Twitter – Enables reading social data from twitter using Spark Streaming. • http://bahir.apache.org/docs/spark/current/spark-streaming-twitter/ Akka – Enables reading data from Akka Actors using Spark Streaming. • http://bahir.apache.org/docs/spark/current/spark-streaming-akka/ ZeroMQ – Enables reading data from ZeroMQ using Spark Streaming. • http://bahir.apache.org/docs/spark/current/spark-streaming-zeromq/ 20

- 21. IBM SparkTechnology Center Apache Spark extensions coming soon to Bahir WebHDFS – Enables reading data from remote HDFS file system utilizing Spark SQL APIs • https://issues.apache.org/jira/browse/BAHIR-67 CounchDB / Cloudant– Enables reading data from CounchDB NoSQL document stores using Spark SQL APIs 21

- 22. IBM SparkTechnology Center Apache Spark extensions in Bahir Adding Bahir extensions into your application • Using SBT • libraryDependencies += "org.apache.bahir" %% "spark-streaming-mqtt" % "2.1.0-SNAPSHOT” • Using Maven • <dependency> <groupId>org.apache.bahir</groupId> <artifactId>spark-streaming-mqtt_2.11 </artifactId> <version>2.1.0-SNAPSHOT</version> </dependency> 22

- 23. IBM SparkTechnology Center Apache Spark extensions in Bahir Submitting applications with Bahir extensions to Spark • Spark-shell • bin/spark-shell --packages org.apache.bahir:spark-streaming_mqtt_2.11:2.1.0-SNAPSHOT ….. • Spark-submit • bin/spark-submit --packages org.apache.bahir:spark-streaming_mqtt_2.11:2.1.0-SNAPSHOT ….. 23

- 24. IBM SparkTechnology Center Apache Flink 24

- 25. IBM SparkTechnology Center Apache Flink extensions in Bahir Flink platform extensions added recently • https://github.com/apache/bahir-flink First release coming soon • Release discussions have started • Finishing up some basic documentation and examples • Should be available soon 25

- 26. IBM SparkTechnology Center Apache Flink extensions in Bahir ActiveMQ – Enables reading and publishing data from ActiveMQ servers • https://github.com/apache/bahir-flink/blob/master/flink-connector-activemq/README.md Flume– Enables publishing data to Apache Flume • https://github.com/apache/bahir-flink/tree/master/flink-connector-flume Redis – Enables reading data to Redis and publishing data to Redis PubSub • https://github.com/apache/bahir-flink/blob/master/flink-connector-redis/README.md 26

- 27. IBM SparkTechnology Center Live Demo 27

- 28. IBM SparkTechnology Center IoT Simulation using MQTT The demo environment https://github.com/lresende/bahir-iot-demo 28 Docker environment Mosquitto MQTT Server Node.js Webapplication Simulates Elevator IoT devices Elevator simulator Metrics: - Weight - Speed - Power - Temperature - System

- 29. IBM SparkTechnology Center Join the Apache Bahir community !!! 29

- 30. IBM SparkTechnology Center References Apache Bahir http://bahir.apache.org Documentation for Apache Spark extensions http://bahir.apache.org/docs/spark/current/documentation/ Source Repositories https://github.com/apache/bahir https://github.com/apache/bahir-flink https://github.com/apache/bahir-website 30 Image source: http://az616578.vo.msecnd.net/files/2016/03/21/6359412499310138501557867529_thank-you-1400x800-c-default.gif

![IBM SparkTechnology Center

Apache Spark – Spark SQL

You can run SQL statement with SparkSession.sql(…) interface:

val spark = SparkSession.builder()

.appName(“Demo”)

.getOrCreate()

spark.sql(“create table T1 (c1 int, c2 int) stored as parquet”)

val ds = spark.sql(“select * from T1”)

You can further transform the resultant dataset:

val ds1 = ds.groupBy(“c1”).agg(“c2”-> “sum”)

val ds2 = ds.orderBy(“c1”)

The result is a DataFrame / Dataset[Row]

ds.show() displays the rows

10](https://image.slidesharecdn.com/apachebigdata-apachebahir-161116210757/85/Writing-Apache-Spark-and-Apache-Flink-Applications-Using-Apache-Bahir-10-320.jpg)

![IBM SparkTechnology Center

Apache Spark – Spark SQL

You can read from data sources using SparkSession.read.format(…)

val spark = SparkSession.builder()

.appName(“Demo”)

.getOrCreate()

case class Bank(age: Integer, job: String, marital: String, education: String, balance: Integer)

// loading csv data to a Dataset of Bank type

val bankFromCSV = spark.read.csv(“hdfs://localhost:9000/data/bank.csv").as[Bank]

// loading JSON data to a Dataset of Bank type

val bankFromJSON = spark.read.json(“hdfs://localhost:9000/data/bank.json").as[Bank]

// select a column value from the Dataset

bankFromCSV.select(‘age).show() will return all rows of column “age” from this dataset.

11](https://image.slidesharecdn.com/apachebigdata-apachebahir-161116210757/85/Writing-Apache-Spark-and-Apache-Flink-Applications-Using-Apache-Bahir-11-320.jpg)

![IBM SparkTechnology Center

Apache Spark – Spark SQL

You can also configure a specific data source with specific options

val spark = SparkSession.builder()

.appName(“Demo”)

.getOrCreate()

case class Bank(age: Integer, job: String, marital: String, education: String, balance: Integer)

// loading csv data to a Dataset of Bank type

val bankFromCSV = sparkSession.read

.option("header", ”true") // Use first line of all files as header

.option("inferSchema", ”true") // Automatically infer data types

.option("delimiter", " ")

.csv("/users/lresende/data.csv”)

.as[Bank]

bankFromCSV.select(‘age).show() // will return all rows of column “age” from this dataset.

12](https://image.slidesharecdn.com/apachebigdata-apachebahir-161116210757/85/Writing-Apache-Spark-and-Apache-Flink-Applications-Using-Apache-Bahir-12-320.jpg)