YAML Tips For Kubernetes by Neependra Khare


Learn the basics of YAML, such as spacing, maps, and lists, along with tips and tricks that will help you write and verify YAML files for Kubernetes.


![Some Issues With YAML

● Define the YAML

country_codes:
  united_states: us
  ireland: ie
  norway: no

● Load the YAML in Ruby

require 'yaml'

doc = <<-ENDYAML
country_codes:
  united_states: us
  ireland: ie
  norway: no
ENDYAML

puts YAML.load(doc)

● Output

{"country_codes"=>{"united_states"=>"us", "ireland"=>"ie", "norway"=>false}}

YAML Reference

● Define

name: &speaker Neependra
presentation:
  name: AKD
  speaker: *speaker

● Reference Later

name: "Neependra"
presentation:
  name: "AKD"
  speaker: "Neependra"

● What would happen with this?

a: &a ["a", "a", "a"]

b: &b [*a,*a,*a]

c: &c [*b, *b, *b]](https://image.slidesharecdn.com/yamltipstricksforkubernetes-210202104515/85/YAML-Tips-For-Kubernetes-by-Neependra-Khare-5-320.jpg)
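The "What would happen with this?" question on the slide can be checked directly. Below is a minimal sketch in Ruby, assuming a Ruby version whose bundled YAML library accepts the `aliases:` keyword on `YAML.safe_load` (Ruby 2.6 or later). The helper names `load_with_aliases` and `leaf_count` are hypothetical and exist only for this illustration. It suggests that every alias resolves to the whole anchored list, so `b` is a list of three lists and `c` ends up describing 27 leaf strings from three short lines; each further level would triple that number again.

```ruby
require 'yaml'

# The three-line document from the slide.
ALIAS_DOC = <<~DOC
  a: &a ["a", "a", "a"]
  b: &b [*a, *a, *a]
  c: &c [*b, *b, *b]
DOC

# Hypothetical helper: parse a YAML string with aliases explicitly allowed.
def load_with_aliases(text)
  YAML.safe_load(text, aliases: true)
end

# Hypothetical helper: count the scalar leaves of a nested array.
def leaf_count(node)
  node.is_a?(Array) ? node.sum { |child| leaf_count(child) } : 1
end

data = load_with_aliases(ALIAS_DOC)

puts leaf_count(data['a']) # 3
puts leaf_count(data['b']) # 9
puts leaf_count(data['c']) # 27

# An alias refers to the same parsed object, not to a copy of it.
puts data['b'][0].equal?(data['a']) # true
```

Because the growth is exponential in the number of levels, parsers commonly restrict or disable aliases when loading untrusted input, which is why the sketch has to opt in with `aliases: true`.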

![Why YAML over JSON ?

● YAML is a superset of JSON

● More readable

● Takes less space

● Allows comments

apiVersion: v1
kind: Pod
metadata:
  name: mypod #name of the Pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo
    image: nginx:alpine
    ports:
    - containerPort: 80

{
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {
    "name": "mypod",
    "labels": {
      "app": "nginx"
    }
  },
  "spec": {
    "containers": [
      {
        "name": "nginx-demo",
        "image": "nginx:alpine",
        "ports": [
          {
            "containerPort": 80
          }
        ]
      }
    ]
  }
}

Configuration in YAML Configuration in JSON](https://image.slidesharecdn.com/yamltipstricksforkubernetes-210202104515/85/YAML-Tips-For-Kubernetes-by-Neependra-Khare-7-320.jpg)
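The superset claim on this slide can be tried out with a short script. The following is a sketch, not a proof: it uses Ruby's standard `yaml` and `json` libraries, and the constant names `POD_YAML` and `POD_JSON` are assumptions introduced here. It loads the YAML and JSON versions of the Pod from the slide and compares the resulting data structures, then feeds the JSON text to the YAML parser.

```ruby
require 'yaml'
require 'json'

# The Pod from the slide, written as YAML (comment included).
POD_YAML = <<~DOC
  apiVersion: v1
  kind: Pod
  metadata:
    name: mypod # name of the Pod
    labels:
      app: nginx
  spec:
    containers:
    - name: nginx-demo
      image: nginx:alpine
      ports:
      - containerPort: 80
DOC

# The same Pod written as JSON.
POD_JSON = <<~DOC
  {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
      "name": "mypod",
      "labels": { "app": "nginx" }
    },
    "spec": {
      "containers": [
        {
          "name": "nginx-demo",
          "image": "nginx:alpine",
          "ports": [ { "containerPort": 80 } ]
        }
      ]
    }
  }
DOC

from_yaml     = YAML.safe_load(POD_YAML)
from_json     = JSON.parse(POD_JSON)
json_via_yaml = YAML.safe_load(POD_JSON) # JSON text handed to the YAML parser

puts from_yaml == from_json     # true
puts json_via_yaml == from_json # true
```

Both comparisons print `true` for this document: the comment is dropped at parse time, and the JSON braces and brackets are read as YAML flow mappings and sequences. Unusual JSON inputs may still behave differently across YAML parsers and versions, so this is best read as an illustration for typical manifests.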

![Tip#2 Use Quotes

apiVersion: v1
kind: Pod
metadata:
  name: mypod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-demo
    image: nginx:alpine
    env:
    - name: INDIA
      value: IN
    - name: NORWAY
      value: NO
    ports:
    - containerPort: 80

root@master:~# k apply -f pod.yaml

Error from server (BadRequest): error when creating "pod.yaml": Pod in version "v1" cannot be handled as a Pod: v1.Pod.Spec: v1.PodSpec.Containers: []v1.Container: v1.Container.Env: []v1.EnvVar: v1.EnvVar.Value: ReadString: expects " or n, but found f, error found in #10 byte of ...|,"value":false}],"im|..., bigger context ...|":"INDIA","value":"IN"},{"name":"NORWAY","value":false}],"image":"nginx:alpine","name":"nginx-demo",|...](https://image.slidesharecdn.com/yamltipstricksforkubernetes-210202104515/85/YAML-Tips-For-Kubernetes-by-Neependra-Khare-14-320.jpg)
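The error above comes from `NO` being read as a boolean, so the API server receives `false` where it expects a string. The sketch below reproduces the effect and the fix with Ruby's YAML library, used here only as a stand-in for the parser behind `kubectl` (an assumption; the two are different implementations that happen to agree on this case). The helper name `non_string_env_names` is hypothetical.

```ruby
require 'yaml'

# The env section from the slide, unquoted.
UNQUOTED_ENV = <<~DOC
  env:
  - name: INDIA
    value: IN
  - name: NORWAY
    value: NO
DOC

# The same section with the values quoted, as the tip suggests.
QUOTED_ENV = <<~DOC
  env:
  - name: INDIA
    value: "IN"
  - name: NORWAY
    value: "NO"
DOC

# Hypothetical helper: names of env entries whose value did not load as a String.
def non_string_env_names(text)
  YAML.safe_load(text)['env']
      .reject { |entry| entry['value'].is_a?(String) }
      .map { |entry| entry['name'] }
end

puts non_string_env_names(UNQUOTED_ENV).inspect # ["NORWAY"]
puts non_string_env_names(QUOTED_ENV).inspect   # []
```

A check like this could run before `kubectl apply` to flag environment values that would not reach the API server as strings. Quoting every `value` is the simpler habit, since it also protects strings such as `yes`, `on`, and `off`.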



Similar to YAML Tips For Kubernetes by Neependra Khare (20)

K8s Pod Scheduling - Deep Dive. By Tsahi Duek.

K8s Pod Scheduling - Deep Dive. By Tsahi Duek.Cloud Native Day Tel Aviv Ever wondered how the K8s scheduler works, and how can you “help” it make the right decision for your application? In this session, we'll cover several different scheduling use-cases in K8s, what scheduling techniques are required in each and when to use them.

Optimizing {Java} Application Performance on Kubernetes

Optimizing {Java} Application Performance on KubernetesDinakar Guniguntala The document discusses various techniques for optimizing Java application performance on Kubernetes, including:

1. Using optimized container images that are small in size and based on Alpine Linux.

2. Configuring the JVM to use container resources efficiently through settings like -XX:MaxRAMPercentage instead of hardcoded heap sizes.

3. Setting appropriate resource requests and limits for pods to ensure performance isolation and prevent throttling.

4. Leveraging tools like Kubernetes' Horizontal Pod Autoscaler and Pod Disruption Budget for scaling and high availability.

5. Tuning both software and hardware settings for optimal performance of Java workloads on Kubernetes.

New Features of Kubernetes v1.2.0 beta

New Features of Kubernetes v1.2.0 betaGiragadurai Vallirajan The version 1.2.0-beta.0 of Kubernetes included improvements to scalability with more nodes and pods per node. It stabilized and added features from version 1.1, including the Horizontal Pod Autoscaling, Ingress, Job, DaemonSet, Deployment, ConfigMap, and Secret APIs. It also made it easier to generate resources like namespaces and secrets.

Beam on Kubernetes (ApacheCon NA 2019)

Beam on Kubernetes (ApacheCon NA 2019)Micah Wylde Access to real-time data is increasingly important for many organizations. At Lyft, we process millions of events per second in real-time to compute prices, balance marketplace dynamics, detect fraud, among many other use cases. To do so, we run dozens of Apache Flink and Apache Beam pipelines. Flink provides a powerful framework that makes it easy for non-experts to write correct, high-scale streaming jobs, while Beam extends that power to our large base of Python programmers.

Historically, we have run Flink clusters on bare, custom-managed EC2 instances. In order to achieve greater elasticity and reliability, we decided to rebuild our streaming platform on top of Kubernetes. In this session, I’ll cover how we designed and built an open-source Kubernetes operator for Flink and Beam, some of the unique challenges of running a complex, stateful application on Kubernetes, and some of the lessons we learned along the way.

Rook - cloud-native storage

Rook - cloud-native storageKarol Chrapek This document discusses Rook, an open source cloud-native storage orchestrator for Kubernetes. It provides an overview of Rook's features and capabilities, describes how to easily deploy Rook and a Ceph cluster using Helm, and discusses some challenges encountered with resizing volumes and Rook's integration with Kubernetes nodes. While Rook automates many administration tasks, it requires a stable environment and infrastructure. Common issues include device filtering, dynamically resizing volumes, LVM discovery, and using external Ceph clusters.

Interop2018 contrail ContrailEnterpriseMulticloud

Daisuke Nakajima

Slides on Contrail Enterprise Multicloud presented at Interop Tokyo 2018.

Explains networking challenges in Kubernetes/OpenShift environments.

Solutions with TungstenFabric/Contrail.

Opa gatekeeper

Rita Zhang

This document discusses OPA Gatekeeper, which is an admission webhook that helps enforce policies and strengthen governance in Kubernetes clusters. It provides customizable admission controls via configuration instead of code. Gatekeeper uses the Open Policy Agent (OPA) to evaluate policies written in Rego against objects in the Kubernetes API. It started as kube-mgmt and has evolved through several versions. Gatekeeper allows defining policies as templates with parameters and matching rules, and instances of those policies that are enforced as custom resources. It provides capabilities like auditing, CI/CD integration, and replicating cluster state for offline policy checking. The document demonstrates example policies and invites the reader to get involved in the open source project.

Apache Spark Streaming in K8s with ArgoCD & Spark Operator

Databricks

Over the last year, we have been moving from a batch processing setup with Airflow on EC2 instances to a powerful and scalable setup using Airflow and Spark in K8s.

The growing need to keep up with technology changes, new community advances, and multidisciplinary teams forced us to design a solution in which we could run multiple Spark versions at the same time while avoiding duplicated infrastructure and simplifying deployment, maintenance, and development.

Optimizing Application Performance on Kubernetes

Dinakar Guniguntala

Now that you have your apps running on K8s, are you wondering how to get the response time that you need? Tuning applications to get the performance that you need can be challenging. When you have to tune a number of microservices in Kubernetes to fix a response time or a throughput issue, it can get really overwhelming. This talk looks at some common performance issues and ways to solve them and, more importantly, the tools that can help you. We will also look specifically at Kruize, which helps not only to right-size your containers but also to optimize the runtimes.

CI/CD Across Multiple Environments

Karl Isenberg

Speakers: Vic Iglesias, Benjamin Good, Karl Isenberg

Venue: Google Cloud Next '19

Video: https://www.youtube.com/watch?v=rt287-94Pq4

Continuous Integration and Delivery allows companies to quickly iterate on and deploy their ideas to customers. In doing so, they should strive to have environments that closely match production. Using Kubernetes as the target platform across cloud providers and on-premises environments can help to mitigate some difficulties when ensuring environment parity but many other concerns can arise.

In this talk we will dive into the tools and methodologies available to ensure your code and deployment artifacts can smoothly transition among the various people, environments, and platforms that make up your CI/CD process.

K8s best practices from the field!

DoiT International

Learn from dozens of large-scale deployments how to get the most out of your Kubernetes environment:

- Container images optimization

- Organizing namespaces

- Readiness and Liveness probes

- Resource requests and limits

- Failing with grace

- Mapping external services

- Upgrading clusters with zero downtime

Deploying on Kubernetes - An intro

André Cruz

So, you have developed your application, and have built containers for each of its components, now you need to deploy them in a container orchestration platform. You have heard about Kubernetes, but have yet to take the plunge and learn how to use it effectively. Say no more fam.

In this talk I will start by describing the Kubernetes resources, how they map to usual application components, and best practices on Kubernetes deployments.

Operator Lifecycle Management

DoKC

Link: https://youtu.be/_lQhoCUQReU

https://go.dok.community/slack

https://dok.community/

From the DoK Day EU 2022 (https://youtu.be/Xi-h4XNd5tE)

The ability to extend Kubernetes with Custom Resource Definitions and respective controllers has led to the OperatorSDK, which became the de facto standard for data service automation on Kubernetes. There are countless operator implementations available, and new operators are being released on a daily basis. Organizations managing hundreds of Kubernetes clusters for dozens of developer teams are also challenged to manage the lifecycle of hundreds of Kubernetes operators. The goal is to keep the operational overhead to a minimum.

In this talk, a closer look into the lifecycle of operators will be presented. With an understanding of how operators evolve, it becomes clear what challenges arise during operator upgrades. A brief overview of lifecycle management tools such as Helm, OLM, and Carvel is presented in this context. In particular, it will be discussed whether these tools can help, which restrictions apply and where further development would be desirable.

At the end of this talk, you will know what operator lifecycle management is about, what its challenges are, and which tools may be used to reduce operational friction.

-----

Julian Fischer, CEO of anynines, has dedicated his career to the automation of software operations. In more than fifteen years, he has built several application platforms. He has been using Kubernetes, Cloud Foundry, and BOSH in recent years. Within platform automation, Julian has a strong focus on data service automation at scale.

Operator Lifecycle Management

DoKC

In this talk, a closer look into the lifecycle of operators will be presented. With an understanding of how operators evolve, it becomes clear what challenges arise during operator upgrades. A brief overview of lifecycle management tools such as Helm, OLM, and Carvel is presented in this context. In particular, it will be discussed whether these tools can help, which restrictions apply and where further development would be desirable.

At the end of this talk, you will know what operator lifecycle management is about, what its challenges are, and which tools may be used to reduce operational friction.

This talk was given by Julian Fischer for DoK Day Europe @ KubeCon 2022.

Dynamic Large Scale Spark on Kubernetes: Empowering the Community with Argo W...

DoKC

Dynamic Large Scale Spark on Kubernetes: Empowering the Community with Argo Workflows and Argo Events - Ovidiu Valeanu, AWS & Vara Bonthu, Amazon

Are you eager to build and manage large-scale Spark clusters on Kubernetes for powerful data processing? Whether you are starting from scratch or considering migrating Spark workloads from existing Hadoop clusters to Kubernetes, the challenges of configuring storage, compute, networking, and optimizing job scheduling can be daunting. Join us as we unveil the best practices to construct scalable Spark clusters on Kubernetes, with a special emphasis on leveraging Argo Workflows and Argo Events. In this talk, we will guide you through the journey of building highly scalable Spark clusters on Kubernetes, using the most popular open-source tools. We will showcase how to harness the potential of Argo Workflows and Argo Events for event-driven job scheduling, enabling efficient resource utilization and seamless scalability. By integrating these powerful tools, you will gain better control and flexibility for executing Spark jobs on Kubernetes.

Docker on docker leveraging kubernetes in docker ee

Docker, Inc.

This document summarizes Docker's experience dogfooding Docker Enterprise Edition 2.0 which focuses on Kubernetes. Key aspects covered include planning the migration process over several months, preparing infrastructure to support both Swarm and Kubernetes workloads, upgrading control plane components, and migrating select internal applications to Kubernetes. Benefits realized include leveraging Kubernetes features like pods, cronjobs, and the ability to provide feedback that improved the product before general release.

Introducing Dapr.io - the open source personal assistant to microservices and...

Lucas Jellema

Dapr.io is an open source product that originated at Microsoft and has been embraced by a broad coalition of cloud suppliers (part of CNCF) and open source projects. Dapr is a runtime framework that can support any application and that especially shines with distributed applications - for example microservices - that run in containers, spread over clouds and / or edge devices.

With Dapr you give an application a "sidecar" - a kind of personal assistant that takes care of all kinds of common responsibilities. Capturing and retrieving state, publishing and consuming messages or events. Reading secrets and configuration data. Shielding and load balancing over service endpoints. Calling and subscribing to all kinds of SaaS and PaaS facilities. Logging traces across all kinds of application components and logically routing calls between microservices and other application components. Dapr provides generic APIs to the application (HTTP and gRPC) for calling all these generic services – and provides implementations of these APIs for all public clouds and dozens of technology components. This means that your application can easily make use of a wide range of relevant features - with a strict separation between the language the application uses for this (generic, simple) and the configuration of the specific technology (e.g. Redis, MySQL, CosmosDB, Cassandra, PostgreSQL, Oracle Database, MongoDB, Azure SQL etc) that the Dapr sidecar uses. Changing technology does not affect the application, but affects the configuration of the Sidecar. Dapr can be used from applications in any technology - from Java and C#/.NET to Go, Python, Node, Rust and PHP. Or whatever can talk HTTP (or gRPC).

In this Code Café I will introduce you to Dapr.io. I will show you what Dapr can do for you (and your application) and how you can Dapr-ize an application. I'll show you how an asynchronously collaborative system of microservices - implemented in different technologies - can be easily connected to Dapr, first to Redis as a Pub/Sub mechanism and then also to Apache Kafka without modifications. Then, with the interested parties, we also do a hands-on in which you will apply Dapr yourself. In a short time you get a good feel for how you can use Dapr for different aspects of your applications. And if nothing else, Dapr is a very easy way to get your code talking to Kafka, S3, Redis, Azure EventGrid, HashiCorp Consul, Twilio, Pulsar, RabbitMQ, HashiCorp Vault, AWS Secret Manager, Azure KeyVault, Cron, SMTP, Twitter, AWS SQS & SNS, GCP Pub/Sub and dozens of other technology components.

Scaling docker with kubernetes

Liran Cohen

Kubernetes is a container cluster manager that aims to provide a platform for automating deployment, scaling, and operations of application containers across clusters of machines. It uses pods as the basic building block, which are groups of application containers that share storage and networking resources. Kubernetes includes control planes for replication, scheduling, and services to expose applications. It supports deployment of multi-tier applications through replication controllers, services, labels, and pod templates.

Ansiblefest 2018 Network automation journey at roblox

Damien Garros

In December 2017, Roblox’s network was managed in a traditional way without automation.

To sustain its growth, the team had to deploy 2 datacenters, a global network and multiple points of presence around the world in a few months. The only way to achieve that was to automate everything.

6 months later, the team has made tremendous progress and many aspects of the network lifecycle have been automated, from the routers and switches to the load balancers.

Synopsis

This talk is a retrospective of Roblox’s journey into Network automation:

How we got started and how we automated an existing network.

How we organized the project around GitHub and a DCIM/IPAM solution (netbox).

How Docker helped us to package Ansible and create a consistent environment.

How we managed many roles and variations of our design in a single project.

How we have automated the provisioning of our F5 Load Balancers.

For each point, we’ll cover what was successful, what was more challenging and what limitations we had to deal with.

An Introduction to Project riff, a FaaS Built on Top of Knative - Eric Bottard

VMware Tanzu

SpringOne Tour by Pivotal

More from CodeOps Technologies LLP (20)

AWS Serverless Event-driven Architecture - in lastminute.com meetup

CodeOps Technologies LLP

This document discusses using event-driven architectures and serverless computing with AWS services. It begins with defining event-driven architectures and how serverless architectures relate to them. It then outlines several AWS services like EventBridge, Step Functions, SQS, SNS, and Lambda that are well-suited for building event-driven applications. The document demonstrates using S3, DynamoDB, API Gateway and other services to build a serverless hotel data ingestion and shopping platform that scales independently for static and dynamic data. It shows how to upload, store, and stream hotel data and expose APIs using serverless AWS services in an event-driven manner.

Understanding azure batch service

CodeOps Technologies LLP

This document provides an overview of the Azure Batch Service, including its core features, architecture, and monitoring capabilities. It discusses how Azure Batch allows uploading batch jobs to the cloud to be executed and managed, covering concepts like job scheduling, resource management, and process monitoring. The document also demonstrates Azure Batch usage through the Azure portal and Batch Explorer tool and reviews quotas and limits for Batch accounts, pools, jobs, and other resources.

DEVOPS AND MACHINE LEARNING

CodeOps Technologies LLP

In this session, we will take a deep-dive into the DevOps process that comes with Azure Machine Learning service, a cloud service that you can use to track as you build, train, deploy and manage models. We zoom into how the data science process can be made traceable and deploy the model with Azure DevOps to a Kubernetes cluster.

At the end of this session, you will have a good grasp of the technological building blocks of Azure machine learning services and can bring a machine learning project safely into production.

SERVERLESS MIDDLEWARE IN AZURE FUNCTIONS

CodeOps Technologies LLP

When it comes to microservice architecture, sometimes all you want is to handle cross-cutting concerns (logging, authentication, caching, CORS, routing, load balancing, exception handling, tracing, resiliency, etc.), and there may also be scenarios where you want to manipulate the request payload before it reaches your actual handler. This should not be repeated code in each service, so what you need is a single place to orchestrate all these concerns, and that is where middleware comes into the picture. In the demo I will cover how to orchestrate these cross-cutting concerns by using Azure Functions as a serverless model.

BUILDING SERVERLESS SOLUTIONS WITH AZURE FUNCTIONS

CodeOps Technologies LLP

In this talk, we will start with an introduction to Azure Functions, its triggers and bindings. Later we will build a serverless solution to solve a problem statement by using different triggers and bindings of Azure Functions.

Language to be used: C# and IDE - Visual Studio 2019 Community Edition

APPLYING DEVOPS STRATEGIES ON SCALE USING AZURE DEVOPS SERVICES

CodeOps Technologies LLP

In this workshop, you will understand how Azure DevOps Services helps you scale DevOps adoption strategies in the enterprise. We will explore various features and services that enable you to implement DevOps practices, starting from planning, version control, CI & CD, dependency management and test planning.

BUILD, TEST & DEPLOY .NET CORE APPS IN AZURE DEVOPS

CodeOps Technologies LLP

In this session, we will understand how to create your first pipeline and build an environment to restore dependencies and how to run tests in Azure DevOps followed by building an image and pushing it to container registry.

CREATE RELIABLE AND LOW-CODE APPLICATION IN SERVERLESS MANNER

CodeOps Technologies LLP

In this session, we will discuss a use case where we need to quickly develop web and mobile front-end applications that use several different frameworks, hosting options, and complex integrations between systems under the hood. Let’s see how we can leverage serverless technologies (Azure Functions and Logic Apps) and a low-code/no-code platform to achieve the goal. During the session we will go through the code, followed by a demonstration.

CREATING REAL TIME DASHBOARD WITH BLAZOR, AZURE FUNCTION COSMOS DB AN AZURE S...

CodeOps Technologies LLP

In this talk, you will learn how to use the change feed feature of Cosmos DB together with Azure Functions and SignalR Service to develop a real-time dashboard system.

WRITE SCALABLE COMMUNICATION APPLICATION WITH POWER OF SERVERLESS

CodeOps Technologies LLP

Imagine a scenario where you can launch a video call or chat with an advisor, agent, or clinician in just one click. We will explore application patterns that enable you to write event-driven, resilient and highly scalable applications with Functions, along with the power of an engaging communication experience at scale. During the session, we will go through the use case along with a code walkthrough and demonstration.

Training And Serving ML Model Using Kubeflow by Jayesh Sharma

CodeOps Technologies LLP

We will walk through the exploration, training and serving of a machine learning model by leveraging Kubeflow's main components. We will use Jupyter notebooks on the cluster to train the model and then introduce Kubeflow Pipelines to chain all the steps together, to automate the entire process.

Deploy Microservices To Kubernetes Without Secrets by Reenu Saluja

CodeOps Technologies LLP

Inner-loop Kubernetes development is difficult in its current state. Learn about open-source projects that can save us from the burden of juggling various tools and help in deploying microservices on a Kubernetes cluster without saving secrets in a file.

Leverage Azure Tech stack for any Kubernetes cluster via Azure Arc by Saiyam ...

CodeOps Technologies LLP

Learn the concepts of Azure Arc through a demo on how to connect an existing Kubernetes cluster to Azure via Azure Arc.

Must Know Azure Kubernetes Best Practices And Features For Better Resiliency ...

CodeOps Technologies LLP

Running day-1 Ops on your Kubernetes cluster is fairly easy, but managing day-2 challenges is quite daunting. Learn about AKS best practices for your cloud-native applications so that you can avoid blowing up your workloads.

Monitor Azure Kubernetes Cluster With Prometheus by Mamta Jha

CodeOps Technologies LLP

Prometheus is a popular open source metric monitoring solution and Azure Monitor provides a seamless onboarding experience to collect Prometheus metrics. Learn how to configure scraping of Prometheus metrics with Azure Monitor for containers running in an AKS cluster.

Jet brains space intro presentation

CodeOps Technologies LLP

What if you could combine Trello, GitLab, JIRA, Calendar, Slack, Confluence, and more - all together into one solution?

Yes, we are talking about Space - the latest tool from JetBrains, famous for its developer productivity-enhancing tools (esp. IntelliJ IDEA).

Here we explain JetBrains Space and its functionality.

Functional Programming in Java 8 - Lambdas and Streams

CodeOps Technologies LLP

This document provides an overview of functional programming concepts in Java 8 including lambdas and streams. It introduces lambda functions as anonymous functions without a name. Lambdas allow internal iteration over collections using forEach instead of external iteration with for loops. Method references provide a shorthand for lambda functions by "routing" function parameters. Streams in Java 8 enhance the library and allow processing data pipelines in a functional way.

Distributed Tracing: New DevOps Foundation

CodeOps Technologies LLP

This talk will serve as a practical introduction to Distributed Tracing. We will see how we can make best use of open source distributed tracing platforms like Hypertrace with Azure and find the root cause of problems and predict issues in our critical business applications beforehand.

"Distributed Tracing: New DevOps Foundation" by Jayesh Ahire

CodeOps Technologies LLP

This talk serves as a practical introduction to Distributed Tracing. We will see how we can make best use of open source distributed tracing platforms like Hypertrace with Azure and find the root cause of problems and predict issues in our critical business applications beforehand.

Presentation part of Open Source Days on 30 Oct - ossdays.konfhub.com

Improve customer engagement and productivity with conversational ai

CodeOps Technologies LLP

Tailwind Traders' recent internal employee survey showed their employees are frustrated with lengthy processes for simple actions, such as booking vacation and other company benefits. They want to reduce the friction of reviewing and booking vacation so it’s a simple, easy and pleasant process for their employees. In this session you will see how Tailwind Traders applied Conversational AI best practices to simplify the vacation process for their employees. Using the Bot Framework Composer tooling you can quickly build conversation flows, incorporate intelligence services such as QnA Maker and LUIS, test and deploy your virtual assistant to the cloud and embed it where your customers and employees spend their time.

Recently uploaded (20)

Dev Dives: Automate and orchestrate your processes with UiPath Maestro

UiPathCommunity

This session is designed to equip developers with the skills needed to build mission-critical, end-to-end processes that seamlessly orchestrate agents, people, and robots.

📕 Here's what you can expect:

- Modeling: Build end-to-end processes using BPMN.

- Implementing: Integrate agentic tasks, RPA, APIs, and advanced decisioning into processes.

- Operating: Control process instances with rewind, replay, pause, and stop functions.

- Monitoring: Use dashboards and embedded analytics for real-time insights into process instances.

This webinar is a must-attend for developers looking to enhance their agentic automation skills and orchestrate robust, mission-critical processes.

👨🏫 Speaker:

Andrei Vintila, Principal Product Manager @UiPath

This session streamed live on April 29, 2025, 16:00 CET.

Check out all our upcoming Dev Dives sessions at https://community.uipath.com/dev-dives-automation-developer-2025/.

Into The Box Conference Keynote Day 1 (ITB2025)

Ortus Solutions, Corp

This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

Andrew Marnell: Transforming Business Strategy Through Data-Driven Insights

Andrew Marnell

With expertise in data architecture, performance tracking, and revenue forecasting, Andrew Marnell plays a vital role in aligning business strategies with data insights. Andrew Marnell’s ability to lead cross-functional teams ensures businesses achieve sustainable growth and operational excellence.

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep Dive

ScyllaDB

Want to learn practical tips for designing systems that can scale efficiently without compromising speed?

Join us for a workshop where we’ll address these challenges head-on and explore how to architect low-latency systems using Rust. During this free interactive workshop oriented for developers, engineers, and architects, we’ll cover how Rust’s unique language features and the Tokio async runtime enable high-performance application development.

As you explore key principles of designing low-latency systems with Rust, you will learn how to:

- Create and compile a real-world app with Rust

- Connect the application to ScyllaDB (NoSQL data store)

- Negotiate tradeoffs related to data modeling and querying

- Manage and monitor the database for consistently low latencies

Role of Data Annotation Services in AI-Powered Manufacturing

Andrew Leo

From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

Cyber Awareness overview for 2025 month of security

riccardosl1

Cyber awareness training educates employees on the risks associated with the internet and malicious emails.

2025-05-Q4-2024-Investor-Presentation.pptx

Samuele Fogagnolo

Cloudflare Q4 Financial Results Presentation

Quantum Computing Quick Research Guide by Arthur Morgan

Arthur Morgan

This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Drupalcamp Finland – Measuring Front-end Energy Consumption

Exove

How to measure web front-end energy consumption using Firefox Profiler. Presented at DrupalCamp Finland on April 25th, 2025.

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptx

Justin Reock

Building 10x Organizations with Modern Productivity Metrics

10x developers may be a myth, but 10x organizations are very real, as proven by the influential study performed in the 1980s, ‘The Coding War Games.’

Right now, here in early 2025, we seem to be experiencing YAPP (Yet Another Productivity Philosophy), and that philosophy is converging on developer experience. It seems that with every new method we invent for the delivery of products, whether physical or virtual, we reinvent productivity philosophies to go alongside them.

But which of these approaches actually work? DORA? SPACE? DevEx? What should we invest in and create urgency behind today, so that we don’t find ourselves having the same discussion again in a decade?

Big Data Analytics Quick Research Guide by Arthur Morgan

Arthur Morgan

This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Impelsys Inc.

Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

Alan Dix

Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and educations, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

Special Meetup Edition - TDX Bengaluru Meetup #52.pptxshyamraj55 We’re bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdf

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdfAbi john Analyze the growth of meme coins from mere online jokes to potential assets in the digital economy. Explore the community, culture, and utility as they elevate themselves to a new era in cryptocurrency.

Linux Support for SMARC: How Toradex Empowers Embedded Developers

Linux Support for SMARC: How Toradex Empowers Embedded DevelopersToradex Toradex brings robust Linux support to SMARC (Smart Mobility Architecture), ensuring high performance and long-term reliability for embedded applications. Here’s how:

• Optimized Torizon OS & Yocto Support – Toradex provides Torizon OS, a Debian-based easy-to-use platform, and Yocto BSPs for customized Linux images on SMARC modules.

• Seamless Integration with i.MX 8M Plus and i.MX 95 – Toradex SMARC solutions leverage NXP’s i.MX 8 M Plus and i.MX 95 SoCs, delivering power efficiency and AI-ready performance.

• Secure and Reliable – With Secure Boot, over-the-air (OTA) updates, and LTS kernel support, Toradex ensures industrial-grade security and longevity.

• Containerized Workflows for AI & IoT – Support for Docker, ROS, and real-time Linux enables scalable AI, ML, and IoT applications.

• Strong Ecosystem & Developer Support – Toradex offers comprehensive documentation, developer tools, and dedicated support, accelerating time-to-market.

With Toradex’s Linux support for SMARC, developers get a scalable, secure, and high-performance solution for industrial, medical, and AI-driven applications.

Do you have a specific project or application in mind where you're considering SMARC? We can help with Free Compatibility Check and help you with quick time-to-market

For more information: https://www.toradex.com/computer-on-modules/smarc-arm-family

Generative Artificial Intelligence (GenAI) in Business

Generative Artificial Intelligence (GenAI) in BusinessDr. Tathagat Varma My talk for the Indian School of Business (ISB) Emerging Leaders Program Cohort 9. In this talk, I discussed key issues around adoption of GenAI in business - benefits, opportunities and limitations. I also discussed how my research on Theory of Cognitive Chasms helps address some of these issues

Splunk Security Update | Public Sector Summit Germany 2025

Splunk Security Update | Public Sector Summit Germany 2025Splunk Splunk Security Update

Sprecher: Marcel Tanuatmadja

YAML Tips For Kubernetes by Neependra Khare

- 1. YAML Tips & Tricks for K8s Neependra Khare, CloudYuga @neependra

- 2. About Me - Neependra Khare

- 3. Agenda
  - YAML Basics
  - K8s & YAML
  - K8s API Reference
  - YAML Tips for K8s

- 4. Yet Another Markup Language

  - YAML Spec: https://yaml.org/spec/1.2/spec.html#id2777534
  - YAML Maps

        ---
        apiVersion: v1
        kind: Pod

  - YAML List

        spec:
          containers:
          - name: myc1
            image: nginx:alpine
          - name: myc2
            image: redis

  - Indentation is done with one or more spaces, as long as it is kept consistent
  - Tabs must not be used for indentation
  - Invoice example from the YAML spec:

        --- !<tag:clarkevans.com,2002:invoice>
        invoice: 34843
        date   : 2001-01-23
        bill-to: &id001
            given  : Chris
            family : Dumars
            address:
                lines: |
                    458 Walkman Dr.
                    Suite #292
                city    : Royal Oak
                state   : MI
                postal  : 48046
        ship-to: *id001
        product:
            - sku         : BL394D
              quantity    : 4
              description : Basketball
              price       : 450.00
            - sku         : BL4438H
              quantity    : 1
              description : Super Hoop
              price       : 2392.00
        tax  : 251.42
        total: 4443.52
        comments: Late afternoon is best.
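To make the map and list terminology concrete, here is a minimal Ruby sketch that loads the Pod fragment from this slide and inspects the resulting types. It assumes Ruby 2.6 or later with the bundled Psych library; the variable names are illustrative only.

```ruby
require 'yaml'

# Pod fragment assembled from the map and list examples on the slide.
doc = <<~ENDYAML
  apiVersion: v1
  kind: Pod
  spec:
    containers:
    - name: myc1
      image: nginx:alpine
    - name: myc2
      image: redis
ENDYAML

pod = YAML.safe_load(doc)

# A YAML map is loaded as a Hash, a YAML list as an Array.
puts pod.class                        # Hash
puts pod['spec']['containers'].class  # Array

# Each list entry is itself a map, so it can be indexed by key.
container_images = pod['spec']['containers'].map { |c| c['image'] }
puts container_images.inspect
```

The two containers come back as two Hash entries in one Array, which is the same nesting that the indentation expresses in the YAML text.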

- 5. Some Issues With YAML

  - Define the YAML

        country_codes:
          united_states: us
          ireland: ie
          norway: no

  - Load the YAML in Ruby

        require 'yaml'
        doc = <<-ENDYAML
        country_codes:
          united_states: us
          ireland: ie
          norway: no
        ENDYAML
        puts YAML.load(doc)

  - Output

        {"country_codes"=>{"united_states"=>"us", "ireland"=>"ie", "norway"=>false}}

  YAML Reference

  - Define

        name: &speaker Neependra
        presentation:
          name: AKD
          speaker: *speaker

  - Reference Later

        name: "Neependra"
        presentation:
          name: "AKD"
          speaker: "Neependra"

  - What would happen with this?

        a: &a ["a", "a", "a"]
        b: &b [*a,*a,*a]
        c: &c [*b, *b, *b]
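One way to explore the closing question on this slide is to load the nested aliases and count the leaf strings at each level. The sketch below assumes Ruby 2.6 or later with Psych; `leaf_counts` is a name introduced here for illustration.

```ruby
require 'yaml'

doc = <<~ENDYAML
  a: &a ["a", "a", "a"]
  b: &b [*a, *a, *a]
  c: &c [*b, *b, *b]
ENDYAML

# safe_load rejects aliases unless they are explicitly enabled.
data = YAML.safe_load(doc, aliases: true)

# Flatten each value to count how many leaf strings it expands to.
leaf_counts = data.transform_values { |value| value.flatten.size }
leaf_counts.each { |key, count| puts "#{key}: #{count} leaf strings" }
```

Under these assumptions the counts are 3, 9 and 27: each extra level of aliasing multiplies the expanded size by three while the document grows by only one line. This is the growth pattern behind the so-called "billion laughs" style of input, and a likely reason why some loaders keep aliases disabled by default.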

- 6. Why are we stuck with YAML? Watch Joe Beda’s talk: I am Sorry about The YAML.

- 7. Why YAML over JSON?

  - YAML is a superset of JSON
  - More readable
  - Takes less space
  - Allows comments

  Configuration in YAML

      apiVersion: v1
      kind: Pod
      metadata:
        name: mypod #name of the Pod
        labels:
          app: nginx
      spec:
        containers:
        - name: nginx-demo
          image: nginx:alpine
          ports:
          - containerPort: 80

  Configuration in JSON

      {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
          "name": "mypod",
          "labels": {
            "app": "nginx"
          }
        },
        "spec": {
          "containers": [
            {
              "name": "nginx-demo",
              "image": "nginx:alpine",
              "ports": [
                {
                  "containerPort": 80
                }
              ]
            }
          ]
        }
      }
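The "superset" claim can be checked informally by feeding the JSON version of the Pod to a YAML parser and comparing the result with the YAML version. This is a sketch assuming Ruby 2.6 or later with the bundled `yaml` and `json` libraries; it demonstrates the idea for this one document and does not prove it for every JSON input.

```ruby
require 'yaml'
require 'json'

yaml_text = <<~ENDYAML
  apiVersion: v1
  kind: Pod
  metadata:
    name: mypod  # name of the Pod
    labels:
      app: nginx
  spec:
    containers:
    - name: nginx-demo
      image: nginx:alpine
      ports:
      - containerPort: 80
ENDYAML

json_text = <<~ENDJSON
  {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": { "name": "mypod", "labels": { "app": "nginx" } },
    "spec": {
      "containers": [
        {
          "name": "nginx-demo",
          "image": "nginx:alpine",
          "ports": [ { "containerPort": 80 } ]
        }
      ]
    }
  }
ENDJSON

from_yaml     = YAML.safe_load(yaml_text)
from_json     = JSON.parse(json_text)
json_via_yaml = YAML.safe_load(json_text) # JSON text handed to the YAML parser

puts from_yaml == from_json     # true: both describe the same object
puts json_via_yaml == from_json # true: the YAML parser accepts the JSON form
```

Note that the comment on the `name` line is dropped during parsing, so it exists only in the YAML source.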

- 9. API Group Core API Group Other API Groups

- 10. Object Model

  - Use a YAML or JSON file to define an object
  - We define the desired state using the spec field

        apiVersion: v1
        kind: Pod
        metadata:
          name: mypod
          namespace: default
        spec:
          containers:
          - name: myc
            image: nginx:alpine

- 11. Object Model

  - Use a YAML or JSON file to define an object
  - We define the desired state using the spec field
  - The status field is managed by Kubernetes and describes the current state of the object

        apiVersion: v1
        kind: Pod
        metadata:
          name: mypod
          namespace: default
        spec:
          containers:
          - name: myc
            image: nginx:alpine
        status:
          ……
          ……

- 12. Tip #1 Combine Multiple YAML files into One

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: rsvp
      spec:
        replicas: 1
        ...

- 13. Tip #1 Combine Multiple YAML files into One

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: rsvp
      spec:
        replicas: 1
        ...
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: rsvp
      …
      ---
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        …..
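To see how the `---` separator splits one file into several objects, the following sketch reads a combined manifest as a stream of documents. It assumes Ruby with Psych; only the fields visible on the slide are included, and the parts the slide elides are left out rather than guessed.

```ruby
require 'yaml'

# Three documents in one stream, separated by '---'.
# Fields elided on the slide are omitted here on purpose.
manifest = <<~ENDYAML
  apiVersion: apps/v1
  kind: Deployment
  metadata:
    name: rsvp
  ---
  apiVersion: v1
  kind: Service
  metadata:
    name: rsvp
  ---
  apiVersion: networking.k8s.io/v1
  kind: Ingress
ENDYAML

# load_stream returns one Ruby object per YAML document.
documents = YAML.load_stream(manifest)
kinds = documents.map { |document| document['kind'] }

puts documents.size  # 3
puts kinds.inspect   # ["Deployment", "Service", "Ingress"]
```

Each document is parsed independently, which is why a tool that reads such a file can treat every section as a separate object.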

- 14. Tip #2 Use Quotes

      apiVersion: v1
      kind: Pod
      metadata:
        name: mypod
        labels:
          app: nginx
      spec:
        containers:
        - name: nginx-demo
          image: nginx:alpine
          env:
          - name: INDIA
            value: IN
          - name: NORWAY
            value: NO
          ports:
          - containerPort: 80

  Applying this manifest fails, because the unquoted NO is read as the boolean false while an env value must be a string:

      root@master:~# k apply -f pod.yaml
      Error from server (BadRequest): error when creating "pod.yaml": Pod in version "v1" cannot be handled as a Pod: v1.Pod.Spec: v1.PodSpec.Containers: []v1.Container: v1.Container.Env: []v1.EnvVar: v1.EnvVar.Value: ReadString: expects " or n, but found f, error found in #10 byte of ...|,"value":false}],"im|..., bigger context ...|":"INDIA","value":"IN"},{"name":"NORWAY","value":false}],"image":"nginx:alpine","name":"nginx-demo",|...

- 15. Tip #2 Use Quotes

  Quoting the value keeps it a string:

      apiVersion: v1
      kind: Pod
      metadata:
        name: mypod
        labels:
          app: nginx
      spec:
        containers:
        - name: nginx-demo
          image: nginx:alpine
          env:
          - name: INDIA
            value: IN
          - name: NORWAY
            value: "NO"
          ports:
          - containerPort: 80

  The same applies to values that look like numbers:

      apiVersion: v1
      kind: Pod
      metadata:
        name: mypod
        labels:
          app: nginx
      spec:
        containers:
        - name: nginx-demo
          image: nginx:alpine
          env:
          - name: VERSION
            value: "10.3"
          - name: NORWAY
            value: "NO"
          ports:
          - containerPort: 80
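The effect of quoting can be observed directly by loading quoted and unquoted variants and printing the resulting types. This sketch uses Ruby's Psych, which applies YAML 1.1 style scalar rules; the error on the previous slide suggests the parser behind `kubectl` resolves `NO` the same way, but that equivalence is an assumption here. The key names are made up for the demonstration.

```ruby
require 'yaml'

doc = <<~ENDYAML
  unquoted_no: NO
  quoted_no: "NO"
  unquoted_version: 10.3
  quoted_version: "10.3"
ENDYAML

values = YAML.safe_load(doc)

# Print each value together with the Ruby class it was loaded as.
values.each do |key, value|
  puts "#{key}: #{value.inspect} (#{value.class})"
end
```

With Psych, the unquoted `NO` loads as `false` (FalseClass) and the unquoted `10.3` as a Float, while both quoted forms stay Strings. Since environment variable values in a Pod spec must be strings, quoting is the safer habit.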

- 16. Tip #3 - Use YAML Reference

  With an anchor and an alias:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: nginx-deploy
      spec:
        replicas: 3
        selector:
          matchLabels: &labelsToMatch
            app: nginx
            env: dev
        template:
          metadata:
            labels: *labelsToMatch
          spec:
            containers:
            - name: nginx
              image: nginx:1.9.1
              ports:
              - containerPort: 80

  Equivalent expanded form:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: nginx-deploy
      spec:
        replicas: 3
        selector:
          matchLabels:
            app: nginx
            env: dev
        template:
          metadata:
            labels:
              app: nginx
              env: dev
          spec:
            containers:
            - name: nginx
              image: nginx:1.9.1
              ports:
              - containerPort: 80
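A quick way to confirm that the alias really yields matching labels is to load the anchored Deployment and compare the two label maps. The sketch assumes Ruby 2.6 or later with Psych; `selector_labels` and `template_labels` are names chosen for this example.

```ruby
require 'yaml'

deployment = <<~ENDYAML
  apiVersion: apps/v1
  kind: Deployment
  metadata:
    name: nginx-deploy
  spec:
    replicas: 3
    selector:
      matchLabels: &labelsToMatch
        app: nginx
        env: dev
    template:
      metadata:
        labels: *labelsToMatch
      spec:
        containers:
        - name: nginx
          image: nginx:1.9.1
          ports:
          - containerPort: 80
ENDYAML

# Aliases must be enabled explicitly when using safe_load.
spec = YAML.safe_load(deployment, aliases: true)['spec']

selector_labels = spec['selector']['matchLabels']
template_labels = spec['template']['metadata']['labels']

puts selector_labels.inspect
puts selector_labels == template_labels  # true
```

Because the labels are written once and referenced once, the selector and the Pod template cannot drift apart through a typo in one of them.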

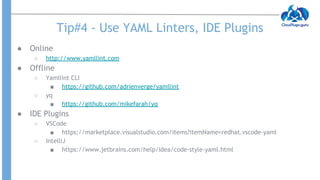

- 17. Tip #4 - Use YAML Linters, IDE Plugins

  - Online
    - http://www.yamllint.com
  - Offline
    - Yamllint CLI: https://github.com/adrienverge/yamllint
    - yq: https://github.com/mikefarah/yq
  - IDE Plugins
    - VSCode: https://marketplace.visualstudio.com/items?itemName=redhat.vscode-yaml
    - IntelliJ: https://www.jetbrains.com/help/idea/code-style-yaml.html
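When none of these tools is at hand, a bare syntax check can be scripted with a YAML parser. The sketch below is not a substitute for yamllint or an IDE plugin: it only reports whether the text parses, using Ruby's Psych, and `yaml_syntax_error` is a hypothetical helper written for this example.

```ruby
require 'yaml'

# Returns nil when the text parses, otherwise a short error description.
def yaml_syntax_error(text)
  YAML.parse(text)
  nil
rescue Psych::SyntaxError => e
  "line #{e.line}, column #{e.column}: #{e.problem}"
end

good = "spec:\n  containers: []\n"
bad  = "spec:\n\tcontainers: []\n" # a tab used for indentation

puts yaml_syntax_error(good).inspect # nil
puts yaml_syntax_error(bad)
```

The second input fails because it indents with a tab, which ties back to the earlier advice to indent with spaces only. A dedicated linter additionally checks style rules such as line length and consistent indentation, which this check does not attempt.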