Mongo db php_shaken_not_stirred_joomlafrappe


My presentation on MongoDB at the 2nd Joomlafrappe in Athens: a short introduction to NoSQL, MongoDB replica sets, sharding, and the PHP driver.


MongoDB | What can a value be?

• Keys are always strings (without . and $)

• A value can be a:

• String: {"name" : "Spyros Passas"}

• Number: {"age" : 30}

• Date: {"birthday" : Date("1982-12-12")}

• Array: {"interests" : ["Programming", "NoSQL"]}

• Document: {"address" : {"street" : "123 Pireus st.", "city" : "Athens", "zip_code" : 17121}}

Friday, April 12, 13
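The key rule and value types on the slide above can be illustrated with a minimal Python sketch. It uses plain dictionaries rather than a MongoDB driver, and the helpers `is_valid_key` and `invalid_keys` are hypothetical names introduced here. They apply only the slide's simplified rule (string keys without `.` or `$`), not the server's full field-name validation.

```python
from datetime import datetime


def is_valid_key(key):
    """Hypothetical helper: the slide's rule (a string without '.' or '$')."""
    return isinstance(key, str) and "." not in key and "$" not in key


def invalid_keys(document):
    """Collect keys that break the rule, descending into nested documents."""
    bad = []
    for key, value in document.items():
        if not is_valid_key(key):
            bad.append(key)
        if isinstance(value, dict):
            bad.extend(invalid_keys(value))
    return bad


# One document combining every value type listed on the slide.
person = {
    "name": "Spyros Passas",                 # String
    "age": 30,                               # Number
    "birthday": datetime(1982, 12, 12),      # Date
    "interests": ["Programming", "NoSQL"],   # Array
    "address": {                             # Document (nested)
        "street": "123 Pireus st.",
        "city": "Athens",
        "zip_code": 17121,
    },
}

print(invalid_keys(person))
print(invalid_keys({"user.name": "x", "profile": {"$set": 1}}))
```

The first call reports no offending keys. The second flags both the dotted key and the `$`-prefixed key inside the nested document.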

MongoDB | Example of a document

{
    "_id" : ObjectId("47cc67093475061e3d95369d"),
    "name" : "Spyros Passas",
    "birthday" : Date("1982-12-12"),
    "age" : 30,
    "interests" : ["Programming", "NoSQL"],
    "address" : {
        "street" : "123 Pireus st.",
        "city" : "Athens",
        "zip_code" : 17121
    }
}

"_id" : ObjectId("47cc67093475061e3d95369d")

ObjectId is a special type
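To make "special type" a little more concrete: an ObjectId is a 12-byte value, usually shown as 24 hexadecimal characters, and its first 4 bytes hold a big-endian Unix timestamp in seconds. The sketch below decodes that timestamp from the slide's example id using only the Python standard library. The function name `objectid_timestamp` is an assumption made for illustration, not part of any driver.

```python
from datetime import datetime, timezone


def objectid_timestamp(hex_id):
    """Hypothetical helper: read the creation time from an ObjectId hex string."""
    raw = bytes.fromhex(hex_id)
    if len(raw) != 12:
        raise ValueError("an ObjectId is 12 bytes (24 hex characters)")
    # The leading 4 bytes are seconds since the Unix epoch, big-endian.
    seconds = int.from_bytes(raw[:4], byteorder="big")
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


created = objectid_timestamp("47cc67093475061e3d95369d")
print(created.isoformat())
```

Because the timestamp comes first, ids generated later generally sort after earlier ones, which is one reason the default `_id` works reasonably well as an insertion-ordered key.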



Mongo db php_shaken_not_stirred_joomlafrappe

- 1. PHP & MongoDB Shaken, not stirred Spyros Passas / @spassas Friday, April 12, 13

- 2. NoSQL | Everybody is talking about it!

- 3. NoSQL | What
  - NoSQL ≠ "SQL is dead"
  - Not an opposite, but an alternative/complement to SQL (Not Only SQL)
  - Arose from the need to handle large volumes of data and high transaction rates
  - Generally based on a key/value store
  - Very happy with denormalized data, since there are no joins

- 4. NoSQL | Why
  - Flexible data model, so no prototyping is needed
  - Scaling out instead of scaling up
  - Performance is significantly higher
  - Cheaper licenses (or free)
  - Runs on commodity hardware
  - Caching layer already there
  - Data variety

- 5. NoSQL | Why not
  - Well, it's not all ACID...
  - Less mature than the relational systems (so the ecosystem of tools/add-ons is still small)
  - BI & reporting is limited
  - Coarse-grained security settings

- 6. NoSQL | Which
  - Key/Value stores
  - Document databases
  - And more...

- 7. MongoDB | Documents & Collections
  - A document is the equivalent of an SQL table row and is a set of key/value pairs
  - A collection is the equivalent of an SQL table and is a set of documents, not necessarily of the same type
  - A database is the equivalent of a... database

        { "name" : "Spyros Passas", "company" : "Neybox" }
        { "name" : "Spyros Passas", "company" : "Neybox" }
        { "Event" : "JoomlaFrappe", "location" : "Athens" }

- 8. MongoDB | What can a value be?
  - Keys are always strings (without . and $)
  - A value can be a:
    - String: {"name" : "Spyros Passas"}
    - Number: {"age" : 30}
    - Date: {"birthday" : Date("1982-12-12")}
    - Array: {"interests" : ["Programming", "NoSQL"]}
    - Document: {"address" : {"street" : "123 Pireus st.", "city" : "Athens", "zip_code" : 17121}}

- 9. MongoDB | Example of a document

      {
        "_id" : ObjectId("47cc67093475061e3d95369d"),
        "name" : "Spyros Passas",
        "birthday" : Date("1982-12-12"),
        "age" : 30,
        "interests" : ["Programming", "NoSQL"],
        "address" : {
          "street" : "123 Pireus st.",
          "city" : "Athens",
          "zip_code" : 17121
        }
      }

  "_id" : ObjectId("47cc67093475061e3d95369d") (ObjectId is a special type)

- 10. MongoDB | Indexes
  - Any field can be indexed
  - Indexes are ordered
  - Indexes can be unique
  - Compound indexes are possible (and in fact very useful)
  - Can be created or dropped at any time
  - Indexes can be large and add insertion overhead

- 11. MongoDB | Operators & Modifiers
  - Comparison: $lt (<), $lte (<=), $ne (!=), $gte (>=), $gt (>)
  - Logical: $and, $or, $not, $nor
  - Array (query): $all, $in, $nin
  - Geospatial: $geoWithin, $geoIntersects, $near, $nearSphere
  - Fields (update): $inc, $rename, $set, $unset
  - Array (update): $pop, $pull, $push, $addToSet

- 12. MongoDB | Data Relations
  - MongoDB has no joins (but you can fake them at the application level)
  - MongoDB supports nested data (and it's a pretty good idea actually!)
  - Collections are not necessary, but greatly help data organization and performance

- 13. MongoDB | Going from relational to NoSQL
  - Rethink your data and select a proper database
  - Rethink the relationships between your data
  - Rethink your query access patterns to create efficient indexes
  - Get to know your NoSQL database (and its limitations)
  - Move logic from the data layer to the application layer (but be careful)

- 14. MongoDB | Deployment (diagram labels: Mongo Server, Data Layer, Application Layer, App Server + mongos)

- 15. MongoDB | Deployment (diagram label: Mongo Server)

- 16. MongoDB | Replica Set (diagram: Primary (Master), Secondary (Slave))

- 17. MongoDB | Replica Set (diagram: Primary (Master) and three Secondary (Slave) nodes)

- 18. MongoDB | Replica Set when things go wrong (diagram: Primary (Master) and three Secondary (Slave) nodes)

- 19. MongoDB | Replica Set when things go wrong (diagram: Primary (Master) and two Secondary (Slave) nodes)

- 20. MongoDB | Replica Set when things go wrong (diagram: Primary (Master) and three Secondary (Slave) nodes)

- 21. MongoDB | Replica set tips
  - Physical machines should be in independent availability zones
  - Choosing to read from slaves significantly increases performance (but you have to be cautious)

- 22. MongoDB | Sharding (diagram: A...Z)

- 23. MongoDB | Sharding (diagram: A...J, K...P, Q...Z)

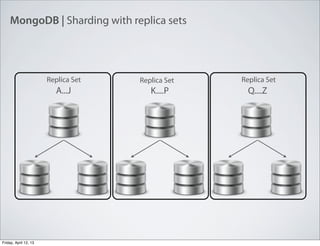

- 24. MongoDB | Sharding with replica sets (diagram: A...J, K...P, Q...Z; three Replica Set labels)

- 25. MongoDB | Sharding with replica sets (diagram: A...J, K...P, Q...Z; Config Servers; three Replica Set labels)

- 26. MongoDB | Things to consider when sharding
  - Picking the right sharding key is of paramount importance!
  - Rule of thumb: "the shard key must distribute reads and writes and keep the data you're using together"
  - Key must be of high cardinality
  - Key must not be monotonically ascending to infinity
  - Key must not be random
  - A good idea is a coarsely ascending field + a field you query a lot

- 27. MongoDB | PHP | The driver
  - Serializes objects to BSON
  - Uses exceptions to handle errors
  - Core classes
    - MongoClient: Creates and manages DB connections
    - MongoDB: Interacts with a database
    - MongoCollection: Represents and manages a collection
    - MongoCursor: Used to iterate through query results

- 28. MongoDB | PHP | MongoClient: creates a connection and sets read preferences; provides info about host status and health; lists, selects or drops databases

      <?php
      // Gets the client
      $mongo = new MongoClient("mongodb://localhost:27017");
      // Sets the read preferences (Primary only or primary & secondary)
      $mongo->setReadPreference(MongoClient::RP_SECONDARY);
      // If in replica set, returns hosts status
      $hosts_array = $mongo->getHosts();
      // Returns an array with the database names
      $db_array = $mongo->listDBs();
      // Returns a MongoDB object
      $database = $mongo->selectDB("myblog");
      ?>

- 29. MongoDB | PHP | MongoDB: handles collections

      <?php
      // Create a collection
      $database->createCollection("blogposts");
      // Select a collection
      $blogCollection = $database->selectCollection("blogposts");
      // Drop a collection
      $database->dropCollection("blogposts");
      ?>

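- 30. MongoDB | PHP | MongoCollection | Insert

      <?php
      // Fire and forget insertion
      // (with MongoClient, driver 1.3+, writes are acknowledged by default;
      // pass array("w" => 0) as the second argument to skip the acknowledgement)
      $properties = array("author" => "spassas", "title" => "Hello World");
      $collection->insert($properties);
      ?>

      <?php
      // Safe insertion
      // ("safe" is deprecated in driver 1.3+ in favor of array("w" => 1))
      $properties = array("author" => "spassas", "title" => "Hello World");
      $collection->insert($properties, array("safe" => true));
      ?>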

- 31. MongoDB | PHP | MongoCollection | Update

      <?php
      // Update
      $c->insert(array("firstname" => "Spyros", "lastname" => "Passas"));
      $newdata = array('$set' => array("address" => "123 Pireos st"));
      $c->update(array("firstname" => "Spyros"), $newdata);
      // Upsert
      $c->update(
          array("uri" => "/summer_pics"),
          array('$inc' => array("page_hits" => 1)),
          array("upsert" => true)
      );
      ?>

- 32. MongoDB | PHP | MongoCollection | Delete

      <?php
      // Delete parameters
      $keyValue = array("name" => "Spyros");
      // Safe remove ("safe" is deprecated in driver 1.3+ in favor of array("w" => 1))
      $collection->remove($keyValue, array('safe' => true));
      // Fire and forget remove
      $collection->remove($keyValue);
      ?>

- 33. MongoDB | PHP | MongoCollection | Query

      <?php
      // Get the collection
      $posts = $mongo->selectDB("blog")->selectCollection("posts");
      // Find one
      $post = $posts->findOne(array('author' => 'john'), array('title'));
      // Find many
      $allPosts = $posts->find(array('author' => 'john'));
      // Find using operators
      $commentedPosts = $posts->find(array('comment_count' => array('$gt' => 1)));
      // Find in arrays
      $tags = array('technology', 'nosql');
      // Find any
      $postsWithAnyTag = $posts->find(array('tags' => array('$in' => $tags)));
      // Find all
      $postsWithAllTags = $posts->find(array('tags' => array('$all' => $tags)));
      ?>

- 34. MongoDB | PHP | MongoCursor

      <?php
      // Iterate through results
      $results = $collection->find();
      foreach ($results as $result) {
          // Do something here
      }
      // Below, $posts is a MongoCursor (the result of a find() call)
      // Sort
      $posts = $posts->sort(array('created_at' => -1));
      // Skip a number of results
      $posts = $posts->skip(5);
      // Limit the number of results
      $posts = $posts->limit(10);
      // Chaining
      $posts->sort(array('created_at' => -1))->skip(5)->limit(10);
      ?>

- 35. MongoDB | PHP | Query monitoring & Optimization: explain() gives data about index performance for a specific query

      {
        "n" : <num>,                 /* number of documents that match the query */
        "nscannedObjects" : <num>,   /* total number of documents scanned during the query */
        "nscanned" : <num>,          /* total number of documents and index entries scanned */
        "millis" : <num>,            /* time to complete the query in milliseconds */
        "millisShardTotal" : <num>,  /* total time to complete the query on shards */
        "millisShardAvg" : <num>     /* average time to complete the query on each shard */
      }

- 36. Thank you!

      {
        "status" : "over and out",
        "mood" : ":)",
        "coming_up" : "Q & A",
        "contact_details" : {
          "email" : "[email protected]",
          "twitter" : "@spassas"
        }
      }
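The sharding slides (23 and 26) split data into ranges such as A...J, K...P and Q...Z and warn against keys that only ever ascend. The following is a minimal pure-PHP sketch of that idea, not MongoDB's actual balancer: the shard names, the `routeToShard()` and `distribution()` helpers and the sample keys are all hypothetical and exist only for illustration.

```php
<?php
// Hypothetical range table mirroring the A...J / K...P / Q...Z split.
// Shard names are made up; this is not a MongoDB API.
$ranges = array(
    'shard1' => array('A', 'J'),
    'shard2' => array('K', 'P'),
    'shard3' => array('Q', 'Z'),
);

// Return the shard whose range contains the first letter of the key,
// or null when the key falls outside every configured range.
function routeToShard(array $ranges, $shardKey)
{
    $first = strtoupper(substr($shardKey, 0, 1));
    foreach ($ranges as $shard => $bounds) {
        if (strcmp($first, $bounds[0]) >= 0 && strcmp($first, $bounds[1]) <= 0) {
            return $shard;
        }
    }
    return null;
}

// Count how many keys land on each shard.
function distribution(array $ranges, array $keys)
{
    $counts = array_fill_keys(array_keys($ranges), 0);
    foreach ($keys as $key) {
        $shard = routeToShard($ranges, $key);
        if ($shard !== null) {
            $counts[$shard]++;
        }
    }
    return $counts;
}

// Keys spread across the alphabet touch every shard.
$spread = distribution($ranges, array('athens', 'kostas', 'spyros', 'maria', 'zoe', 'eleni'));

// Keys sharing one prefix (a stand-in for a monotonically ascending key)
// all pile onto a single shard.
$skewed = distribution($ranges, array('z0001', 'z0002', 'z0003', 'z0004'));

print_r($spread);
print_r($skewed);
```

In this toy setup the spread keys land two per shard, while the ascending-style keys all hit `shard3`, which is the hot-spot problem the rule of thumb is meant to avoid.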
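To make the difference between `$gt`, `$in` and `$all` from slides 11 and 33 concrete, here is a small in-memory matcher written in plain PHP. It is only a sketch of the operator semantics under simplifying assumptions (strict equality for plain values, three operators only); the `matches()` and `findDocs()` helpers and the sample posts are hypothetical, and the server evaluates queries quite differently.

```php
<?php
// Illustrative matcher: supports plain equality plus $gt, $in and $all.
// It does NOT reproduce every rule the MongoDB server applies.
function matches(array $doc, array $query)
{
    foreach ($query as $field => $condition) {
        $value = array_key_exists($field, $doc) ? $doc[$field] : null;
        if (!is_array($condition)) {
            // Plain value: simplified to strict equality.
            if ($value !== $condition) {
                return false;
            }
            continue;
        }
        foreach ($condition as $op => $operand) {
            switch ($op) {
                case '$gt':
                    if (!($value > $operand)) {
                        return false;
                    }
                    break;
                case '$in':
                    // Matches when ANY of the field's values is in the operand list.
                    $values = is_array($value) ? $value : array($value);
                    if (count(array_intersect($values, $operand)) === 0) {
                        return false;
                    }
                    break;
                case '$all':
                    // Matches when the field contains EVERY value in the operand list.
                    if (!is_array($value) || count(array_diff($operand, $value)) > 0) {
                        return false;
                    }
                    break;
                default:
                    throw new InvalidArgumentException("Unsupported operator: $op");
            }
        }
    }
    return true;
}

// Return the documents that satisfy the query, re-indexed from 0.
function findDocs(array $docs, array $query)
{
    return array_values(array_filter($docs, function ($doc) use ($query) {
        return matches($doc, $query);
    }));
}

$posts = array(
    array('title' => 'Hello World', 'author' => 'john', 'comment_count' => 3, 'tags' => array('technology', 'nosql')),
    array('title' => 'PHP tips', 'author' => 'john', 'comment_count' => 0, 'tags' => array('technology')),
    array('title' => 'Coffee', 'author' => 'maria', 'comment_count' => 5, 'tags' => array('frappe')),
);
$tags = array('technology', 'nosql');

$commented = findDocs($posts, array('comment_count' => array('$gt' => 1)));
$anyTag = findDocs($posts, array('tags' => array('$in' => $tags)));
$allTags = findDocs($posts, array('tags' => array('$all' => $tags)));

echo implode(', ', array_column($commented, 'title')), "\n";
echo implode(', ', array_column($anyTag, 'title')), "\n";
echo implode(', ', array_column($allTags, 'title')), "\n";
```

The point of the comparison is that `$in` is satisfied by a post carrying either tag, whereas `$all` requires both.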
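The upsert on slide 31 combines `$inc` with `"upsert" => true`, which may be easier to follow when simulated on a plain PHP array. This sketch assumes a collection keyed by `uri`; `upsertInc()` is a hypothetical helper, not part of any MongoDB driver, and it models only the "increment if found, otherwise insert" behaviour.

```php
<?php
// Illustrative in-memory stand-in for a collection of page-hit documents.
// upsertInc() is a made-up helper, not a driver method.
function upsertInc(array &$collection, $uri, $field, $amount)
{
    foreach ($collection as &$doc) {
        if ($doc['uri'] === $uri) {
            // Match found: $inc adds to the field, starting from 0 if it is absent.
            $doc[$field] = (isset($doc[$field]) ? $doc[$field] : 0) + $amount;
            return 'updated';
        }
    }
    unset($doc);
    // No match: the upsert inserts the query field plus the incremented field.
    $collection[] = array('uri' => $uri, $field => $amount);
    return 'inserted';
}

$pages = array();
$first = upsertInc($pages, '/summer_pics', 'page_hits', 1);   // no document yet
$second = upsertInc($pages, '/summer_pics', 'page_hits', 1);  // document exists now

echo $first, ' then ', $second, "\n";
echo $pages[0]['page_hits'], "\n";
```

A single call therefore covers both the first visit and every later one, which is why the slide's page-hit counter needs no separate existence check.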