
Presentation recovery manager (rman) configuration and performance tuning best practices

- 1. Recovery Manager (RMAN) Configuration and Performance Tuning Best Practices. Timothy Chien, Principal Product Manager, Oracle America; Greg Green, Senior Database Administrator, Starbucks Coffee Company

- 2. 2 Oracle OpenWorld Latin America 2010 December 7–9, 2010

- 3. 3 Oracle OpenWorld Beijing 2010 December 13–16, 2010

- 4. 4 Oracle Products Available Online Oracle Store Buy Oracle license and support online today at oracle.com/store

- 5. 5 Agenda • Recovery Manager Overview • Configuration Best Practices – Backup Strategies Comparison – Fast Recovery Area (FRA) • Performance Tuning Methodology – Backup Data Flow – Tuning Principles – Diagnosing Performance Bottlenecks • Starbucks Case Study • Summary/Q&A

- 6. 6 Oracle Recovery Manager (RMAN): Oracle-integrated Backup & Recovery Engine • Intrinsic knowledge of database file formats and recovery procedures • Block validation • Online block-level recovery • Tablespace/data file recovery • Online, multi-streamed backup • Unused block compression • Native encryption • Integrated disk, tape & cloud backup leveraging the Fast Recovery Area (FRA) and Oracle Secure Backup* • *RMAN also supports leading 3rd party media managers [Diagram: Oracle Enterprise Manager, RMAN, Database, Fast Recovery Area, Tape Drive, Oracle Secure Backup, Cloud]

- 7. 7 Most Critical Question To Ask First.. • What are my recovery requirements? – Assess tolerance for data loss - Recovery Point Objective (RPO) • How frequently should backups be taken? • Is point-in-time recovery required? – Assess tolerance for downtime - Recovery Time Objective (RTO) • Downtime: Problem identification + recovery planning + systems recovery • Tiered RTO per level of granularity, e.g. database, tablespace, table, row – Determine backup retention policy • Onsite, offsite, long-term • Then..how does my RMAN backup strategy fulfill those requirements?

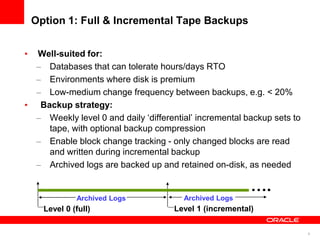

- 8. 8 Option 1: Full & Incremental Tape Backups • Well-suited for: – Databases that can tolerate hours/days RTO – Environments where disk is premium – Low-medium change frequency between backups, e.g. < 20% • Backup strategy: – Weekly level 0 and daily ‘differential’ incremental backup sets to tape, with optional backup compression – Enable block change tracking - only changed blocks are read and written during incremental backup – Archived logs are backed up and retained on-disk, as needed [Diagram: level 0 (full) backup followed by level 1 (incremental) backups, with archived logs in between]

- 9. 9 Script Example • Configure SBT (i.e. tape) channels: – CONFIGURE CHANNEL DEVICE TYPE SBT PARMS '<channel parameters>'; • Weekly full backup: – BACKUP AS BACKUPSET INCREMENTAL LEVEL 0 DATABASE PLUS ARCHIVELOG; • Daily incremental backup: – BACKUP AS BACKUPSET INCREMENTAL LEVEL 1 DATABASE PLUS ARCHIVELOG;

- 10. 10 Option 2: Incrementally Updated Disk Backups • Well-suited for: – Databases that can tolerate no more than a few hours RTO – Environments where disk can be allocated for 1X size of database or most critical tablespaces • Backup strategy: – Initial image copy to FRA, followed by daily incremental backups – Roll forward copy with incremental, to produce new on-disk copy – Full backup archived to tape, as needed – Archived logs are backed up and retained on-disk, as needed – Fast recovery from disk or SWITCH to use image copies [Diagram: level 0 (full) + archive to tape, then level 1, then roll forward image copy + level 1, with archived logs in between]

- 11. 11 Script Example • Configure SBT channels, if needed: – [CONFIGURE CHANNEL DEVICE TYPE SBT PARMS '<channel parameters>';] • Daily roll forward copy and incremental backup: – RECOVER COPY OF DATABASE WITH TAG 'OSS'; – BACKUP DEVICE TYPE DISK INCREMENTAL LEVEL 1 FOR RECOVER OF COPY WITH TAG 'OSS' DATABASE; – [BACKUP DEVICE TYPE SBT ARCHIVELOG ALL;] • What happens? – First run: Image copy – Second run: Incremental backup – Third run+: Roll forward copy & create new incremental backup • Backup FRA to tape, if needed: – [BACKUP RECOVERY AREA;]
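The "What happens?" sequence on the slide above can be traced with a small state simulation. This is a minimal Python sketch, not RMAN itself: `run_daily_script` and the two state flags are hypothetical names, and the sketch assumes that RECOVER COPY does nothing until both an image copy and an unapplied incremental exist, and that the FOR RECOVER OF COPY backup creates the image copy when none exists yet.

```python
def run_daily_script(state):
    """Simulate one run of the two-command daily script.

    state is a dict with two hypothetical flags:
      copy_exists          - an image copy with the tag is on disk
      pending_incremental  - a level 1 backup exists that is not yet applied
    Returns the list of actions this run would perform.
    """
    actions = []

    # RECOVER COPY OF DATABASE WITH TAG ...
    if state["copy_exists"] and state["pending_incremental"]:
        actions.append("roll forward copy")
        state["pending_incremental"] = False

    # BACKUP ... INCREMENTAL LEVEL 1 FOR RECOVER OF COPY WITH TAG ... DATABASE
    if not state["copy_exists"]:
        actions.append("create image copy")
        state["copy_exists"] = True
    else:
        actions.append("create incremental backup")
        state["pending_incremental"] = True

    return actions


state = {"copy_exists": False, "pending_incremental": False}
history = [run_daily_script(state) for _ in range(3)]
for run_number, actions in enumerate(history, start=1):
    print(run_number, actions)
```

From the third run onward every run performs the same two actions, which is why the on-disk copy always trails the database by one incremental.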

- 12. 12 Fast Recovery with RMAN SWITCH Demo

- 13. 13 Option 3: Offload Backups to Physical Standby Database in Data Guard Environment • Well-suited for: – Databases that require no more than several minutes of recovery time, in event of any failure – Environments that can preferably allocate symmetric hardware and storage for physical standby database – Environments whose tape infrastructure can be shared between primary and standby database sites • Backup strategy: – Full and incremental backups offloaded to physical standby database – Fast incremental backup on standby with Active Data Guard – Backups can be restored to primary or standby database • Backups can be taken at each database for optimal local protection

- 14. 14 Backup Strategies Comparison

| Strategy | Backup Factors | Recovery Factors |
| --- | --- | --- |
| Option 1: Full & Incremental Tape Backups | Fast incrementals; save space with backup compression; cost-effective tape storage | Full backup restored first, then incrementals & archived logs; tape backups read sequentially |
| Option 2: Incrementally Updated Disk Backups | Incremental + roll forward to create up-to-date copy; requires 1X production storage for copy; optional tape storage | Backups read via random access; restore-free recovery with SWITCH command |
| Option 3: Offload Backups to Physical Standby Database | Above benefits + primary database free to handle more workloads; requires 1X production hardware and storage for standby database | Fast failover to standby database in event of any failure; backups are last resort, in event of double site failure |

- 15. 15 Fast Recovery Area (FRA) Sizing • If you want to keep: – Control file backups and archived logs • Estimate total size of all archived logs generated between successive backups on the busiest days x 2 (in case of unexpected redo spikes) – Flashback logs • Add in {Redo rate x Flashback retention target time x 2} – Incremental backups • Add in their estimated sizes – On-disk image copy • Add in size of the database minus size of temporary files – Further details: • http://download.oracle.com/docs/cd/E11882_01/backup.112/e10642/rcmconfb.htm#i1019211
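The sizing rules above are simple addition, so they can be written down as a worked calculation. The following Python sketch is an illustration only: the function name, parameter names, and the sample figures are hypothetical, and control file backups are not sized separately because the slide gives no formula for them.

```python
def estimate_fra_size_gb(archived_logs_between_backups_gb,
                         redo_rate_gb_per_hour,
                         flashback_retention_hours,
                         incremental_backups_gb,
                         database_size_gb,
                         tempfile_size_gb):
    """Add up the FRA components listed on the sizing slide (all in GB)."""
    # Archived logs between successive backups on the busiest days, doubled
    archived_logs = archived_logs_between_backups_gb * 2
    # Redo rate x flashback retention target x 2
    flashback_logs = redo_rate_gb_per_hour * flashback_retention_hours * 2
    # On-disk image copy: database size minus temporary files
    image_copy = database_size_gb - tempfile_size_gb
    return archived_logs + flashback_logs + incremental_backups_gb + image_copy


# Hypothetical figures, chosen only to show the arithmetic
total_gb = estimate_fra_size_gb(
    archived_logs_between_backups_gb=50,
    redo_rate_gb_per_hour=2,
    flashback_retention_hours=24,
    incremental_backups_gb=300,
    database_size_gb=10000,
    tempfile_size_gb=200,
)
print(total_gb)  # 100 + 96 + 300 + 9800
```

Pass zero for any component that is not kept in the FRA, for example when flashback logging is disabled.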

- 16. 16 FRA File Retention and Deletion • When FRA space needs exceed quota, automatic file deletion occurs in the following order: 1. Flashback logs • Oldest Flashback time can be affected (with exception of guaranteed restore points) 2. RMAN backup pieces/copies and archived redo logs that are: • Not needed to maintain RMAN retention policy, or • Have been backed up to tape (via DEVICE TYPE SBT) or secondary disk location (via BACKUP RECOVERY AREA TO DESTINATION ‘..’) • If archived log deletion policy is configured as: – APPLIED ON [ALL] STANDBY • Archived log must have been applied to mandatory or all standby databases – SHIPPED TO [ALL] STANDBY • Archived log must have been transferred to mandatory or all standby databases – BACKED UP <N> TIMES TO DEVICE TYPE [DISK | SBT] • Archived log must have been backed up at least <N> times – If [APPLIED or SHIPPED] and BACKED UP policies are configured, both conditions must be satisfied for an archived log to be considered for deletion.
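The last rule on this slide (both configured conditions must hold) is easy to misread, so here is a small Python sketch of it. The function and argument names are hypothetical, `None` stands for "this policy clause is not configured", and the sketch only covers the archived log deletion policy, not the retention-policy or backed-up-to-tape rules earlier on the slide.

```python
def eligible_under_deletion_policy(standby_condition_met=None,
                                   backups_taken=0,
                                   backups_required=None):
    """Return True if an archived log satisfies every configured clause.

    standby_condition_met: True/False for APPLIED ON or SHIPPED TO STANDBY,
                           or None if that clause is not configured.
    backups_required:      N from BACKED UP N TIMES, or None if not configured.
    """
    checks = []
    if standby_condition_met is not None:
        checks.append(standby_condition_met)
    if backups_required is not None:
        checks.append(backups_taken >= backups_required)
    # With no clause configured, this sketch makes no decision and returns
    # False; the default FRA rules would apply instead.
    return bool(checks) and all(checks)


print(eligible_under_deletion_policy(True, backups_taken=1, backups_required=2))
print(eligible_under_deletion_policy(True, backups_taken=2, backups_required=2))
```

Eligibility does not mean immediate deletion: as the slide states, files are removed only when FRA space needs exceed the quota.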

- 18. 18 Performance Tuning Overview • RMAN Backup Data Flow • Performance Tuning Principles • Diagnosing Performance Bottlenecks

- 19. 19 RMAN Backup Data Flow A. Prepare backup tasks & read blocks into input buffers B. Validate blocks & copy them to output buffers – Compress and/or encrypt data if requested C. Write output buffers to storage media (DISK or SBT) – Media manager handles writing of output buffers to SBT • Restore is the inverse of this data flow [Diagram: input buffers, output I/O buffers, write to storage media]

- 20. 20 Tuning Principles 1. Determine the maximum input disk, output media, and network throughput – E.g. Oracle ORION – downloadable from OTN, DD command – Evaluate network throughput at all touch points, e.g. database server->media management environment->tape system 2. Configure disk subsystem for optimal performance – Use ASM • Configure external redundancy & leverage hardware RAID • If disks will be shared for DATA and FRA disk groups: – Provision the outer sectors to DATA for higher performance – Provision inner sectors to FRA, which has lower performance, but suitable for sequential write activity (e.g. backups) • Otherwise, separate DATA and FRA disks – If not using ASM, stripe data files across all disks with 1 MB stripe size.

- 21. 21 Tuning Principles 3. Tune RMAN to fully utilize disk subsystem and tape – Use asynchronous I/O • For disk backup: – If the system does not support native asynchronous I/O, set DBWR_IO_SLAVES. • Four slave processes allocated per session • For tape backup: – Set BACKUP_TAPE_IO_SLAVES, unless media manager states otherwise. • One slave process allocated per channel process

- 22. 22 Tuning Principles 3. Tune RMAN to fully utilize disk subsystem and tape – For backups to disk, allocate as many channels as can be handled by the system. • For image copies, one channel processes one data file at a time. – For backups to tape, allocate one channel per tape drive. • “But allocating more channels than tape drives increases backup performance, so that’s a good thing, right?” – No: restore time can be degraded due to tape-side multiplexing • Instead, compare BACKUP VALIDATE duration (i.e. the read phase) with channels = tape drives against channels > tape drives: – If Time {channels = tape drives} ~= Time {channels > tape drives}, extra channels do not speed up the read phase • Bottleneck is most likely in media manager. • Discussed later in ‘Diagnosing Performance Bottlenecks’ – If Time {channels = tape drives} >> Time {channels > tape drives}, the read phase benefits from extra channels • Tune read phase (discussed next)

- 23. 23 Read Phase - RMAN Multiplexing • Multiplexing level: maximum number of files read by one channel, at any time, during backup – Min(MAXOPENFILES, FILESPERSET) – MAXOPENFILES default = 8 – FILESPERSET default = 64 • Larger vs smaller backup set trade-offs – Restore performance • All data files vs. single data file – Backup restartability • MAXOPENFILES determines number and size of input buffers – Number and size of input buffers in V$BACKUP_ASYNC_IO/V$BACKUP_SYNC_IO – All buffers allocated from PGA, unless disk or tape I/O slaves are enabled (SGA by default or LARGE_POOL, if set)

- 24. 24 Read Phase - RMAN Input Buffers • MAXOPENFILES ≤ 4 – Each buffer = 1MB, total buffer size for channel is up to 16MB • MAXOPENFILES=1 => 16 buffers/file, 1 MB/buffer = 16 MB/file – Optimal for ASM or striped system • 4 < MAXOPENFILES ≤ 8 – Each buffer = 512KB, total buffer size for channel is up to 16MB. Number of buffers per file will depend on number of files. • MAXOPENFILES=8 => 4 buffers/file, 512 KB/buffer = 2 MB/file – Optimal for non-striped system – Reduce the number of input buffers/file to more effectively spread out I/O usage (since each file resides on one disk) • MAXOPENFILES > 8 – Each buffer = 128KB, 4 buffers per file, so each file will have 512KB buffer
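The multiplexing and input buffer rules from the two slides above can be collected into a short calculation. This Python sketch follows the slide's figures for MAXOPENFILES of 1, 8, and above 8; for the in-between values it assumes the 16 MB channel total is divided evenly with floor division, which the slides do not spell out. The function names are hypothetical.

```python
KB = 1024
MB = 1024 * KB


def multiplexing_level(maxopenfiles=8, filesperset=64):
    """Maximum number of files read by one channel at any time."""
    return min(maxopenfiles, filesperset)


def input_buffers_per_file(maxopenfiles):
    """Return (buffers_per_file, buffer_size_bytes) for one channel."""
    if maxopenfiles < 1:
        raise ValueError("maxopenfiles must be at least 1")
    if maxopenfiles <= 4:
        # 1 MB buffers, up to 16 MB per channel in total
        return 16 // maxopenfiles, 1 * MB
    if maxopenfiles <= 8:
        # 512 KB buffers, up to 16 MB per channel (32 buffers) in total
        return 32 // maxopenfiles, 512 * KB
    # More than 8 open files: 4 buffers of 128 KB per file
    return 4, 128 * KB


for mof in (1, 8, 16):
    count, size = input_buffers_per_file(mof)
    print(mof, count, size, count * size)  # total bytes buffered per file
```

The values actually in use can be compared with the buffer count and size reported in V$BACKUP_ASYNC_IO or V$BACKUP_SYNC_IO.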

- 25. 25 Tuning Principles 4. If BACKUP VALIDATE still does not utilize available disk I/O & there is available CPU and memory: – Increase RMAN buffer memory usage • With Oracle Database 11g Release 11.1.0.7 or lower versions - • Set _BACKUP_KSFQ_BUFCNT (default 16) = # of input disks – Number of input buffers per file allocated – Achieve balance between memory usage and I/O • E.g. Setting to 500 for 500 input disks may exceed tolerable memory consumption • Set _BACKUP_KSFQ_BUFSZ (default 1048576) = stripe size (in bytes) • With Oracle Database 11g Release 2 - • Set _BACKUP_FILE_BUFCNT,_BACKUP_FILE_BUFSZ • Restore performance can increase with setting these parameters, as output buffers used during restore will also increase correspondingly • Refer to Support Note 1072545.1 for more details • Note: With Oracle Database 11g Release 2 & ASM, all buffers are automatically sized for optimal performance
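The warning about memory consumption can be made concrete with a rough estimate. This Python sketch assumes input buffer memory scales as buffers per file x buffer size x files open per channel x channels; that product is an approximation for illustration, the function name is hypothetical, and the channel and file counts in the example are made up.

```python
def input_buffer_memory_bytes(bufcnt, bufsz, open_files_per_channel, channels):
    """Rough input buffer memory for a backup, in bytes."""
    return bufcnt * bufsz * open_files_per_channel * channels


# Defaults from the slide: 16 buffers of 1048576 bytes, one file, one channel
default_bytes = input_buffer_memory_bytes(16, 1048576, 1, 1)

# One buffer per input disk for 500 disks, with 4 channels reading one file each
large_bytes = input_buffer_memory_bytes(500, 1048576, 1, 4)

print(default_bytes / 2**20, "MiB")
print(large_bytes / 2**30, "GiB")
```

Raising the multiplexing level multiplies the estimate again, which is one reason to balance buffer count against available memory rather than matching the disk count exactly.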

- 26. 26 Backup Data Flow A. Prepare backup tasks & read blocks into input buffers B. Validate blocks & copy them to output buffers – Compress and/or encrypt data if requested C. Write output buffers to storage media (DISK or SBT) – Media manager handles writing of output buffers to SBT [Diagram: output I/O buffers, write to storage media]

- 27. 27 Tuning Principles 5. RMAN backup compression & encryption guidelines – Both operations depend heavily on CPU resources – Increase CPU resources or use LOW/MEDIUM setting – Verify that uncompressed backup performance scales properly, as channels are added – Note - if data is encrypted with: • TDE column encryption – For encrypted backup, data is double encrypted (i.e. encrypted columns treated as if they were not encrypted) • TDE tablespace encryption – For compressed & encrypted backup, encrypted tablespaces are decrypted, compressed, then re-encrypted – If only encrypted backup, encrypted blocks pass through backup unchanged

- 28. 28 Tuning Principles 6. Tune RMAN output buffer size – Output buffers => blocks written to DISK as copies or backup pieces or to SBT as backup pieces – Four buffers allocated per channel – Default buffer sizes • DISK: 1 MB • SBT: 256 KB – Adjust with BLKSIZE channel parameter – Set BLKSIZE >= media management client buffer size – No changes needed for Oracle Secure Backup • Output buffer count & size for disk backup can be manually adjusted – Details in Support Note 1072545.1 – Note: With Oracle Database 11g Release 2 & ASM, all buffers are automatically sized for optimal performance

- 29. 29 Performance Tuning Overview • RMAN Backup Data Flow • Performance Tuning Principles • Diagnosing Performance Bottlenecks

- 30. 30 Diagnosing Performance Bottlenecks – Pt. 1 • Query EFFECTIVE_BYTES_PER_SECOND column (EBPS) for ‘AGGREGATE’ row in V$BACKUP_ASYNC_IO or V$BACKUP_SYNC_IO – If EBPS < storage media throughput, run BACKUP VALIDATE • Case 1: BACKUP VALIDATE time ~= actual backup time, then read phase is the likely bottleneck. – Refer to RMAN multiplexing and buffer usage guidelines – Investigate ‘slow’ performing files • Find data file with highest (LONG_WAITS/IO_COUNT) ratio • If ASM, add disk spindles and/or re-balance disks • Move file to new disk or multiplex with another ‘slow’ file

- 31. 31 Diagnosing Performance Bottlenecks – Pt. 2 • Case 2: BACKUP VALIDATE time << actual backup time, then buffer copy or write to storage media phase is the likely bottleneck. – Refer to backup compression and encryption guidelines – If tape backup, check media management (MML) settings: • TCP/IP buffer size • Media management client/server buffer size • Client/socket timeout • Media server hardware, connectivity to tape • Enable tape compression (but not RMAN compression)
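The two diagnosis slides describe a decision procedure, sketched below in Python. The function names are hypothetical, and the 0.9 ratio used to decide that two durations are "about equal" is an assumption; the slides give no numeric threshold. The rows passed to `slowest_file` are assumed to have been fetched from V$BACKUP_ASYNC_IO beforehand, and the sample file names are invented.

```python
def diagnose_backup(ebps, media_throughput, validate_secs, backup_secs,
                    similar_ratio=0.9):
    """Classify the likely bottleneck following the Pt. 1 / Pt. 2 cases."""
    if ebps >= media_throughput:
        # Backup already keeps up with the storage media
        return "media rate reached"
    if validate_secs >= similar_ratio * backup_secs:
        # Case 1: BACKUP VALIDATE takes about as long as the real backup
        return "read phase"
    # Case 2: BACKUP VALIDATE is much faster than the real backup
    return "buffer copy or write phase"


def slowest_file(rows):
    """rows: iterable of (filename, long_waits, io_count) tuples.

    Returns the file name with the highest LONG_WAITS / IO_COUNT ratio.
    """
    candidates = [row for row in rows if row[2] > 0]
    return max(candidates, key=lambda row: row[1] / row[2])[0]


print(diagnose_backup(180, 280, validate_secs=3500, backup_secs=3600))
print(diagnose_backup(180, 280, validate_secs=1200, backup_secs=3600))
print(slowest_file([("users01.dbf", 10, 1000), ("sales01.dbf", 300, 1000)]))
```

A "read phase" result points back to the multiplexing and buffer guidelines; a "buffer copy or write phase" result points to the compression, encryption, and media management checks.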

- 32. 32 Restore & Recovery Performance Best Practices • Minimize archive log application by using incremental backups • Use block media recovery for isolated block corruptions • Keep adequate number of archived logs on disk • Increase RMAN buffer memory usage • Tune database for I/O, DBWR performance, CPU utilization • Refer to MAA Media Recovery Best Practices paper – Active Data Guard 11g Best Practices (includes best practices for Redo Apply)

- 34. 34 Greg Green Senior Database Administrator September 22, 2010 Starbucks Enterprise Data Warehouse (EDW) Backup and Recovery Tuning


- 36. 36 Starbucks Enterprise Data Warehouse (EDW) Backup and Recovery Tuning • Starbucks Background and EDW Architecture • EDW Backup and Recovery Strategy • Issues/Challenges with Tape Backups • Course of Action to Resolve Tape Backup Performance Issue

- 37. 37 Global Brand Grows from a Single Store

- 38. 38 The Starbucks of Today Licensed Stores: Grocery stores, Borders Book stores, airports, convention centers Foodservice: “We Proudly Brew,” Serving coffee through hotels, colleges, hospitals, airlines Company-operated stores in the U.S. and International

- 39. 39 EDW - Who it Supports • Production EDW supports Starbucks internal business users • 10 TB VLDB warehouse, growing 1-2 TB per year • Provides reports to the store level – sales, staffing, etc. • Thousands of stores directly access the EDW • Web-based dashboard reports via company intranet • Monday Morning Mayhem • Front-end reporting with Microstrategy • Leveraging Ascential DataStage ETL Tool • Toad, SQL Developer, and other ad-hoc tools used by developers and QA • And Much, Much, More…..

- 40. 40 Production Hardware • Servers – 4 CPU HP ia64 1.5 GHz CPU 16 GB RAM • Network – Infiniband Private Interconnect • Public Network – Gigabit Ethernet • Storage – SAN, ASM • 12 TB RAID 1+0 (DATA DG), 146 GB Drives • 14 TB RAID 5 (FRA DG), 300 GB Drives • Oracle Database 11.1.0.7 EE • Media Manager – NetBackup 6.5.5 • RMAN Backup & Recovery 5 Node RAC Database

- 41. 41 Starbucks Enterprise Data Warehouse (EDW) Backup and Recovery Tuning • Starbucks Background and EDW Architecture • EDW Backup and Recovery Strategy • Issues/Challenges with Tape Backups • Course of Action to Resolve Tape Backup Performance Issue

- 42. 42 Backup Strategy • RPO – Anytime within the last 24 hours, Backup window of 24 hours • RMAN Incrementally Updated Backup Strategy • Disk - Flash Recovery Area (FRA) • Daily Incremental update of image copy with ‘SYSDATE – 1’ • Daily Level 1 Differential Incremental Backups • Daily Script: { RECOVER COPY OF DATABASE WITH TAG 'WEEKLY_FULL_BKUP' UNTIL TIME 'SYSDATE - 1'; BACKUP INCREMENTAL LEVEL 1 FOR RECOVER OF COPY WITH TAG WEEKLY_FULL_BKUP DATABASE; BACKUP AS BACKUPSET ARCHIVELOG ALL NOT BACKED UP DELETE ALL INPUT; DELETE NOPROMPT OBSOLETE RECOVERY WINDOW OF 1 DAYS DEVICE TYPE DISK; } • Tape • Weekly: BACKUP RECOVERY AREA • Each day, for rest of the week: BACKUP BACKUPSET ALL

- 43. 43 Backup Performance to FRA • Daily Incremental Update + Incremental Backup • 1 hr 45 minutes -> 2 hrs 30 minutes depending upon workload • 60-75 minutes for RECOVER COPY OF DATABASE .. • 30-45 minutes for incremental backup set creation + time to purge old backup pieces • The backup set is typically 250-350 GB but can vary depending on the workload • 4 RMAN channels to disk running on single RAC node

- 44. 44 Backup Performance to Tape • Daily Backup of Backup Sets to Tape • Using 2 channels on 1 node takes 60-90 minutes (some concern here with speed) • Weekly Backup of Recovery Area to Tape • With 4 channels (2 channels per node) backing up 10.5 TB in FRA, backup duration can be highly variable. • Backup will sometimes run in 15-16 hours and other times 30+ hours! • Why the wide variance? • But first, what is expected backup rate?

- 45. 45 What is Expected Backup Rate? • LTO-2 tape drive can back up at roughly 70 MB/sec compressed (or better) • 4 drives x 70 MB/sec = 280 MB/sec (~1 TB/hr) • Is the tape rate supported by FRA disk? • RMAN – BACKUP VALIDATE DATAFILECOPY ALL • Observed rate (read phase) > 1 TB/hr • What is the effect of GigE connection to media server? • Maximum theoretical speed is 128 MB/sec • With overhead, ~115 MB/sec per node • Maximum rate from 2 nodes is 230 MB/sec (828 GB/hr) • Observed rate is more like 180 MB/sec (650 GB/hr) • Conclusion: GigE throttles overall backup rate • FRA backup time = 10.5 TB / 650 GB/hr = ~16 hrs • Something else is going on with backup time variance..
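The rate arithmetic on this slide can be reproduced in a few lines. The Python sketch below uses decimal units (1 GB = 1000 MB, 1 TB = 1000 GB), which is an assumption that matches the slide's 230 MB/sec = 828 GB/hr conversion; the function names are hypothetical.

```python
def gb_per_hour(mb_per_sec):
    """Convert MB/sec to GB/hr using decimal units."""
    return mb_per_sec * 3600 / 1000


def backup_hours(size_tb, rate_gb_per_hour):
    """Hours needed to back up size_tb at the given rate."""
    return size_tb * 1000 / rate_gb_per_hour


tape_rate = 4 * 70        # 4 LTO-2 drives at ~70 MB/sec each
network_rate = 2 * 115    # 2 nodes at ~115 MB/sec each over GigE

# The slowest stage sets the ceiling for the whole backup
ceiling = min(tape_rate, network_rate)

print(gb_per_hour(tape_rate))      # tape ceiling in GB/hr
print(gb_per_hour(network_rate))   # network ceiling in GB/hr
print(ceiling)                     # MB/sec, limited by the network
print(backup_hours(10.5, 650))     # hours at the observed 650 GB/hr
```

Because the network ceiling is below the tape ceiling, adding tape drives would not help until the GigE path is removed or widened.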

- 46. 46 Why So Much Variance in FRA Backup Time? • Three Problem Areas Identified • Link Aggregation on the Media Server • Spent a lot of time making sure this was working • Network Load Balancing from Network Switch • On occasion, 3 out of 4 RMAN channels jumped on one port of Network Interface Card (NIC) • Processor Architecture on Media Server • T2000 Chip – 1 chip x 4 cores x 4 threads • Requires setting interrupts to load balance across the 4 cores • One core completely pegged during tests

- 47. 47 Starbucks Enterprise Data Warehouse (EDW) Backup and Recovery Tuning • Starbucks Background and EDW Architecture • EDW Backup and Recovery Strategy • Issues/Challenges with Tape Backups • Course of Action to Resolve Tape Backup Performance Issue

- 48. 48 Tuning Objective • Decrease Variance in Backup Time • Increase Backup Throughput for Future Growth • EDW capacity increasing from 12->17 TB over next month • Backup window still 24 hours • Current 720 MB/s throughput will overrun window at 17 TB • Desired throughput is ~ 1 TB/hr to accommodate growth & meet backup window • Simplify Backup Hardware Architecture

- 49. 49 Proposed Solution 1 - Eliminate Separate Media Server & Install Media Server on 2 RAC Nodes • Benefits • Reduces Backup Complexity • Eliminates 1 GigE Network Bottleneck • Eliminates Network Load Balancing Issues • Easier to Monitor

- 50. 50 Proposed Solution 2 – Use NetBackup SAN Clients • Benefits • Eliminates 1 GigE Network Bottleneck • Eliminates Network Load Balancing Issues

- 51. 51 What is New Theoretical Bottleneck? • LTO-3 tape drive backs up at ~140 MB/s compressed (or better) • 2 drives (1 drive / node) x 140 MB/sec = 280 MB/s (1 TB/hr) • Is tape speed supported by FRA disk? • RMAN - BACKUP VALIDATE DATAFILECOPY ALL • Observed rate > 1 TB/hr (with 4 RMAN channels) • Is tape speed limited by connection over fiber? • Each Node has 4 x 2 Gb Fiber Connections with EMC PowerPath Multipathing software • Storage Engineer – “1.37 GB/Sec max rate for cluster.” • Two tape drives - 280 MB/s out of 1.37 GB/s • 20% of available I/O capacity utilization • FRA backup time: 10.5 TB / 1 TB/hr = 10.5 hrs • 35% performance improvement vs. today (16 hrs)
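The two percentages on this slide follow from simple ratios, shown here as a quick check. The Python sketch treats the storage engineer's 1.37 GB/sec as 1370 MB/sec (a decimal-unit assumption), and the function names are hypothetical.

```python
def utilization_pct(used, available):
    """Share of available capacity that is in use, in percent."""
    return used / available * 100


def time_reduction_pct(old_hours, new_hours):
    """Reduction in elapsed time relative to the old duration, in percent."""
    return (old_hours - new_hours) / old_hours * 100


# Two LTO-3 drives at 140 MB/sec against a 1370 MB/sec fiber ceiling
print(utilization_pct(2 * 140, 1370))

# 16 hours today versus a projected 10.5 hours
print(time_reduction_pct(16, 10.5))
```

Both results round to the slide's figures of roughly 20% utilization and a 35% improvement.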

- 52. 52 Finally – Some Real RMAN Tuning • Tests ran BACKUP VALIDATE DATAFILECOPY ALL with 2 channels • Test 1 – 2 channels on 1 node • Test 2 – 2 channels on 2 nodes (1 channel/node) • FRA disk group comprises 72 LUNs of 193 GB each • Read rate by _BACKUP_KSFQ_BUFCNT setting: – 16 (default) => 200 MB/s (720 GB/hr) – 32 => 250 MB/s (900 GB/hr) – 64 => 300 MB/s (~1 TB/hr) • 50% read rate improvement when correctly tuned • Yes, I can fully drive 2 LTO-3s with 2 channels, based on BACKUP VALIDATE testing

- 53. 53 Test 1 – 1 Node with 2 Channels • Test _BACKUP_KSFQ_BUFCNT = 16, 32, 64

- 54. 54 Test 2 – 2 Channels with 1 Channel per Node Node 1 - _BACKUP_KSFQ_BUFCNT = 16, 32, 64 Node 2 - _BACKUP_KSFQ_BUFCNT = 16, 32, 64

- 55. 55 Initial Results of Tape Backup Testing Media Server Installed on RAC Nodes •1 channel per node (2 channels total) + 2 LTO-3 Drives •Observed backup rate of 200 MB/s (720 GB/hr) vs. theoretical 280 MB/s (1 TB/hr with 2 x 140 MB/s for LTO-3) •Recall: RMAN VALIDATE (read rate) > 1 TB/hr, so RMAN not bottleneck •Other possible factors: • Database compression – Yes, but can’t account for all of the lower backup rates • Tuning – Additional performance might be gained by tuning media server parameters • Hardware Setup – HBA ports configuration or how tapes are zoned to the servers

- 56. 56 After Rezoning Tape Drives to HBAs 2 Channels with 1 Channel per Node • Node 1 ~ 145 MB/s • Node 2 ~ 120 MB/s • 33% improvement after rezoning Node 1 Backup Throughput:

- 57. 57 Four Channels with 2 Channels per Node Achieved Backup Rate ~ 1.6 TB/Hour Node 1 Backup Throughput ~240 MB/s: Node 2 Backup Throughput ~200 MB/s (due to other high query activity)

- 58. 58 Summary • Starbucks Background and EDW Architecture • EDW Backup and Recovery Strategy • Issues/Challenges with Tape Backups • Identify the bottlenecks in your system and know your theoretical backup speed • Course of Action to Resolve Tape Backup Performance Issue • Re-architect if bottleneck is hardware related • Tune RMAN parameters to get the most out of your backup hardware • 50% increase in RMAN read performance was achieved by tuning _BACKUP_KSFQ_BUFCNT • RMAN should never be the bottleneck • Keep tuning as new bottlenecks are discovered..

- 59. 59 Summary/Q&A

- 60. 60 Summary • Recovery & business requirements drive the design of backup / data protection strategy – Disk and/or tape, offload to Data Guard? • RMAN performance tuning is all about answering the question: – What is my bottleneck? (then removing it) • Determine maximum throughput/ceiling of each backup phase – Read blocks into input buffers (memory, disk I/O) – Copy to output buffers (CPU, esp. compression and/or encryption) – Write to storage media (memory, disk/tape I/O, media management/HW configuration) • Get knowledgeable with media management and tape configuration – A smarter DBA = smarter case to make with the SA!

- 61. 61 RMAN Trivia Time.. 1. In which Oracle release did RMAN first appear? 2. In which Oracle release did the multi-section backup feature first appear? 3. What is the negative effect of RMAN + tape-side multiplexing? 4. Which view reports throughput and memory buffer usage during backup? 5. How does Oracle Database 11g Release 2 RMAN with ASM behave differently in memory buffer allocation versus older releases?

- 62. 62 Key HA Sessions, Labs, & Demos by Oracle Development Monday, 20 Sep – Moscone South * 3:30p Extreme Consolidation with RAC One Node, Rm 308 4:00p Edition-Based Redefinition, Hotel Nikko, Monterey I / II 5:00p Five Key HA Innovations, Rm 103 5:00p GoldenGate Strategy & Roadmap, Moscone West, Rm 3020 Tuesday, 21 Sep – Moscone South * 11:00a App Failover with Data Guard, Rm 300 12:30p Oracle Data Centers & Oracle Secure Backup, Rm 300 2:00p ASM Cluster File System, Rm 308 2:00p Exadata: OLTP, Warehousing, Consolidation, Rm 103 3:30p Deep Dive into OLTP Table Compression, Rm 104 3:30p MAA for E-Business Suite R12.1, Moscone West, Rm 2020 5:00p Instant DR by Deploying on Amazon Cloud, Rm 300 Wednesday, 22 Sep – Moscone South * 11:30a RMAN Best Practices, Rm 103 11:30a Database & Exadata Smart Flash Cache, Rm 307 11:30a Configure Oracle Grid Infrastructure, Rm 308 1:00p Top HA Best Practices, Rm 103 1:00p Exadata Backup/Recovery Best Practices, Rm 307 4:45p GoldenGate Architecture, Hotel Nikko, Peninsula Thursday, 23 Sep – Moscone South * 10:30a Active Data Guard Under the Hood, Rm 103 1:30p Minimal Downtime Upgrades, Rm 306 3:00p DR for Database Machine, Rm 103 Hands-on Labs Marriott Marquis, Salon 10 / 11 Monday, Sep 20, 12:30 pm - 1:30 pm Oracle Active Data Guard Tuesday, Sep 21, 5:00 pm - 6:00 pm Oracle Active Data Guard Demos Moscone West DEMOGrounds Mon & Tue 9:45a - 5:30p; Wed 9:00a - 4:00p Maximum Availability Architecture (MAA) Oracle Active Data Guard Oracle Secure Backup Oracle Recovery Manager & Flashback Oracle GoldenGate Oracle Real Application Clusters Oracle Automatic Storage Management * All session rooms are at Moscone South unless otherwise noted * After Oracle OpenWorld, visit http://www.oracle.com/goto/availability


